Announcements

REMINDER: Sign up for the Foundry Newsletter to receive a summary of new products, features, and improvements across the platform directly to your inbox. For more information on how to subscribe, see the Foundry Newsletter and Product Feedback channels announcement.

Share your thoughts about these announcements in our Developer Community Forum ↗.

Track freshness of object data with Workshop's Data Freshness widget

Date published: 2025-07-31

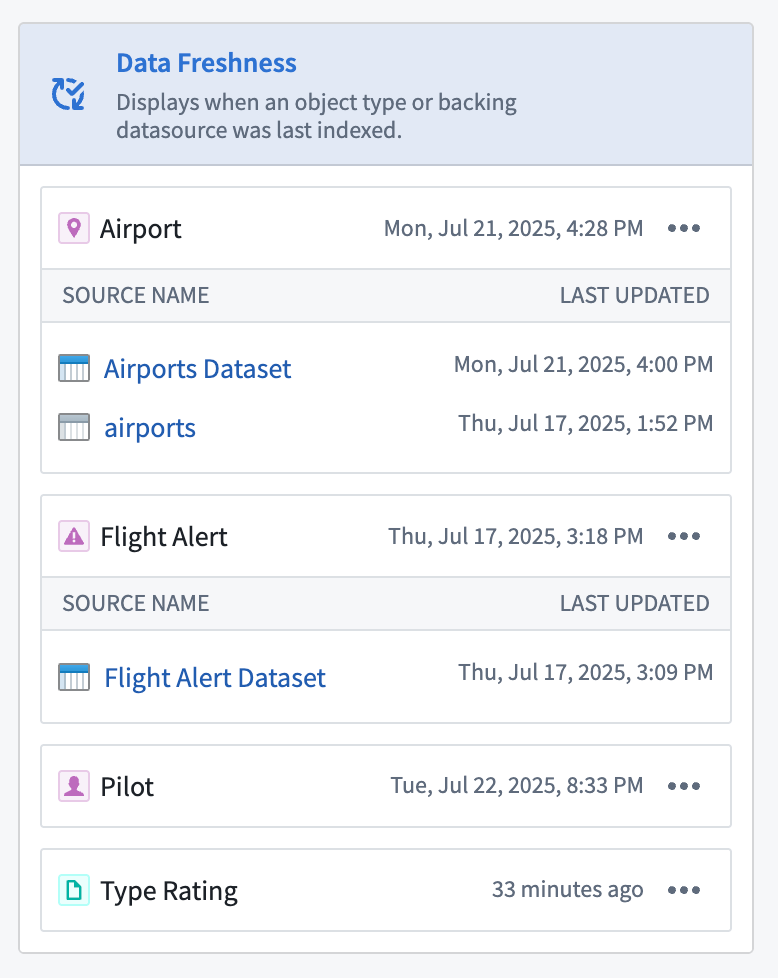

Workshop users can now easily track the freshness of data directly within their application using the new Data Freshness widget. This widget provides users with greater visibility into the state of their data and allows builders to more easily catch any unexpected staleness. Builders may select the object types and backing datasources they wish to display using the widget. The Last updated timestamp shown next to each object type and datasource corresponds to when the object type or datasource was last indexed.

The Data Freshness widget shows when each object type and data source was last updated.

Refer to the Data Freshness widget documentation to learn more about configuring the widget.

Your feedback matters

We want to hear about your experiences with Workshop and welcome your feedback. Share your thoughts with Palantir Support channels or on our Developer Community ↗ using the workshop tag ↗.

Looping over arrays of structs and embedded module creation from loop layout configuration in Workshop

Date published: 2025-07-31

Loop over arrays of structs

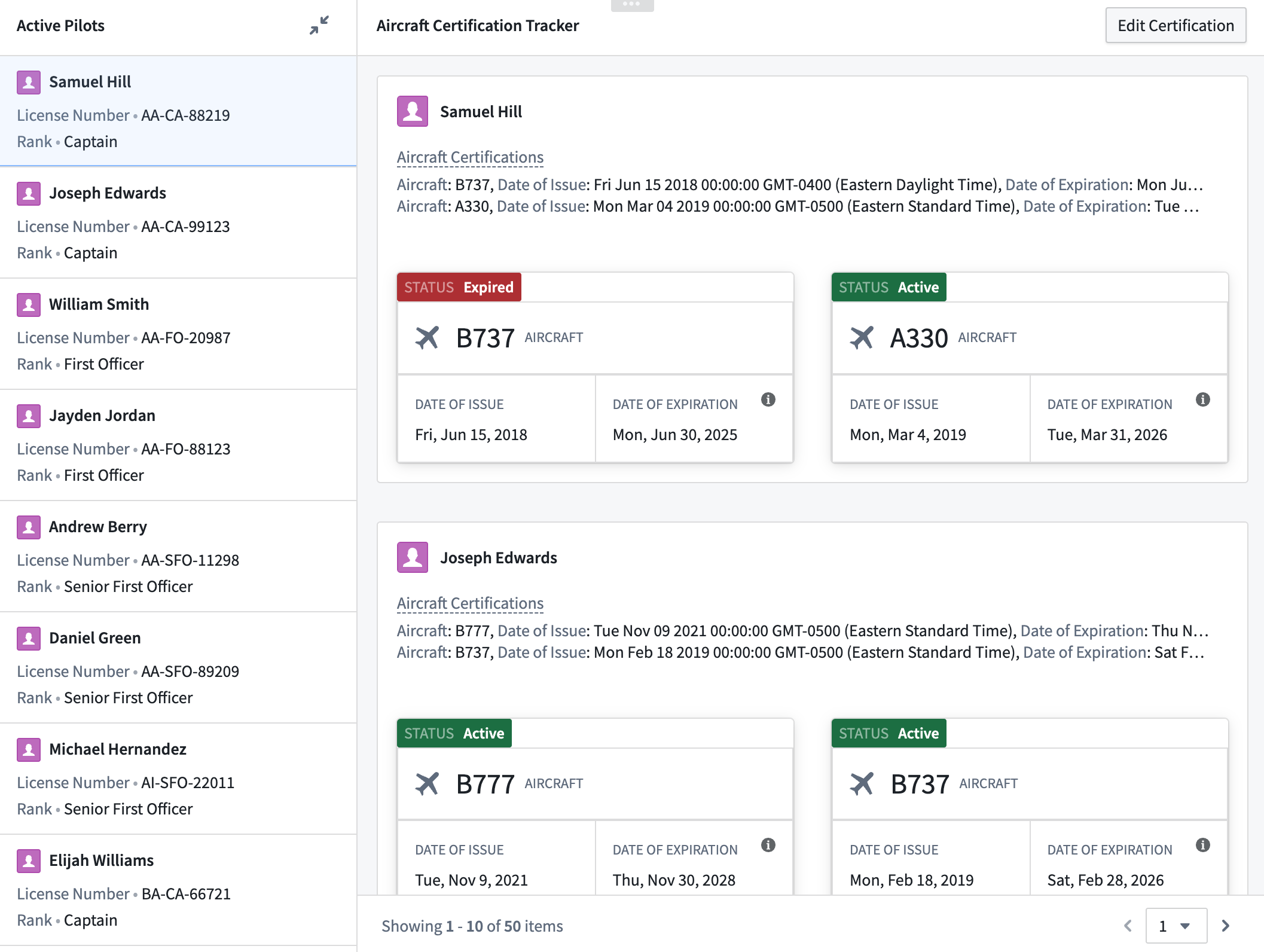

Workshop builders may now configure loop layouts to use arrays of structs by passing in struct array variables output from a function or via an object property. This enables more performant looping setups, especially in cases where nested looped layouts are used.

For example, a builder may configure the display of object properties by constructing an array of structs using a function and passing it into a looped section, rather than looping through each object within an object set and loading each individual object’s properties. This approach reduces the number of network calls within a module and also offers greater flexibility when displaying data in a module, allowing builders to loop over and display non-ontologized data by constructing it as an array of structs output from a function.
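As a rough illustration of the pattern described above, the following Python sketch builds one array of structs in a single function call rather than loading each object's properties separately. The `Pilot` record, the `CertificationStruct` fields, and the `build_certification_structs` function are hypothetical names chosen for this example; the code is plain Python and does not represent the Foundry functions API.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class CertificationStruct:
    # Hypothetical struct fields; a real struct schema is defined by the builder.
    pilot_name: str
    aircraft: str
    valid_until: str


@dataclass(frozen=True)
class Pilot:
    # Hypothetical stand-in for an ontology object.
    name: str
    certifications: List[Tuple[str, str]]  # (aircraft, valid_until) pairs


def build_certification_structs(pilots: List[Pilot]) -> List[CertificationStruct]:
    """Flatten every pilot's certifications into one array of structs."""
    return [
        CertificationStruct(pilot.name, aircraft, valid_until)
        for pilot in pilots
        for aircraft, valid_until in pilot.certifications
    ]


pilots = [
    Pilot("A. Rivera", [("A320", "2026-01-31"), ("B737", "2025-12-15")]),
    Pilot("J. Chen", [("A350", "2027-03-01")]),
]
structs = build_certification_structs(pilots)
```

Each element of `structs` corresponds to one iteration of a looped section, so the layout receives all of its display data from a single function output.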

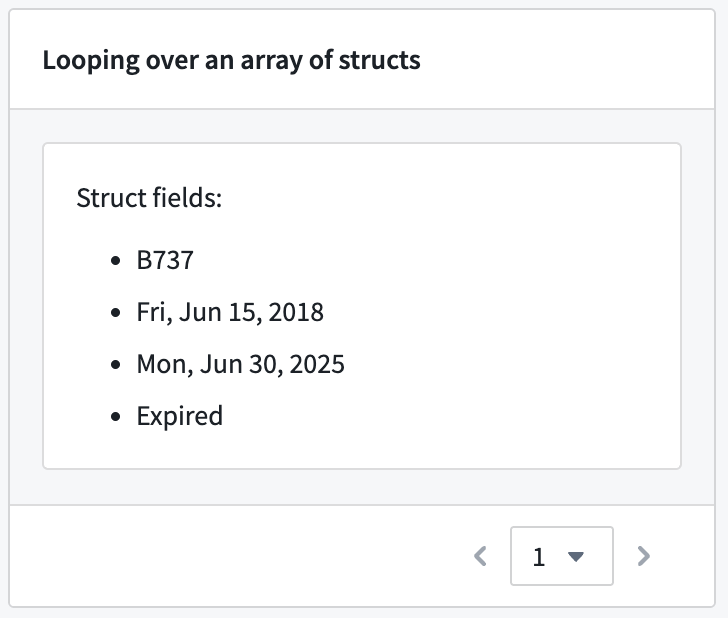

Example of a nested loop layout setup. The outer section loops over a Pilot object set and displays the Aircraft certification object property, which is typed as an array of structs, in a Property List widget. The inner section loops over the Aircraft certification property and displays each struct field’s value using Metric Card widgets.

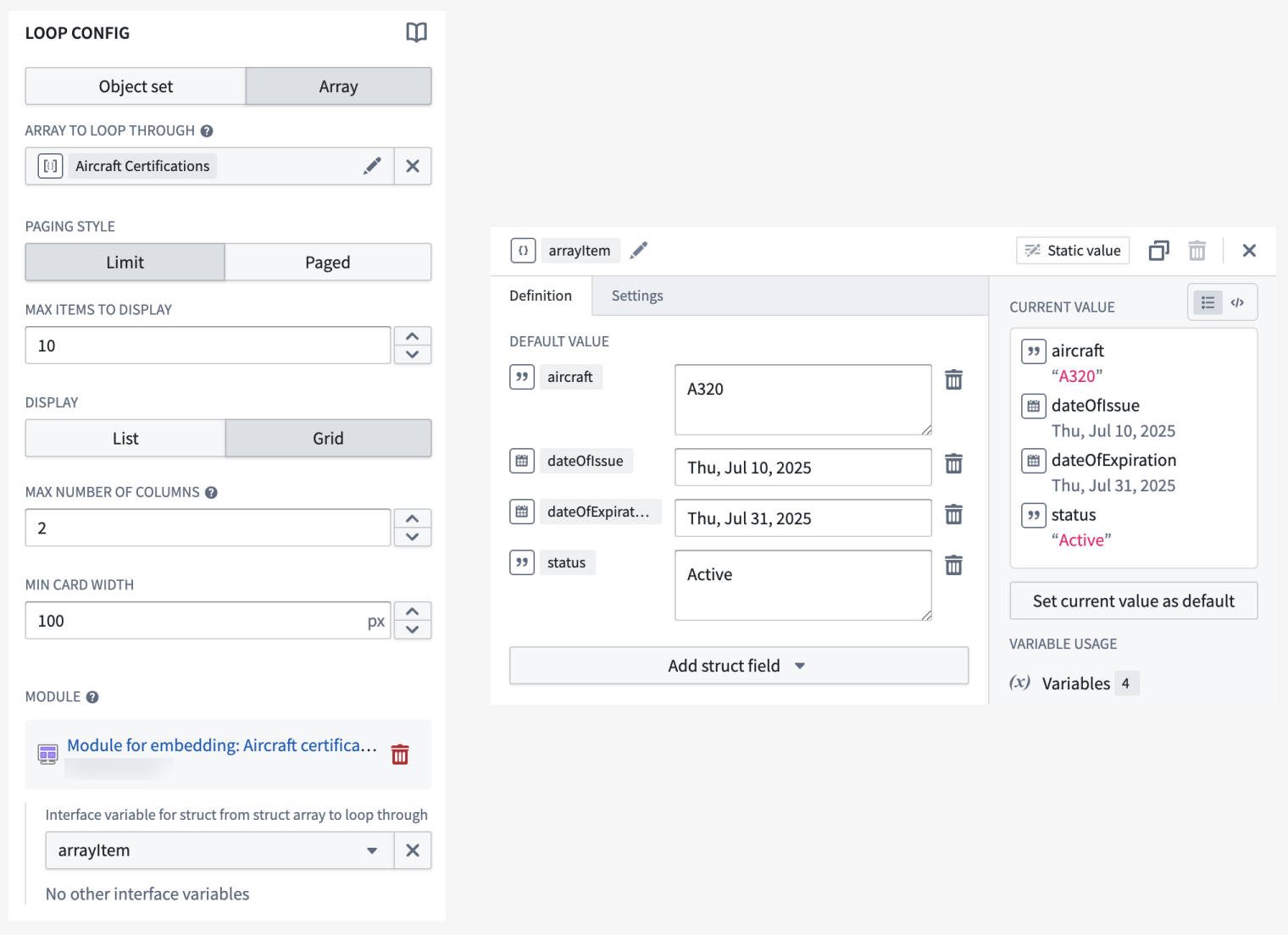

Create embedded modules from loop layout configuration

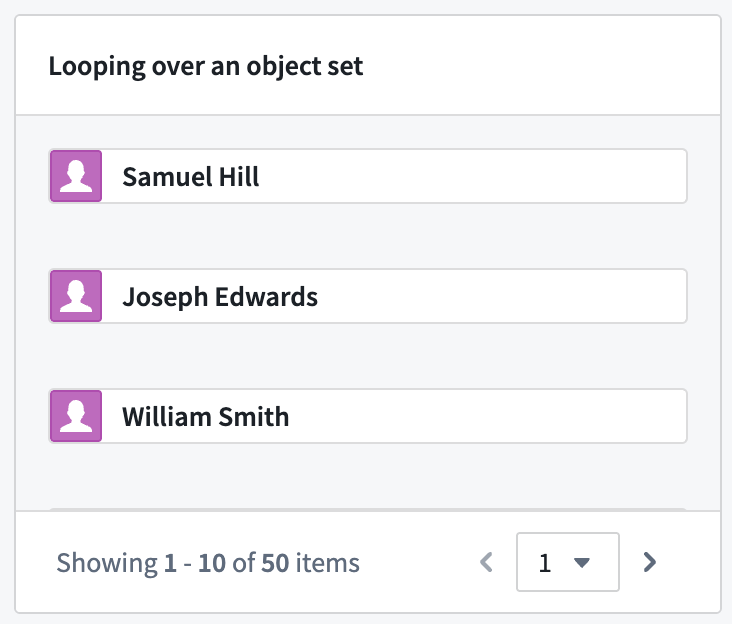

The loop layout configuration experience has been improved to allow the creation and use of an embedded module directly within the parent module. Embedded modules created using this method will feature a basic module with a preset module interface variable and a widget for display, allowing builders to quickly see their looped section in action.

Looped sections configured to use object sets will feature an Object Set Title widget, and looped sections configured to use arrays will feature a Markdown widget. When using an array of structs, the struct module interface variable will contain a predefined schema matching that of the struct item within the array, and the Markdown widget will display each field within the struct.

Section looping over an object set using an embedded module created from the parent module’s loop layout configuration.

Section looping over an array of structs using an embedded module created from the parent module’s loop layout configuration.

For more information on both features, review the documentation on struct variables and loop layout.

Your feedback matters

We want to hear about your experience with Workshop and welcome your feedback. Share your thoughts with Palantir Support channels or on our Developer Community ↗ using the workshop tag ↗.

Python and TypeScript v2 Functions with OSDKs are now compatible with Marketplace

Date published: 2025-07-31

Python and TypeScript v2 Functions that use OSDKs can now be included as content in Marketplace products.

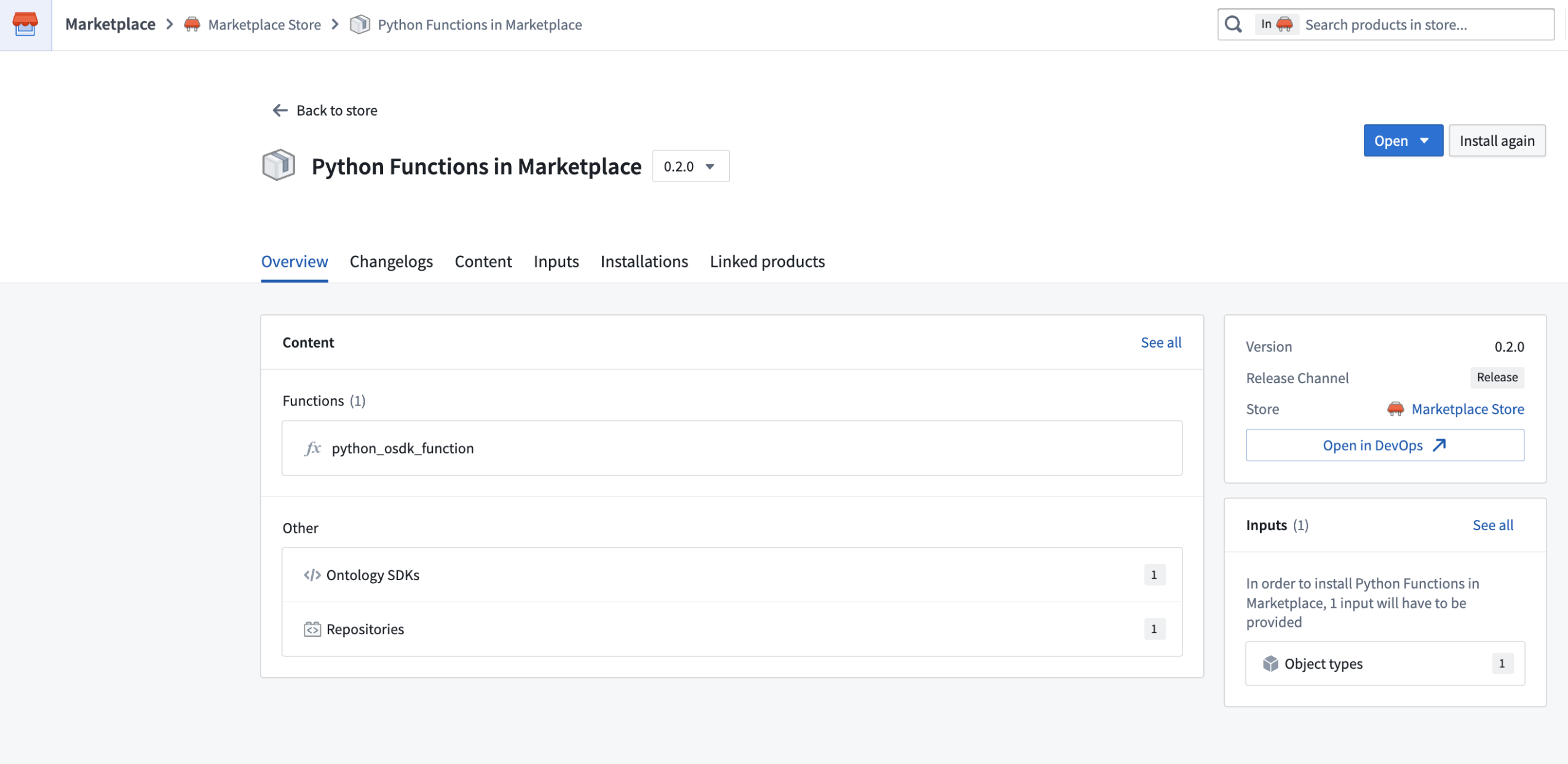

Packaging a Python function that uses an OSDK in a Marketplace product.

When you add a function that uses an OSDK to a Marketplace product, that function’s OSDKs will be automatically added for you. Additionally, all ontology entities used in your OSDK will be added as inputs. When you install your Marketplace product, you can reconfigure each of the entities used by your OSDK to instead reference entities in the ontology where it is being installed.

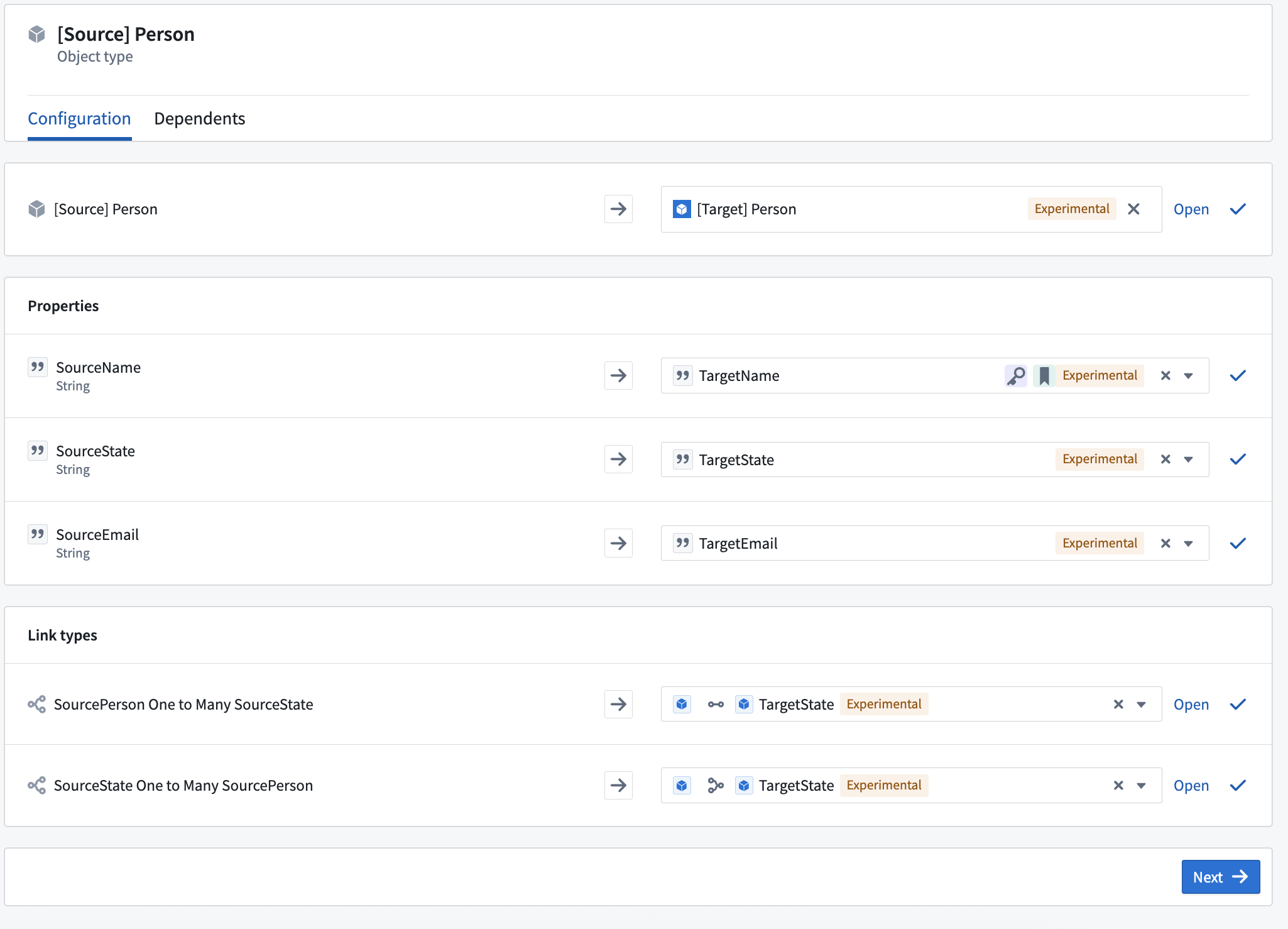

Reconfiguring an object used by an OSDK when installing a Marketplace product containing a Python function.

New configuration options for deployed Python functions and consolidated settings

Date published: 2025-07-31

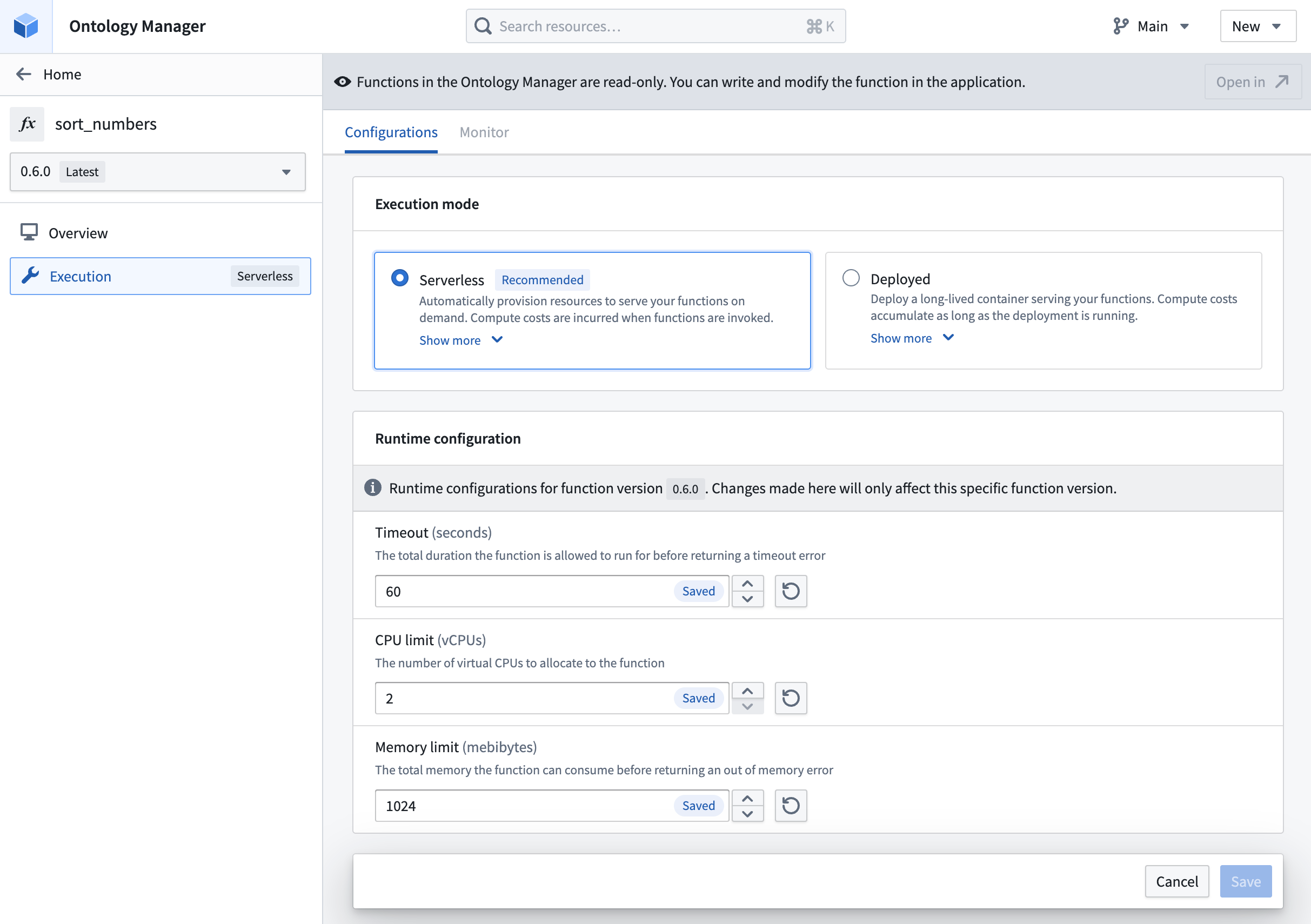

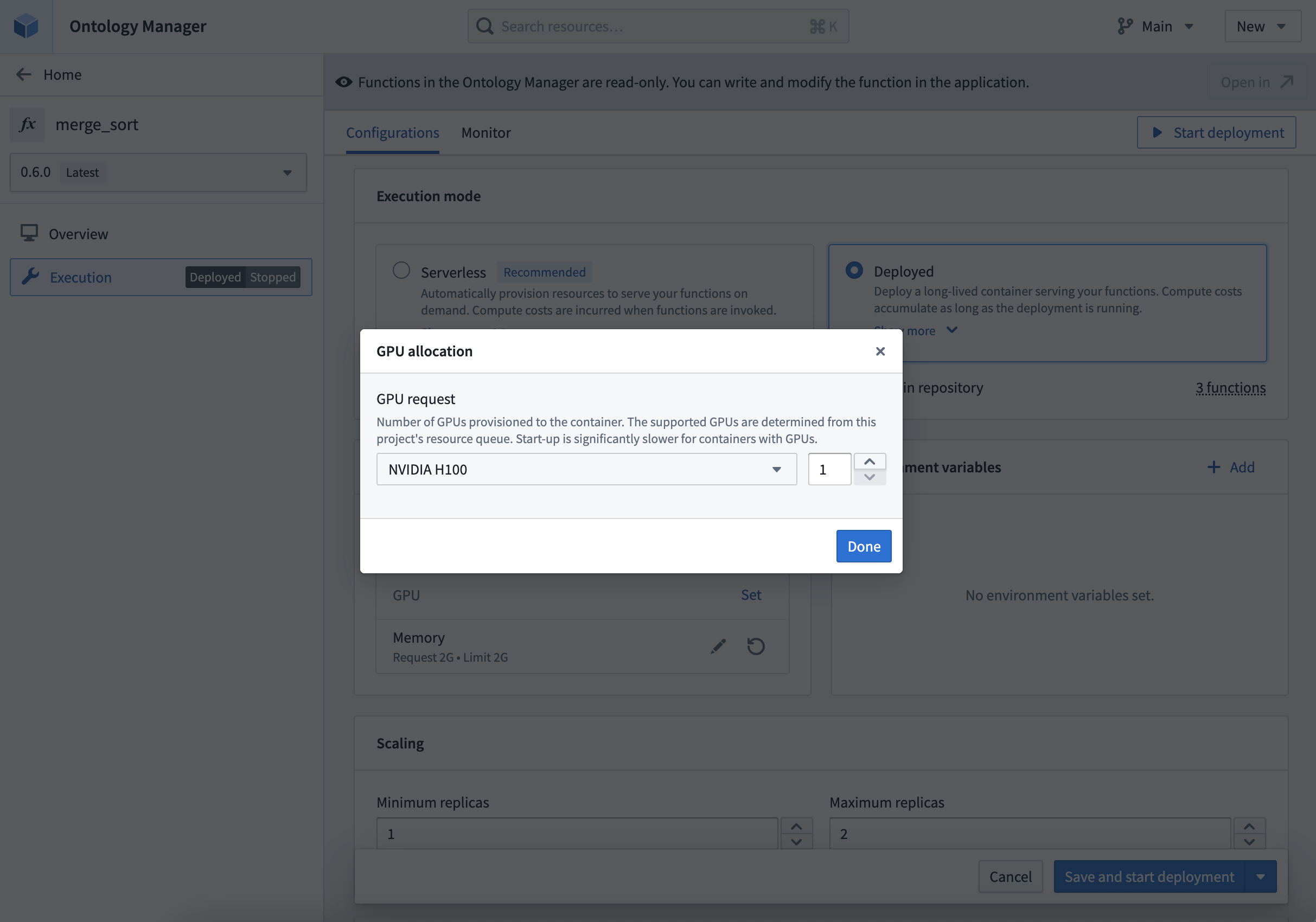

New configuration options for deployed Python functions are now available, providing increased visibility, improved usability, and an improved transition process for zero-downtime upgrades. This update allows users to allocate GPU resources for deployed Python functions, accelerating computationally intensive model training and inference workflows through parallel processing. Access these and other Python function deployment settings in Ontology Manager's new Execution page, which combines the previous Deployment and Configuration pages.

Modify timeout, CPU limit, and memory limit for functions in serverless mode.

Deployment options

- Compute resources: Specify CPU, GPU, and memory resources for the deployment. In addition to the requested amount, you can also specify the limit for CPU and memory resources.

- Horizontal autoscaling parameters: Specify the minimum and maximum number of replicas that can be launched for the deployment, ensuring effective use of compute resources based on demand.

- Environment variables: Specify environment variables for the deployment upon startup.

- Execution timeout: The total duration that the function is allowed to run before returning a timeout error. Unlike the other deployment settings, execution timeout is configured individually for each function version.
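The relationship between these settings can be sketched in Python as a simple sanity check. This is only an illustration: the `DeploymentConfig` field names and the `validate` function are hypothetical, and the rules shown (requests must not exceed limits, minimum replicas must not exceed maximum) are assumptions about sensible values rather than the platform's documented validation.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class DeploymentConfig:
    # Hypothetical field names; the real settings are configured in Ontology Manager.
    cpu_request: float
    cpu_limit: float
    memory_request_gb: float
    memory_limit_gb: float
    gpu_count: int = 0
    min_replicas: int = 1
    max_replicas: int = 1
    env: Dict[str, str] = field(default_factory=dict)


def validate(config: DeploymentConfig) -> List[str]:
    """Return a list of human-readable problems; an empty list means no issues found."""
    problems: List[str] = []
    if config.cpu_request > config.cpu_limit:
        problems.append("CPU request exceeds CPU limit")
    if config.memory_request_gb > config.memory_limit_gb:
        problems.append("memory request exceeds memory limit")
    if config.gpu_count < 0:
        problems.append("GPU count cannot be negative")
    if config.min_replicas > config.max_replicas:
        problems.append("minimum replicas exceeds maximum replicas")
    return problems


good = DeploymentConfig(cpu_request=1, cpu_limit=2, memory_request_gb=2,
                        memory_limit_gb=4, min_replicas=1, max_replicas=3)
bad = DeploymentConfig(cpu_request=4, cpu_limit=2, memory_request_gb=2,
                       memory_limit_gb=4, min_replicas=5, max_replicas=2)
```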

Allocate GPUs based on availability in the project's resource queue.

Get started

To get started, review the step-by-step guide to configure a Python function deployment. Refer to the documentation when deciding between deployed or serverless function execution modes.

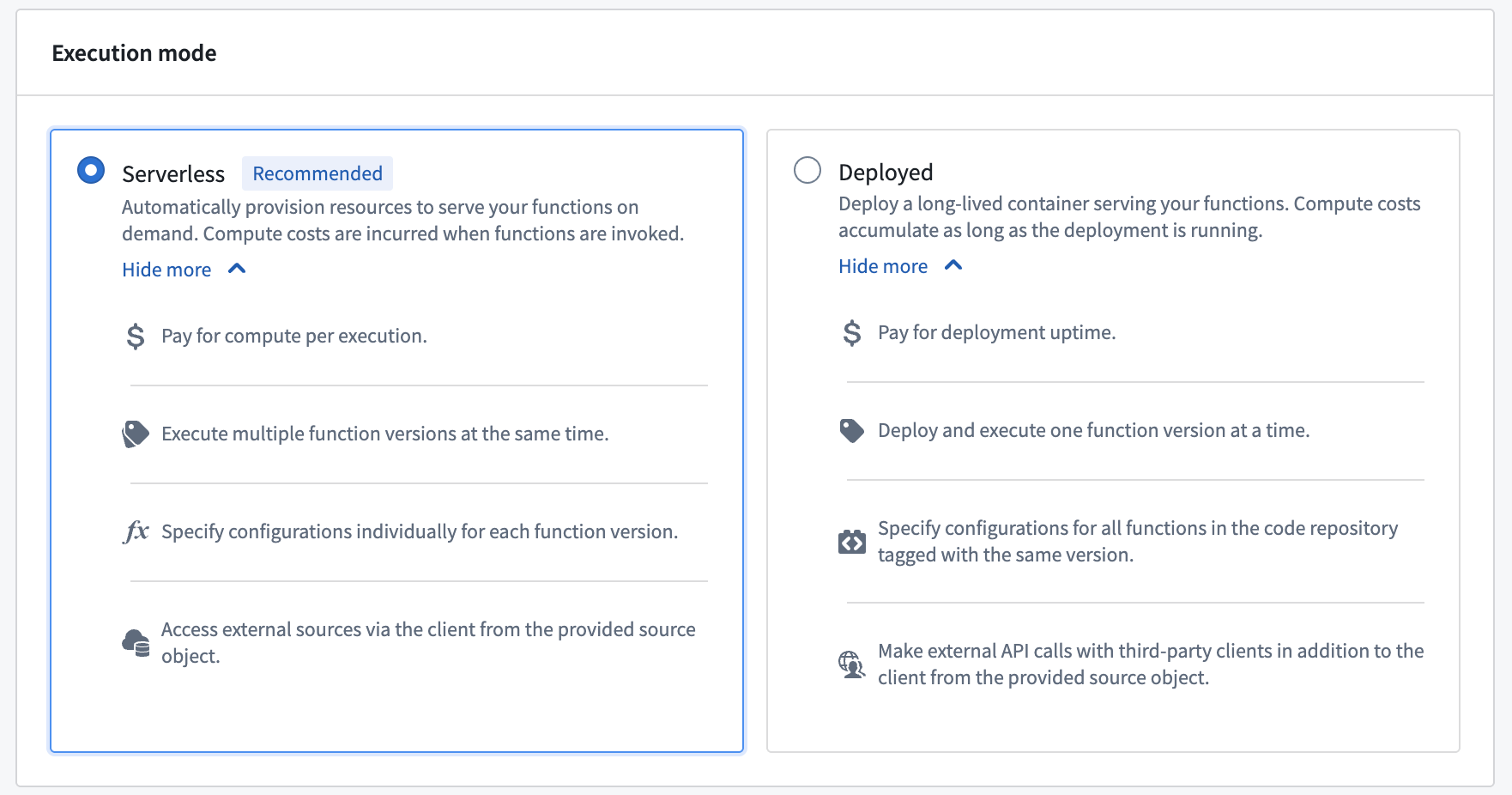

Comparison of serverless and deployed execution modes.

What's next?

We are working on supporting the capability to configure deployments for TypeScript v2 functions. As we continue to improve function execution mode capabilities, we want to hear about your experiences and welcome your feedback. Share your thoughts with Palantir Support channels, or on our Developer Community ↗ using the functions tag ↗.

AIP model family enablement now supported at the organization level

Date published: 2025-07-29

In AIP, enrollment administrators can enable model families for an entire enrollment. Model families represent contractual agreements with model providers for a set of models sharing the same legal and compliance status. As of this week, this capability has been expanded to support organization-level enablement on all AIP-enabled enrollments. Administrators can now grant specific organizations within the same enrollment access to a model family, while restricting others from using it.

To configure model family enablement at the organization level, go to Control Panel, and then navigate to AIP settings → Model enablement.

Similar to AIP enablement, for a project to use models from a model family, all organizations associated with the project must have that model family enabled.

For example, in the scenario shown below, only one organization in the enrollment, Test1, is provided access to AWS Bedrock Claude models. If a project’s AIP Logic resource is linked to both the Test1 and Test2 organizations, the resource will not display any AWS Bedrock Claude models, because Test2 does not have access to this model family.
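The rule that every organization associated with a project must have a model family enabled amounts to a set intersection. The Python sketch below illustrates that logic only; the `available_model_families` function and the "Other family" entry are hypothetical and are not part of any Palantir API.

```python
from typing import Dict, Iterable, Set


def available_model_families(
    project_orgs: Iterable[str],
    enabled_by_org: Dict[str, Set[str]],
) -> Set[str]:
    """Return the model families enabled for every organization on a project."""
    orgs = list(project_orgs)
    if not orgs:
        return set()
    # Start from the first organization's families and narrow with each other one.
    families = set(enabled_by_org.get(orgs[0], set()))
    for org in orgs[1:]:
        families &= enabled_by_org.get(org, set())
    return families


# Mirrors the Test1 / Test2 scenario; "Other family" is a made-up placeholder.
enabled_by_org = {
    "Test1": {"AWS Bedrock Claude", "Other family"},
    "Test2": {"Other family"},
}
shared = available_model_families(["Test1", "Test2"], enabled_by_org)
```

Here `shared` excludes the AWS Bedrock Claude family because Test2 does not have it enabled.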

You can now restrict model family usage at the organization level in Control Panel on the AIP settings page under Model family enablement.

Review the documentation on restricting AIP usage through organizations for additional information.

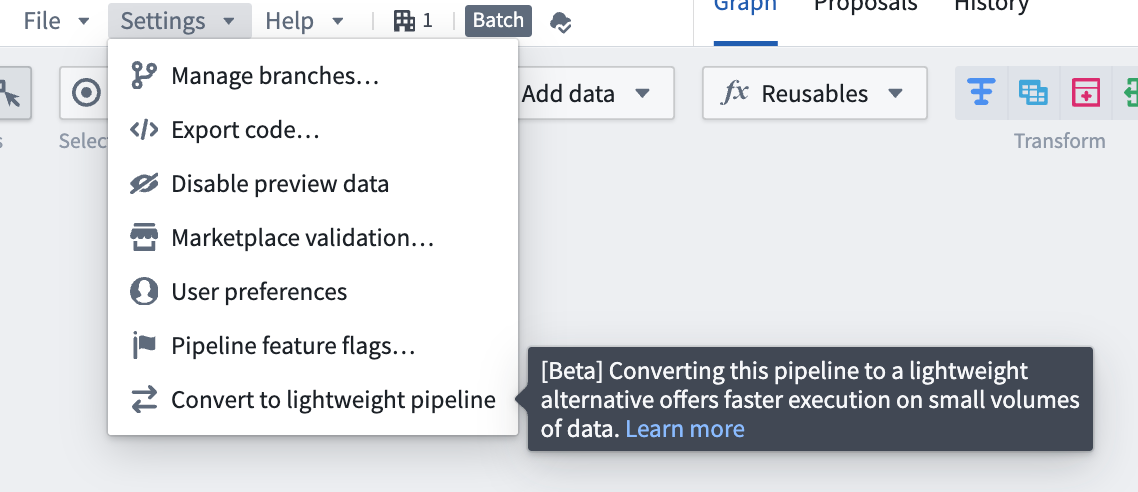

Lightweight pipelines now available in Pipeline Builder [Beta]

Date published: 2025-07-29

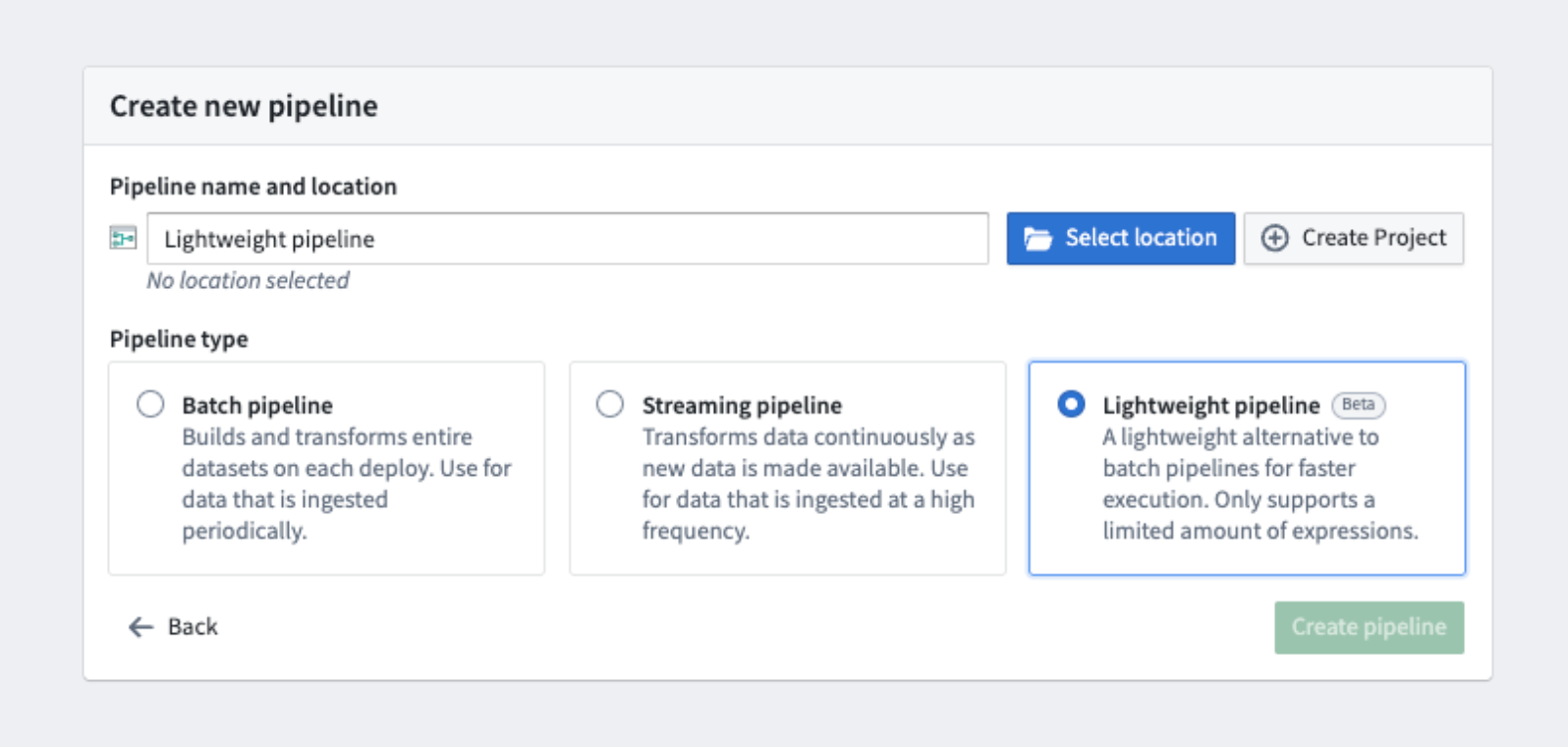

Lightweight pipelines [Beta] are now available in Pipeline Builder, delivering faster execution for batch and incremental pipelines. This feature is particularly beneficial when working with small to medium-sized datasets. Compared to traditional Spark-based pipelines, lightweight pipelines can significantly accelerate compute processes for pipelines that typically run in under fifteen minutes.

What are lightweight pipelines?

Lightweight pipelines are powered by DataFusion ↗, an open-source query engine written in Rust.

Key features:

- Faster build times: Optimized for rapid, low-latency execution.

- Ideal for small and medium datasets: Substantial speed improvements over standard batch pipelines.

- Flexible experimentation: Easily test and compare lightweight pipelines with your existing configurations and switch back to batch in one go using the settings panel.

Get started

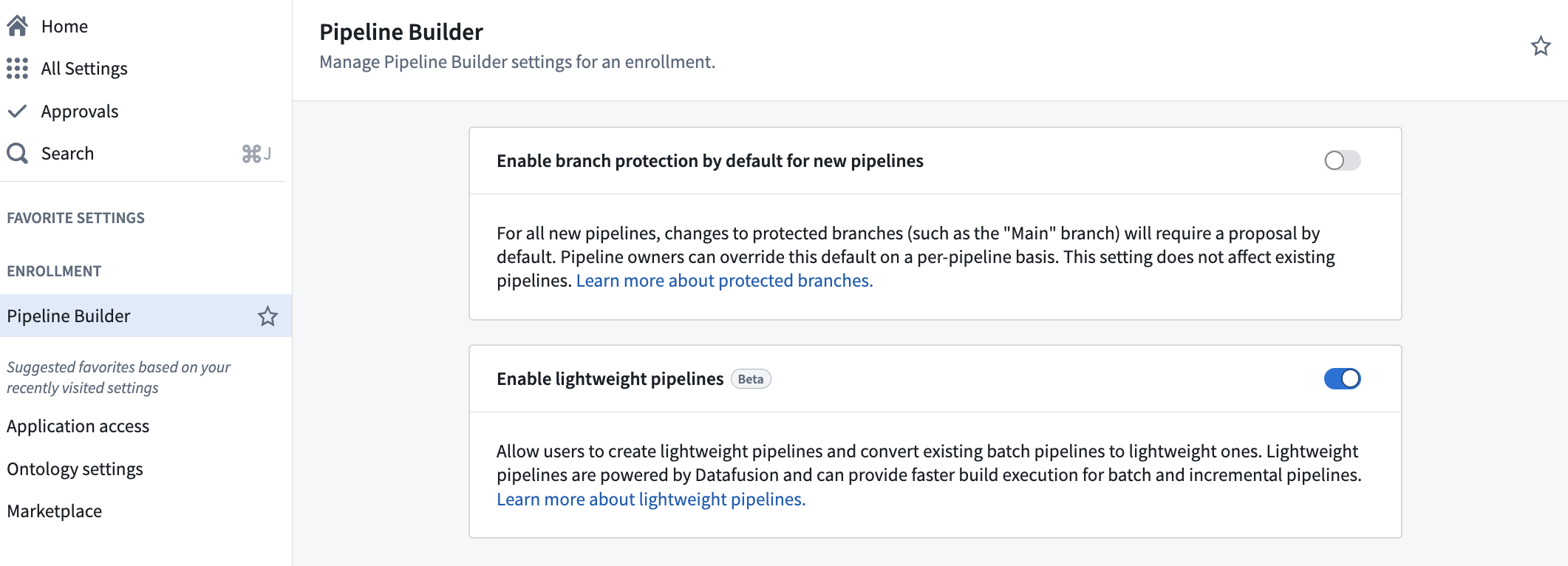

Lightweight pipelines are currently in beta and must be enabled for your organization. To do so, navigate to Control Panel, select your enrollment, and select All settings > Application configuration > Pipeline Builder. In the Pipeline Builder settings, toggle on Enable lightweight pipelines.

The Enable lightweight pipelines option in Control Panel.

To create a new lightweight pipeline, select Create new pipeline in Pipeline Builder, and choose the Lightweight pipeline option during configuration.

The option to select a lightweight pipeline during pipeline creation.

To convert existing pipelines to lightweight pipelines, select Convert to lightweight pipeline in the Settings dropdown, and ensure that your pipeline is compatible. You will receive a warning if your pipeline is incompatible.

The Convert to lightweight pipeline option in pipeline settings.

Note that functionality may change as lightweight pipelines continue to be developed. Lightweight pipelines currently support a subset of the features supported by batch pipelines. Currently unsupported features include the Use LLM node, media set operations, and geospatial functionality.

We recommend verifying your results using preview, or by examining build outputs, especially when converting between pipeline types. You can experiment with lightweight pipelines by testing them on a branch or making a copy of an existing pipeline. Evaluate performance metrics and discover how lightweight pipelines can optimize your workflows.

Learn more about lightweight pipelines in Pipeline Builder.

Your feedback matters

We want to hear about your experiences with Pipeline Builder and welcome your feedback. Share your thoughts with Palantir Support channels or on our Developer Community ↗ using the pipeline-builder tag ↗.

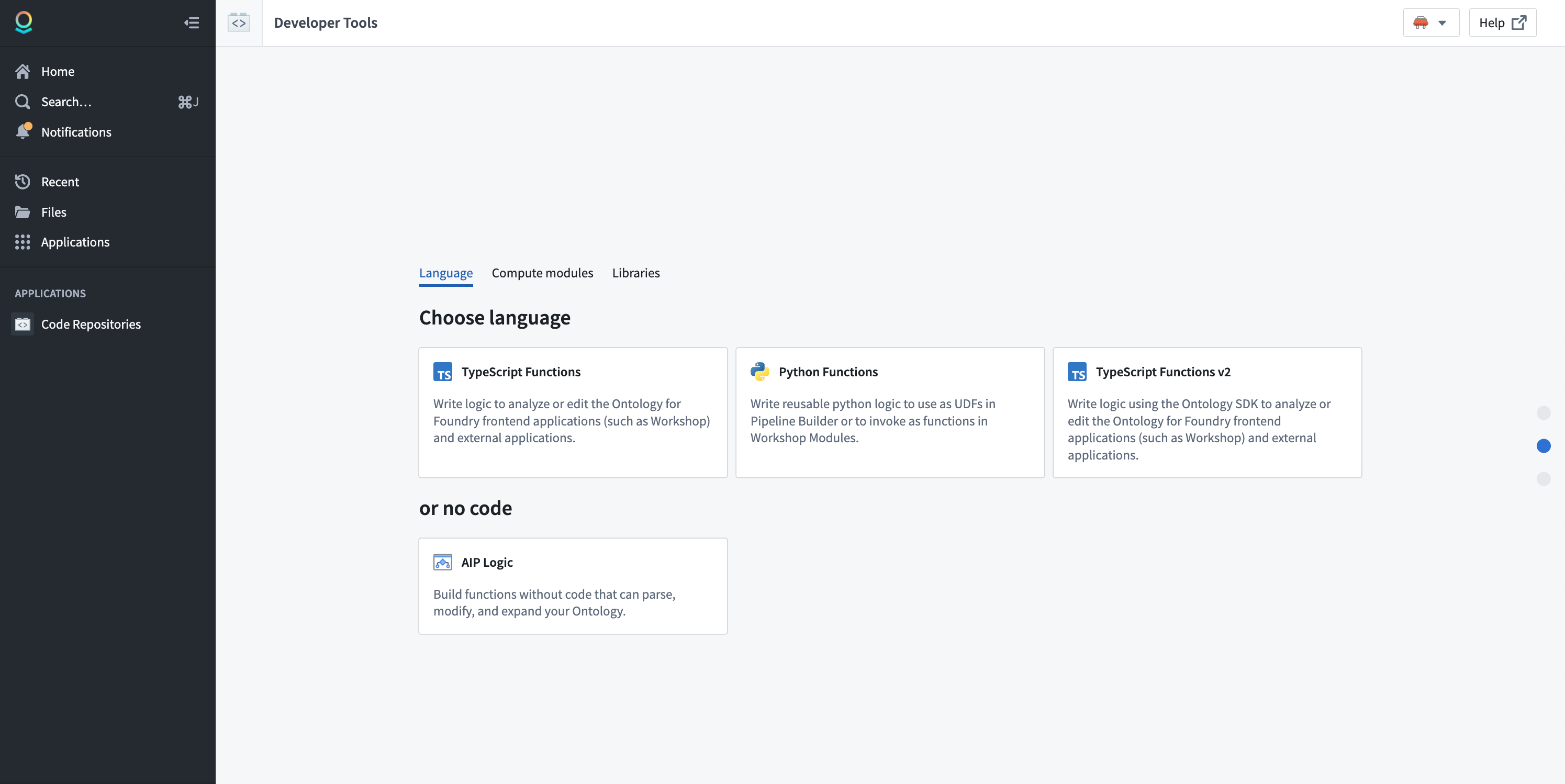

Python functions are now available [GA]

Date published: 2025-07-28

Starting the week of July 28, Python functions will be generally available for all enrollments. Get started with Python functions by creating a new code repository with the Python Functions template.

Choose the Python Functions template in Code Repositories.

Note that some features are not yet supported in Python functions. Review the feature support matrix to decide which template is best for you.

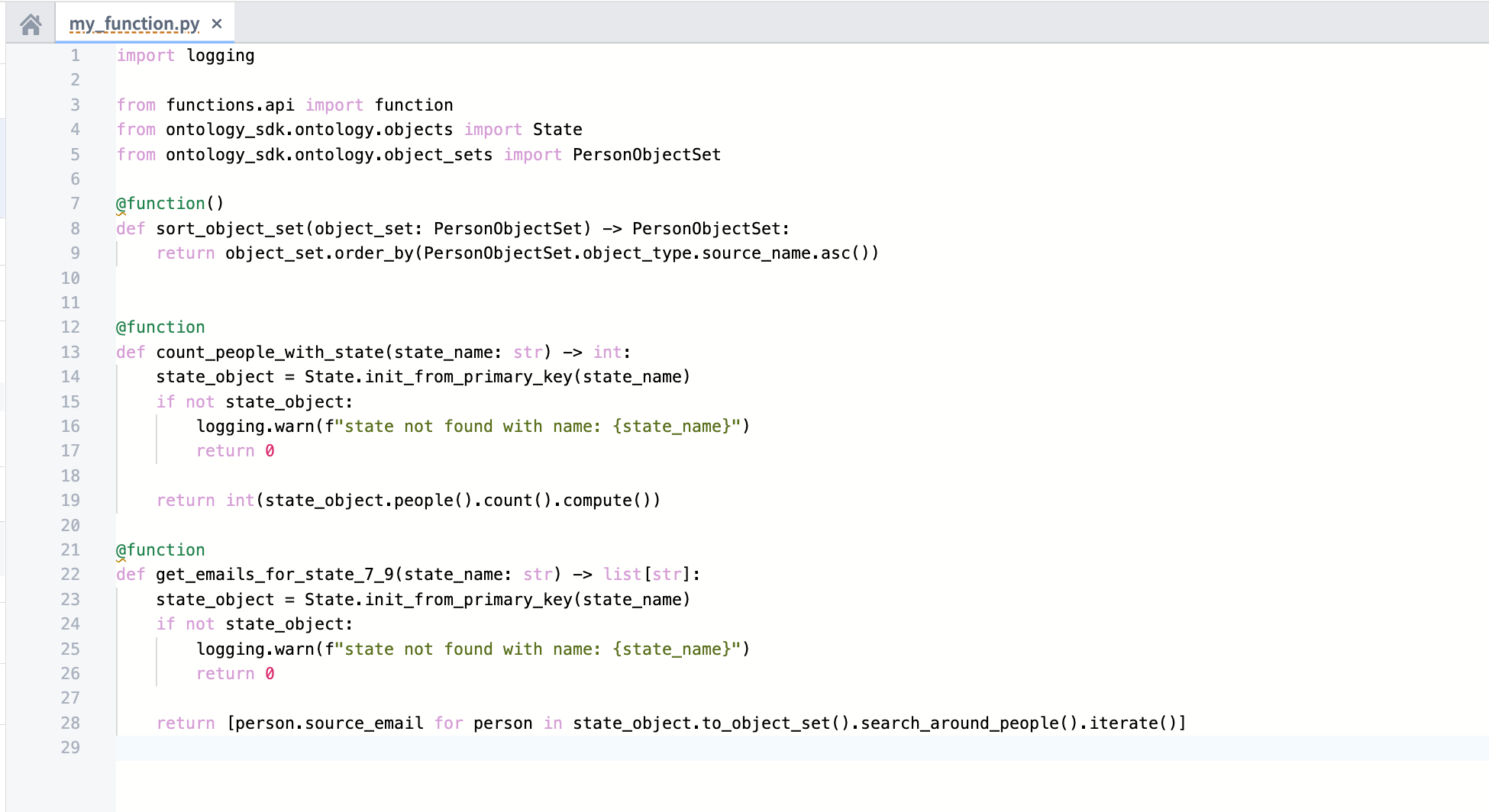

First-class support for OSDK

Python functions are designed around the Ontology SDK (OSDK). You no longer need to learn how to use different SDKs to write your functions. Additionally, powerful Python OSDK tools like property sub-selection will allow you to only load the data you need at a given time.

Use Python functions with the OSDK in your code repository.

You can find documentation generated for your OSDK in the Resource imports section of the code repository sidebar, providing you with examples of how to write code using your specific ontology.

Review OSDK documentation for Python functions in your code repository sidebar.

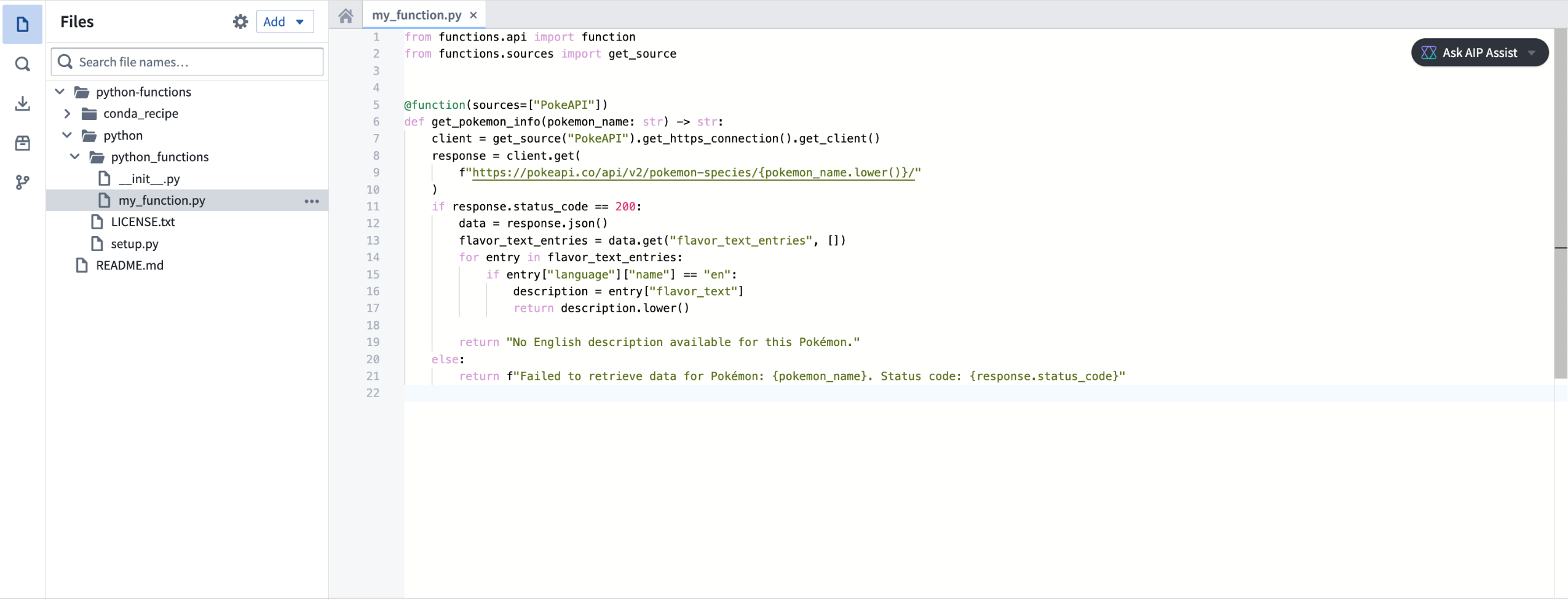

Make API calls

You can make API calls to external systems from Python functions. Use the provided functions.sources library to obtain pre-configured clients that ensure compatibility with the Python networking environment.

Make API calls from Python functions using the functions.sources library.

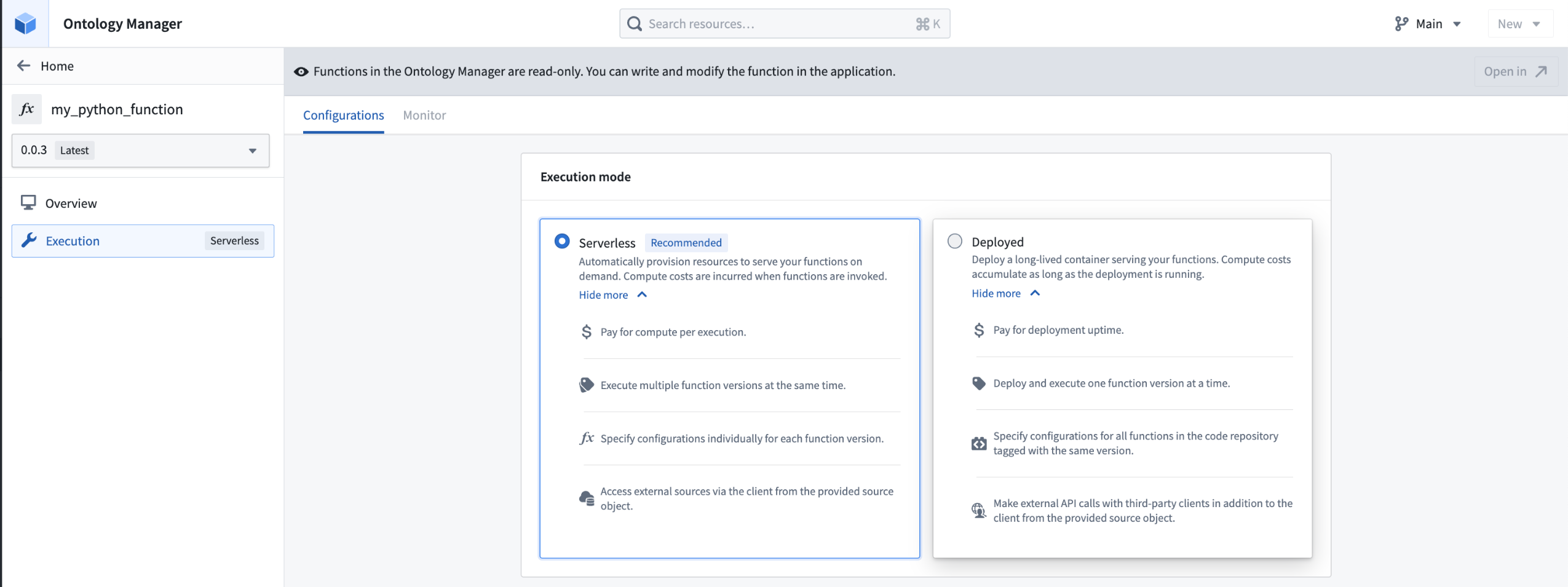

Flexible deployment modes

Python functions can run in either serverless or server-backed modes. This gives you the flexibility and low overhead of serverless functions, while also allowing you to use a stateful server if you need higher availability or want to keep stateful caches. Write your functions once, and decide on your deployment model later.

Choose either a serverless or server-backed deployment mode for your Python function in Ontology Manager.

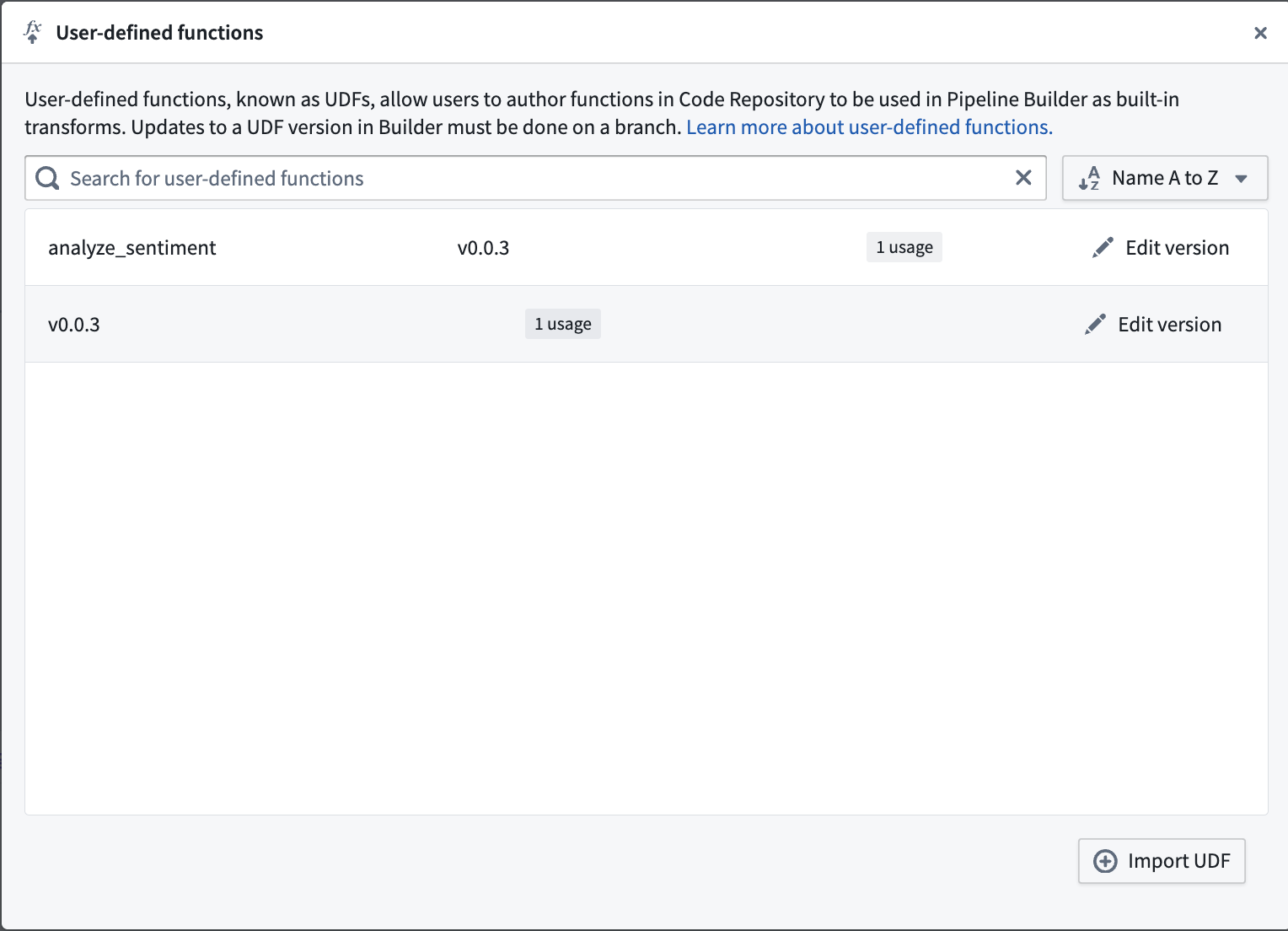

Compatibility with pipeline UDFs

Python functions can be used as user-defined functions (UDFs) in pipelines, allowing you to seamlessly integrate pro-code logic into existing pipelines.

Use a Python function as a UDF in Pipeline Builder.

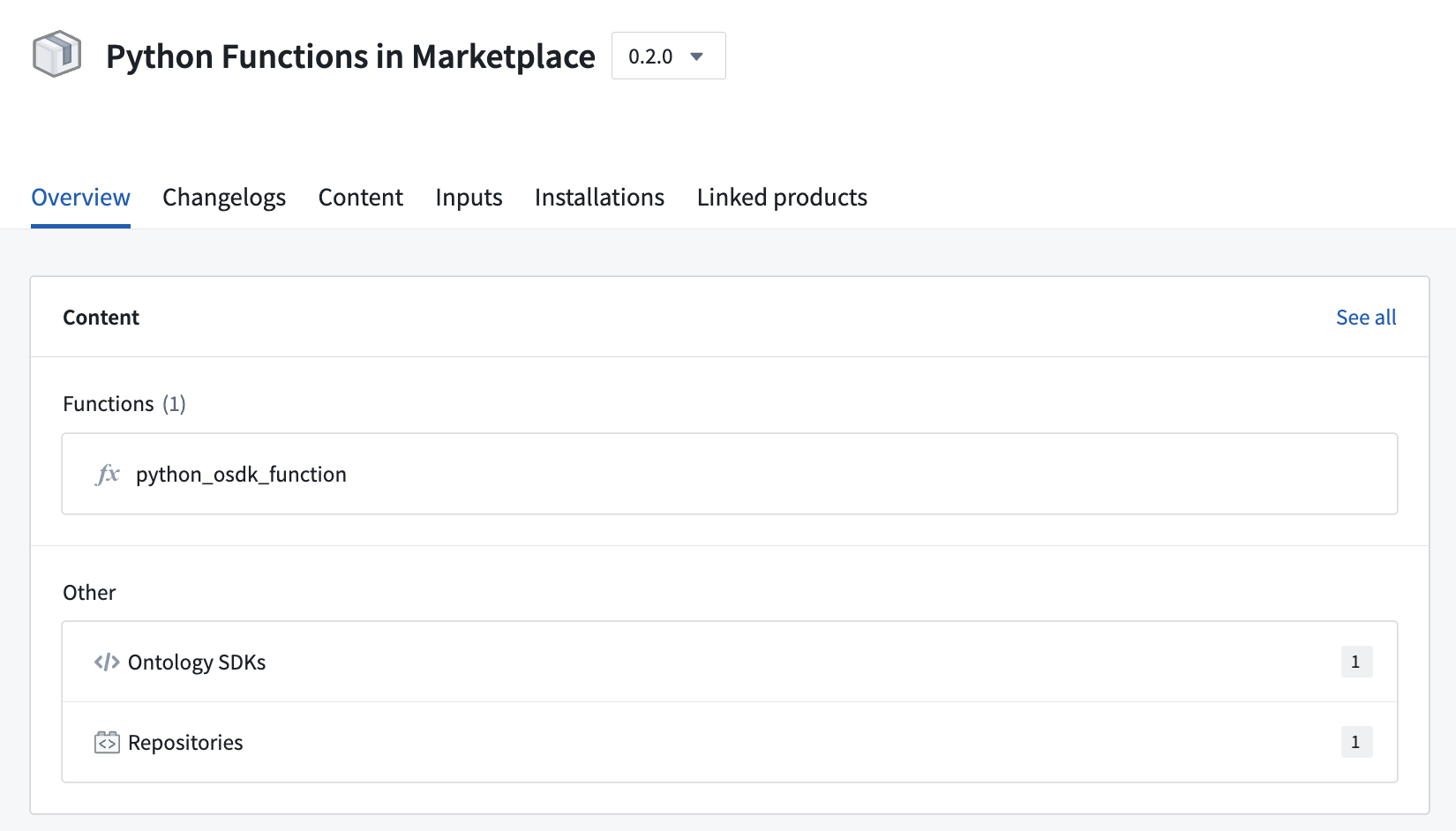

Deploy Python functions through Marketplace

Python functions can be deployed through Marketplace, enabling you to distribute products containing Python functions within your organization and the Foundry community. Marketplace integration includes support for any usage of the OSDK in your function.

An example of a Python function deployed through Marketplace.

Additional resources

Learn more about Python functions in our documentation, or refer to the README.md file contained within a Python functions repository for more information.

TypeScript v2 functions are now available [GA]

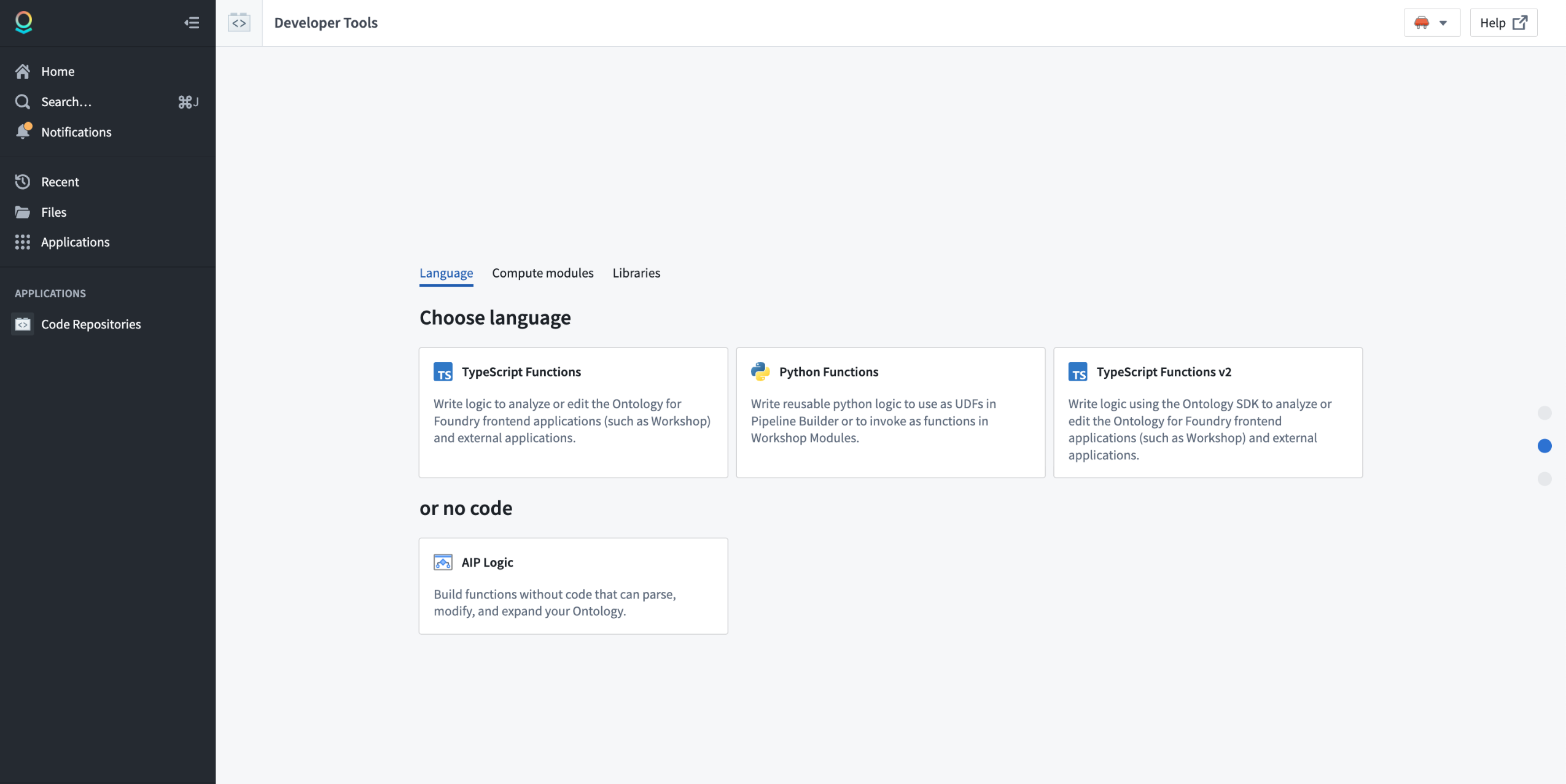

Date published: 2025-07-28

Starting the week of July 28, TypeScript v2 functions will be generally available for all enrollments. Get started with TypeScript v2 functions by creating a new code repository with the TypeScript Functions v2 template. TypeScript v2 functions differ from TypeScript v1 functions in several ways, making them a powerful improvement to your platform workflows.

Choose the TypeScript Functions v2 template in Code Repositories.

Note that some features are not yet supported in TypeScript v2 functions. Review the feature support matrix to decide which template is best for you.

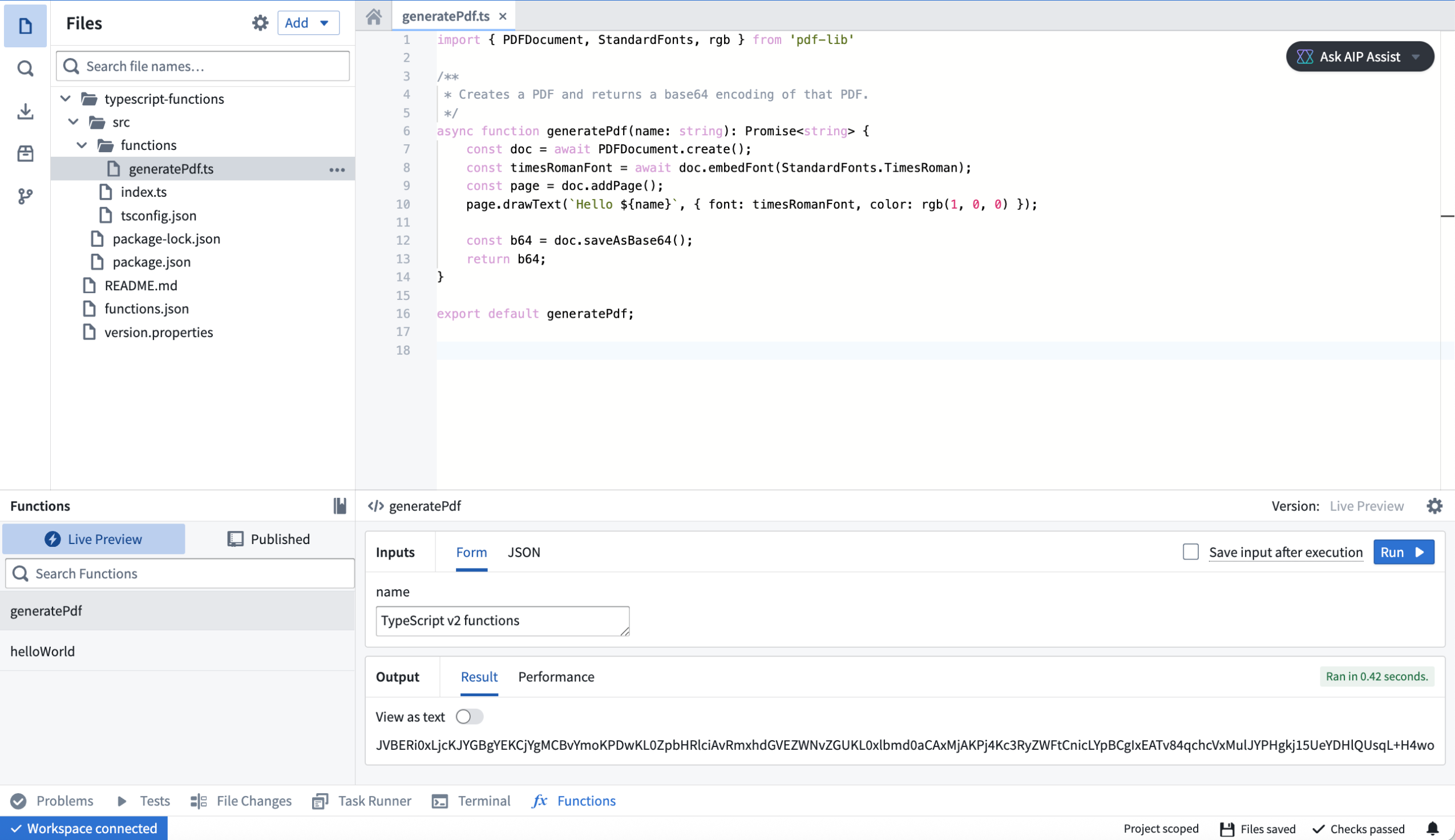

Full Node.js runtime

In TypeScript v2 functions, your code runs in a full Node.js runtime with support for core modules such as fs, child_process, and crypto. You can use any libraries that are compatible with a Node.js runtime. The Node.js runtime is a substantial improvement over the limited V8 runtime used for TypeScript v1 functions, which restricted functionality and available built-in APIs and resulted in compatibility issues with the open-source ecosystem.

The following example demonstrates using pdf-lib to generate a PDF:

An example of a pdf-lib TypeScript v2 function in a code repository.

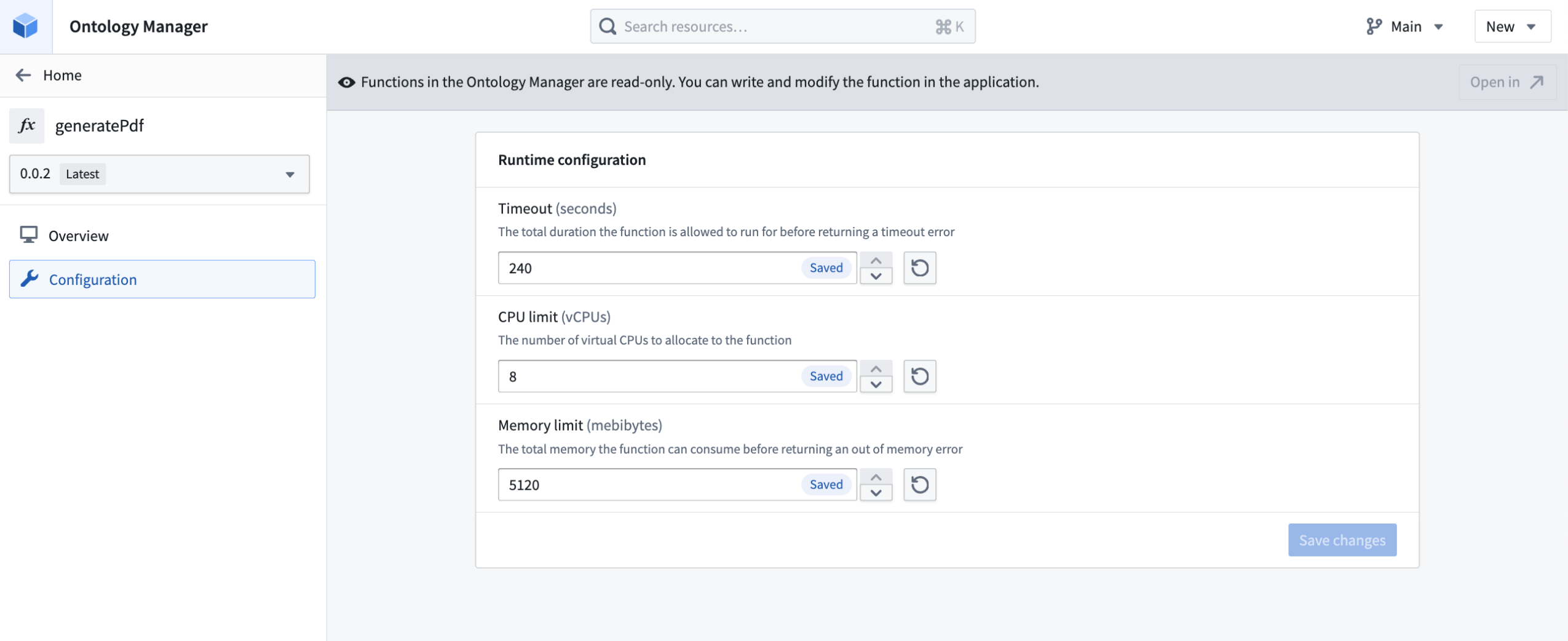

Configurable resources

In TypeScript v2, you can configure your function to have up to 5GB of memory and 8 CPUs, versus 128MB and a single CPU in TypeScript v1. This upgrade makes it possible to deal with large-scale data, such as images, and use parallelism to quickly perform CPU-intensive tasks.

Edit TypeScript v2 function runtime configuration in Ontology Manager.

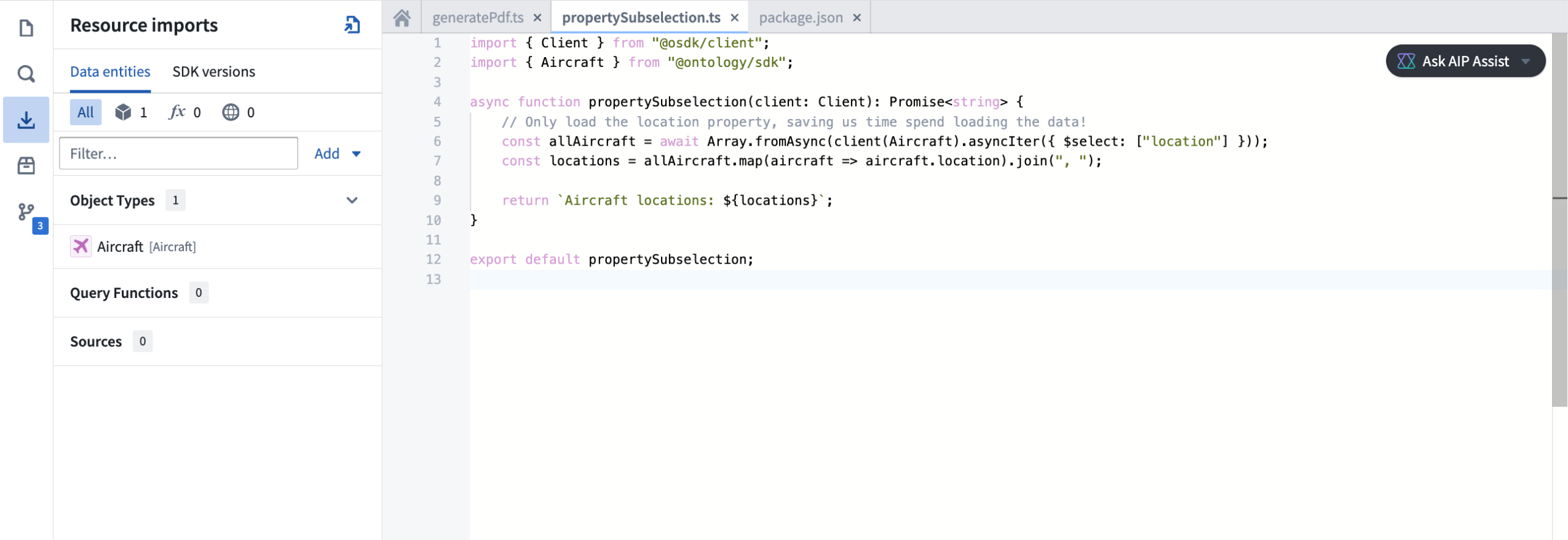

First-class support for OSDK

TypeScript v2 functions are designed around the Ontology SDK (OSDK). You no longer need to learn how to use different SDKs to write your functions. Additionally, powerful TypeScript OSDK tools like property sub-selection will allow you to only load the data you need at a given time.

Use TypeScript v2 functions with the Ontology SDK in your code repository.

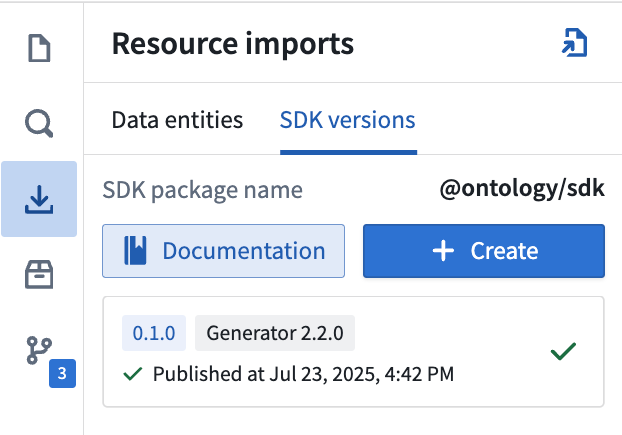

You can find documentation generated for your OSDK in the Resource imports section of the code repository sidebar, providing you with examples of how to write code using your specific ontology.

Review OSDK documentation for TypeScript v2 functions in your code repository sidebar.

Make API calls

With TypeScript v2 functions, you can make API calls more flexibly, especially when used alongside open-source SDKs that rely on a Node.js runtime. You can use the provided @palantir/functions-sources library to obtain pre-configured fetch functions or HTTP agents that ensure compatibility with the TypeScript v2 networking environment.

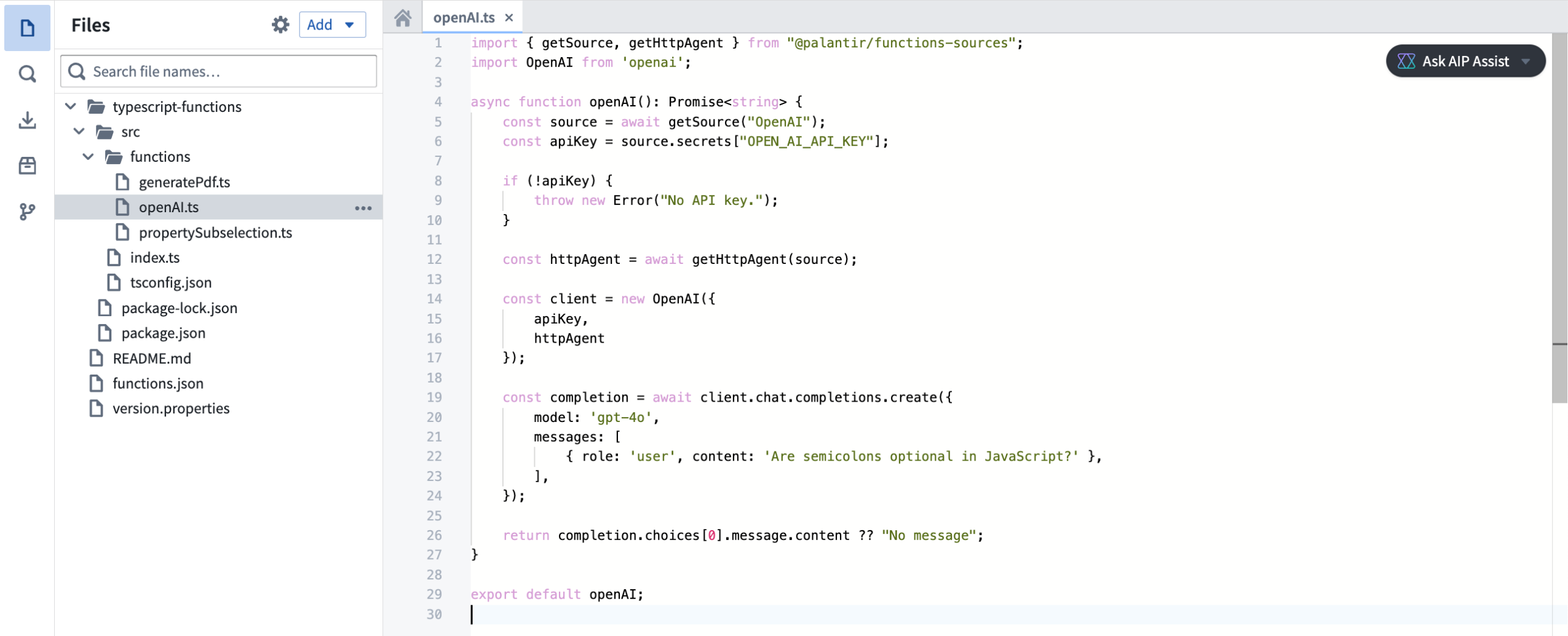

Make API calls from TypeScript v2 functions using the @palantir/functions-sources library.

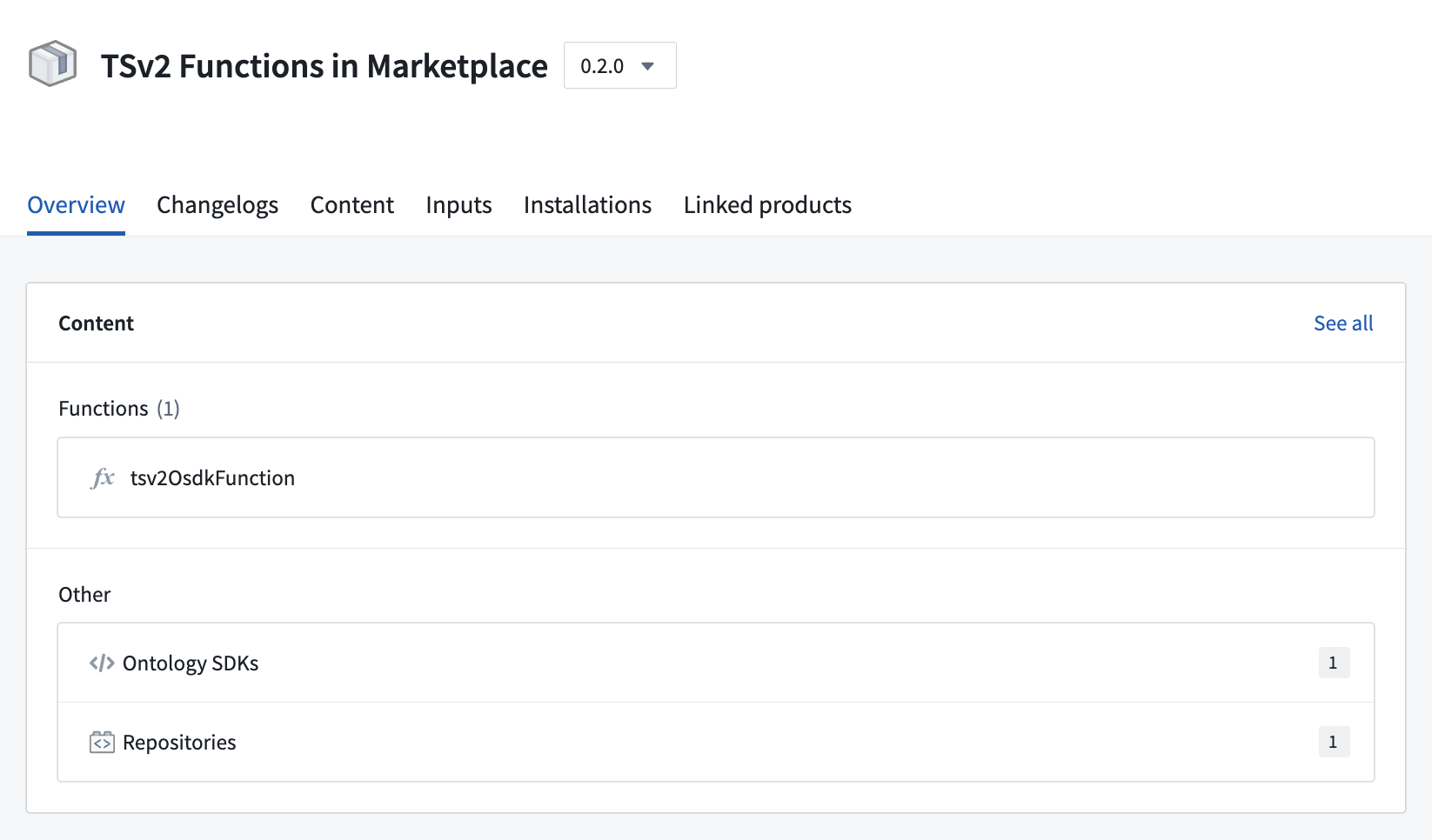

Deploy TypeScript v2 functions through Marketplace

TypeScript v2 functions can be deployed through Marketplace, enabling you to distribute products containing TypeScript v2 functions within your organization and the Foundry community. Marketplace integration includes support for any usage of the OSDK in your function.

An example of a TypeScript v2 function deployed through Marketplace.

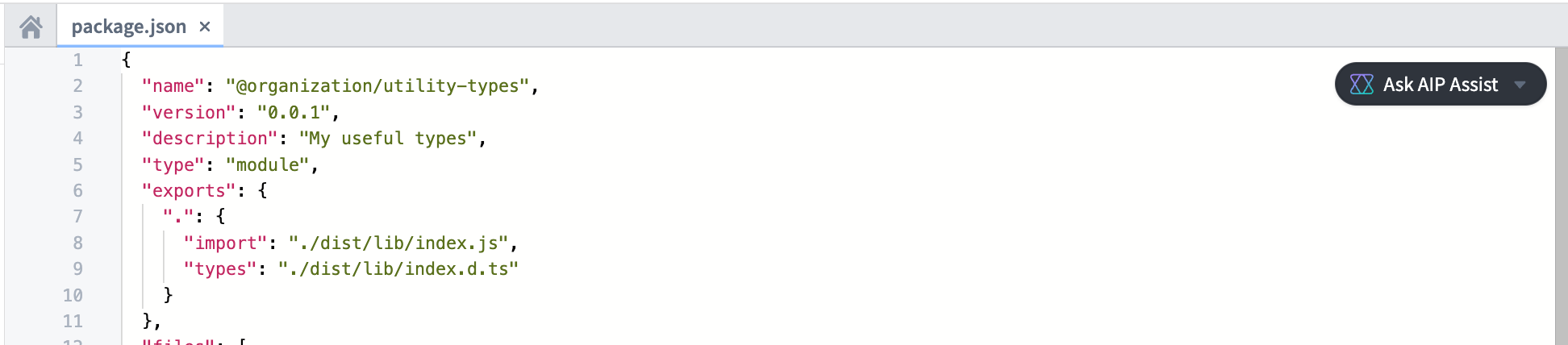

First-class support for NPM libraries

The TypeScript v2 functions template has built-in support for publishing NPM libraries. You can define any exports and the name of your package in package.json, then consume the published library in other TypeScript repositories.

Define NPM libraries in a TypeScript v2 repository.

Additional resources

Learn more about TypeScript v2 functions in our documentation, or refer to the README.md file contained within a TypeScript v2 repository for more information.

Code-defined input filtering in the Palantir extension for Visual Studio Code [Experimental]

Date published: 2025-07-24

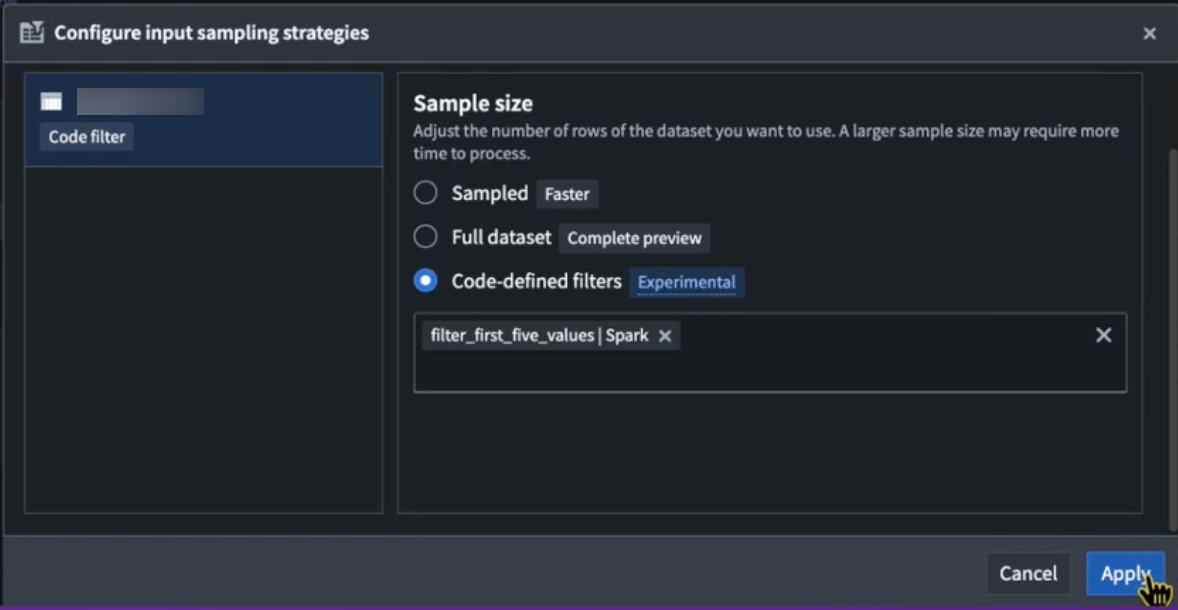

Code-defined input filtering [Experimental] is now available for Python transforms in the Palantir extension for Visual Studio Code. This feature allows users to implement filtering strategies for VS Code previews based on custom code, in contrast to the previous functionality that only supported full and sampled dataset input strategy configurations. When applicable, custom filtering strategies will leverage pushdown predicates ↗ to ensure that only the most relevant data samples are used in previews.

The Palantir extension for Visual Studio Code automatically discovers all eligible filters throughout your codebase and displays them in the selection dropdown when configuring input sample strategies, making it easy to find and apply the needed processing functions. Users can select eligible functions from the repository using the multi-select dropdown menu, and arrange them in order of preference. Filters will be applied sequentially in the order they appear in the selection field.
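To show why the order of selected filters matters, here is a minimal Python sketch of sequential filter application. It uses plain lists of dictionaries and hypothetical filter names (`keep_recent`, `keep_first_two`); real code-defined filters operate on transform inputs and are discovered by the extension, so treat this purely as an illustration of the ordering behavior.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]
PreviewFilter = Callable[[List[Row]], List[Row]]


def keep_recent(rows: List[Row]) -> List[Row]:
    # Hypothetical filter: keep rows from 2024 onward.
    return [row for row in rows if row["year"] >= 2024]


def keep_first_two(rows: List[Row]) -> List[Row]:
    # Hypothetical filter: cap the sample at two rows.
    return rows[:2]


def apply_in_order(rows: List[Row], filters: List[PreviewFilter]) -> List[Row]:
    """Apply each filter to the output of the previous one, in list order."""
    for preview_filter in filters:
        rows = preview_filter(rows)
    return rows


sample = [
    {"id": 1, "year": 2023},
    {"id": 2, "year": 2024},
    {"id": 3, "year": 2025},
    {"id": 4, "year": 2025},
]
recent_then_cap = apply_in_order(sample, [keep_recent, keep_first_two])
cap_then_recent = apply_in_order(sample, [keep_first_two, keep_recent])
```

The two orderings produce different samples: filtering before capping keeps two recent rows, while capping first leaves only one row that passes the recency filter.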

The Code-defined filters option in the Configure input sampling strategies dialog.

Any added input filtering configuration will be preserved across preview sessions. These configurations will be reset if the workspace is restarted, or if the .maestro/ configuration folder is deleted locally in VS Code.

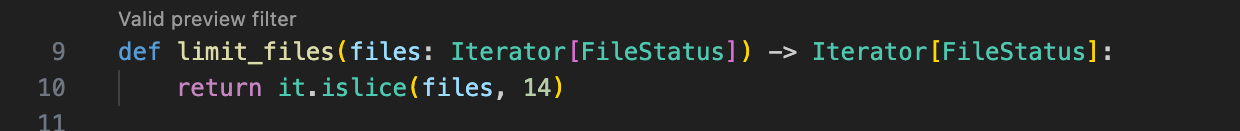

Eligible preview filters will display a codelens hint stating that they are a valid preview filter.

Code-defined input filtering allows users greater flexibility and precision when shaping their data previews, enabling more targeted analyses in Python workflows. Note that structured inputs are supported in Spark and lightweight transforms, while unstructured inputs (raw files) are only supported for Spark.

Learn more about transform previews and code-defined input filtering.

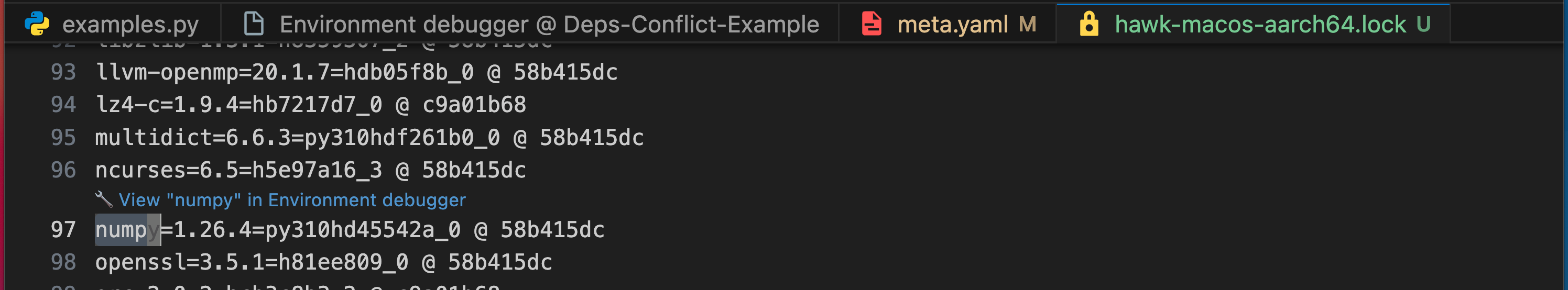

Enhanced environment conflict visualization in Python repositories

Date published: 2025-07-24

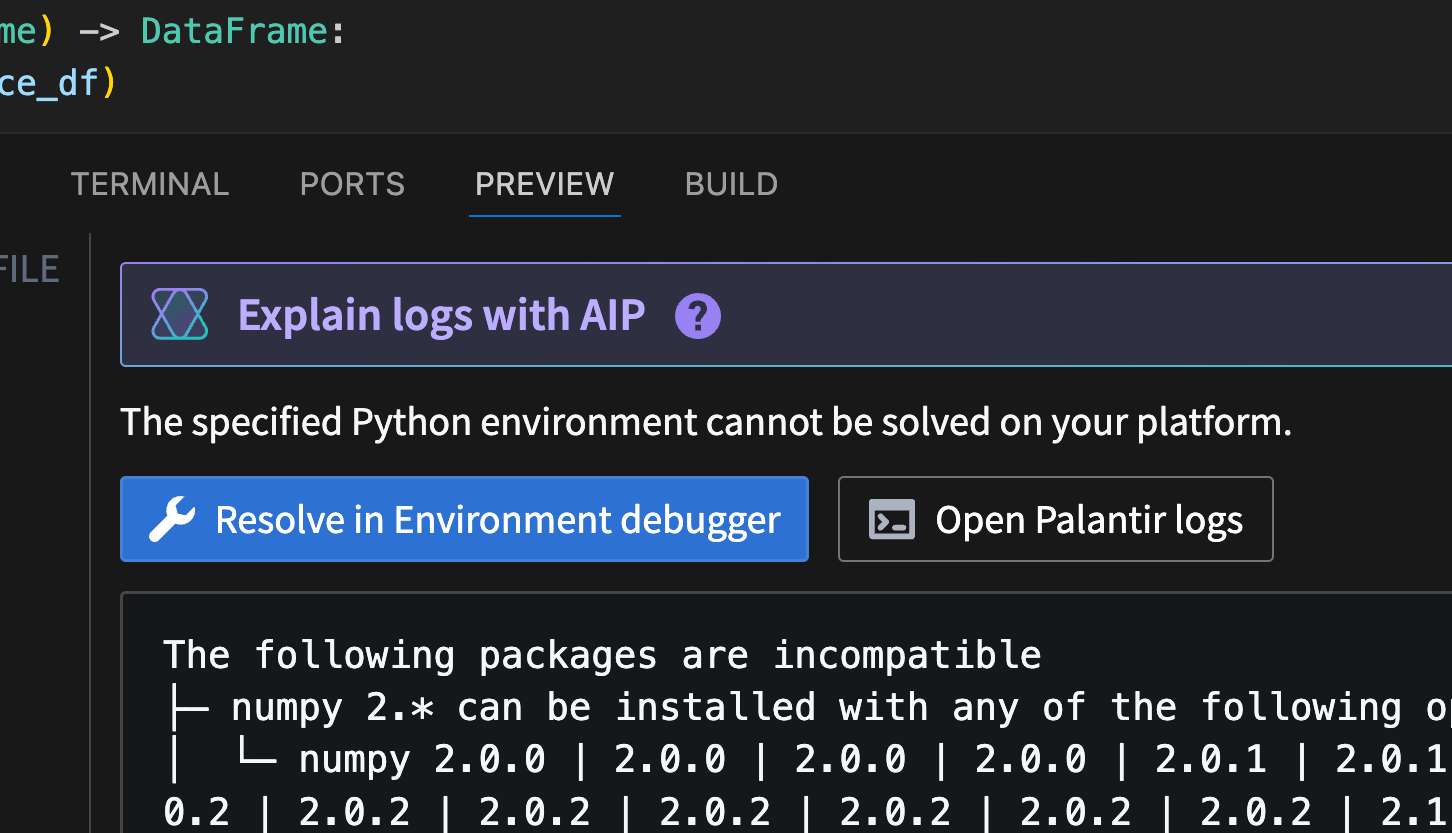

The Palantir extension for Visual Studio Code now supports environment conflict visualization in Python repositories, featuring an intuitive and user-friendly interface. When a conflict occurs, users can now select the Resolve in environment debugger option to open an interactive view of environment conflicts.

The Resolve in environment debugger option, displayed above an environment conflict error message.

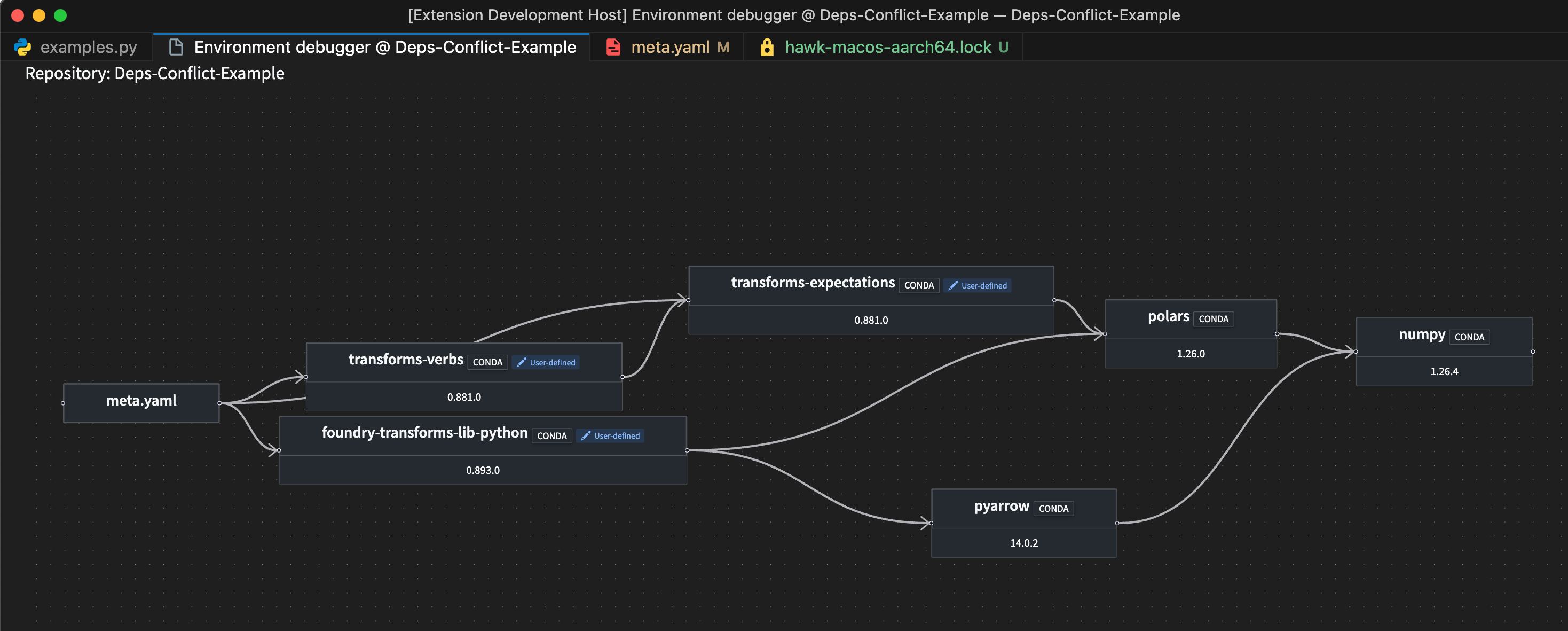

This feature eliminates the need to interpret complex text outputs, and allows users to quickly identify, analyze, and resolve dependency issues with clear insights.

A sample environment debugger graph.

In addition to aiding conflict resolution, this feature also enables comprehensive environment exploration. Every package listed in your lockfile now includes a View package in environment debugger codelens option, allowing you to trace every dependency in your environment and identify its source.

The codelens hint above a highlighted package, providing the option to view the package in the environment debugger.

Leverage this new feature to enhance productivity, reduce time spent troubleshooting, and maintain stable and reliable Python environments.

Learn more about the environment debugger in the Palantir extension for Visual Studio Code.

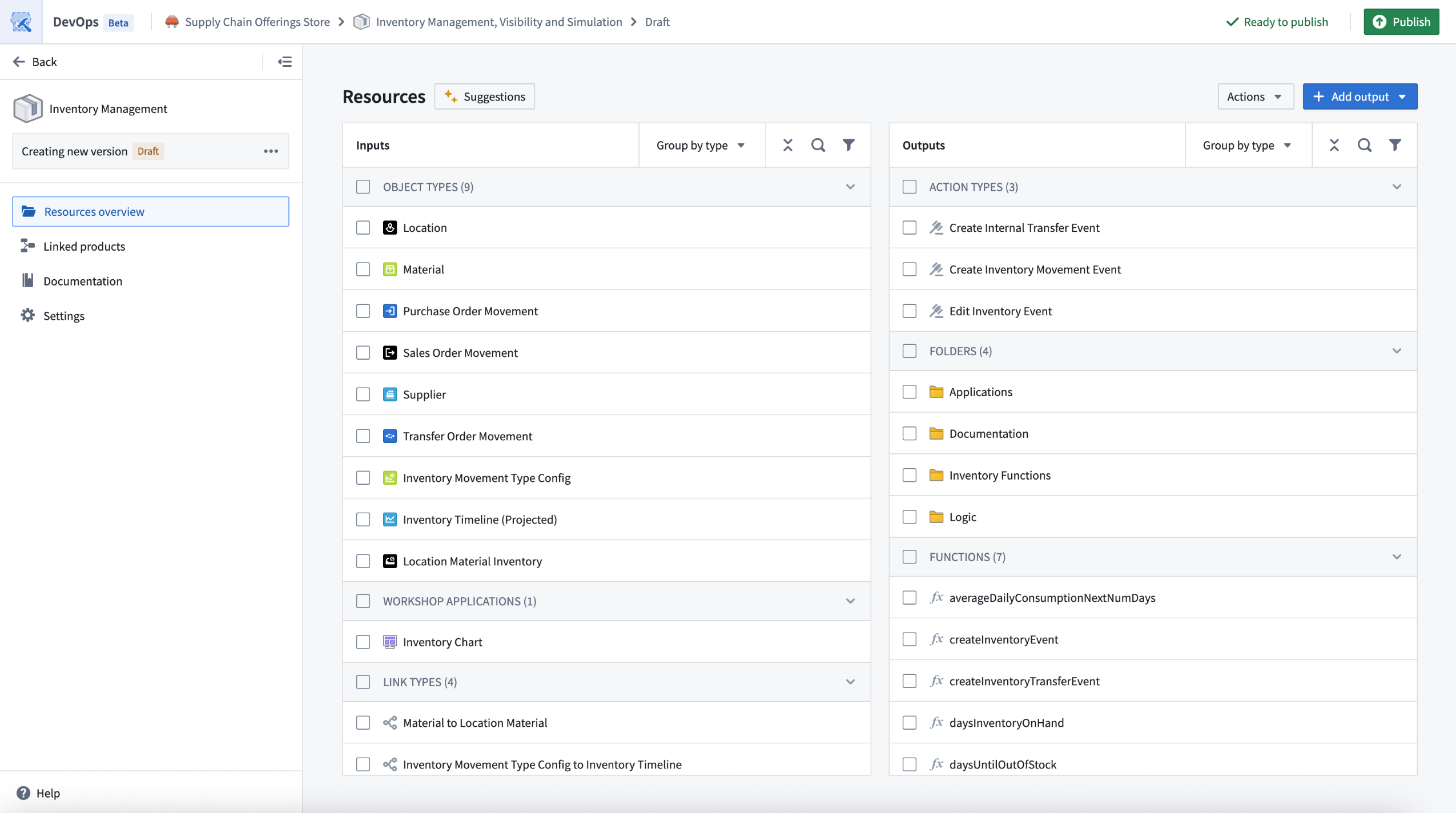

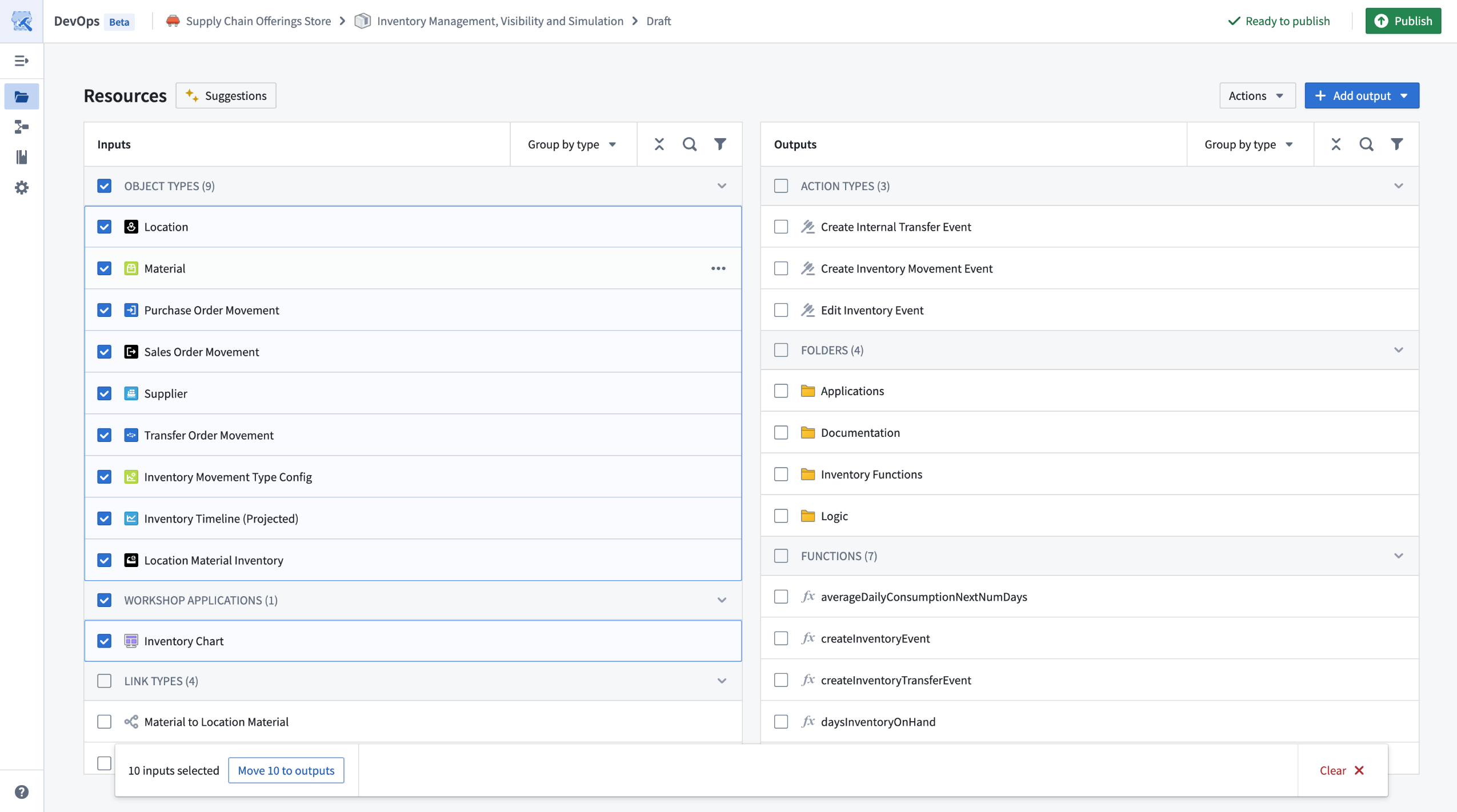

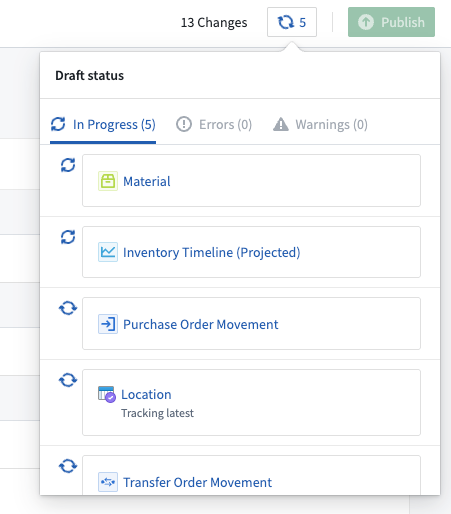

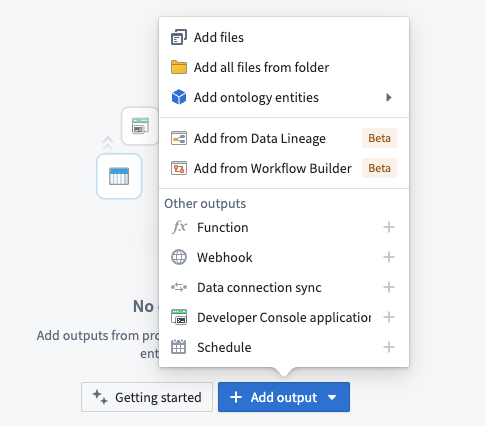

Experience faster DevOps packaging with streamlined bulk operations and an intuitive workflow

Date published: 2025-07-24

The new DevOps packaging experience for creating product drafts is now available on all Foundry enrollments as of the week of July 21. This major update introduces a fully redesigned packaging experience, built for speed, bulk operations, and an intuitive workflow.

If you have an existing draft, note that it will not be compatible with the new packaging workflow and must be recreated after the release. During the rollout week, you can switch to the previous platform version to continue working on existing drafts.

The new landing page for drafts in DevOps offers a consolidated view of inputs and outputs, along with bulk edit options.

Key highlights of this new DevOps packaging experience include:

- Streamlined drafts: Enjoy a consolidated view of all your inputs and outputs, enabling powerful bulk actions and faster iteration.

- Faster iteration: Edits can be stacked and applied without waiting for previous changes to complete, and backend operations are significantly faster.

- Bulk edit operations: Move multiple resources to outputs, refresh groups of resources, and manage errors directly in the draft view.

- Expanded support for selecting outputs: Add all resources from the Data Lineage and Workflow Builder applications.

Bulk operations, such as moving multiple types of inputs to outputs, are now available with multi-select edits.

Multiple edits can be stacked and applied without waiting for previous changes to complete.

Outputs can now be added in bulk from an existing Data Lineage or Workflow Builder graph.

Review DevOps documentation on creating a product.

We want to hear from you

As we continue to improve DevOps, we want to hear about your experiences and welcome your feedback. Share your thoughts with Palantir Support channels or our Developer Community ↗.

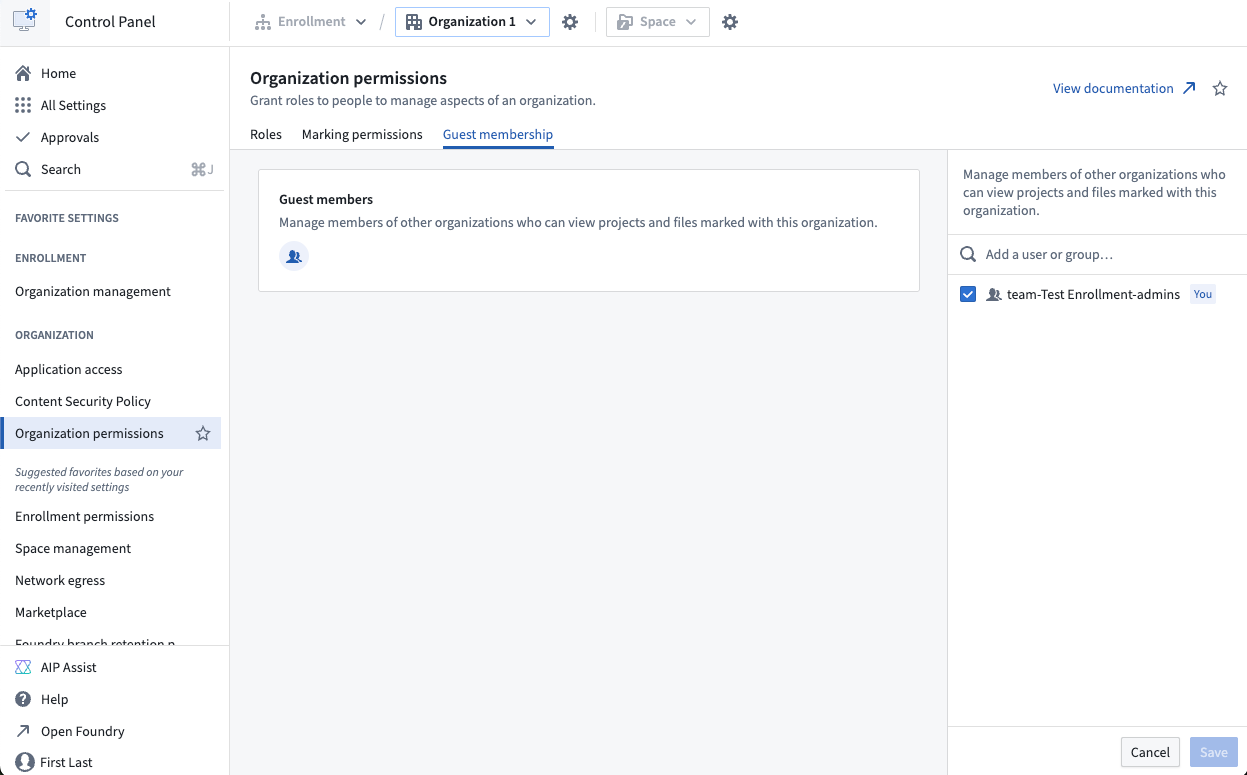

Organization guest membership now managed in Control Panel

Date published: 2025-07-22

The interface for viewing and managing guests for your organization has moved from the Platform Settings page to Control Panel. You can now manage guests for your organization in the new Guest membership tab on the Organization permissions page.

The new Guest membership tab on the Organization permissions page in Control Panel.

Previously, all members of an organization could view its guests. Now, viewing guest members requires the Organization settings viewer role, while managing guests requires the Organization administrator role.

You can review information on guest membership and managing organization access on the Manage Access documentation page.

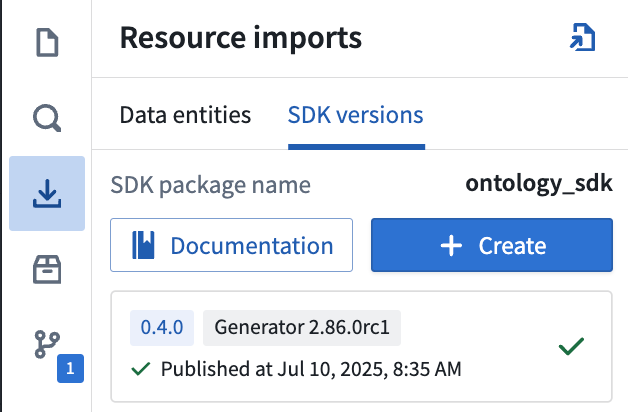

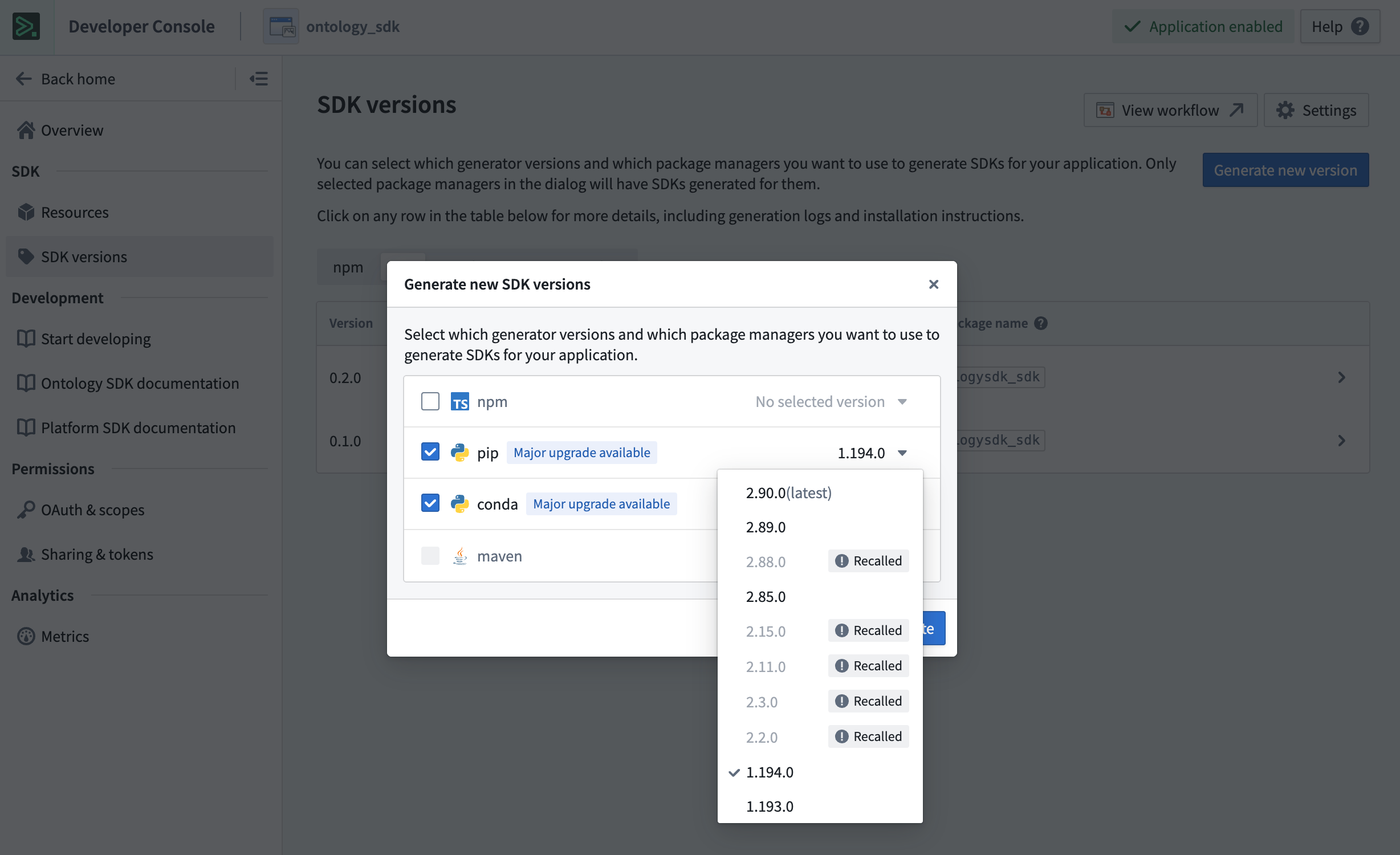

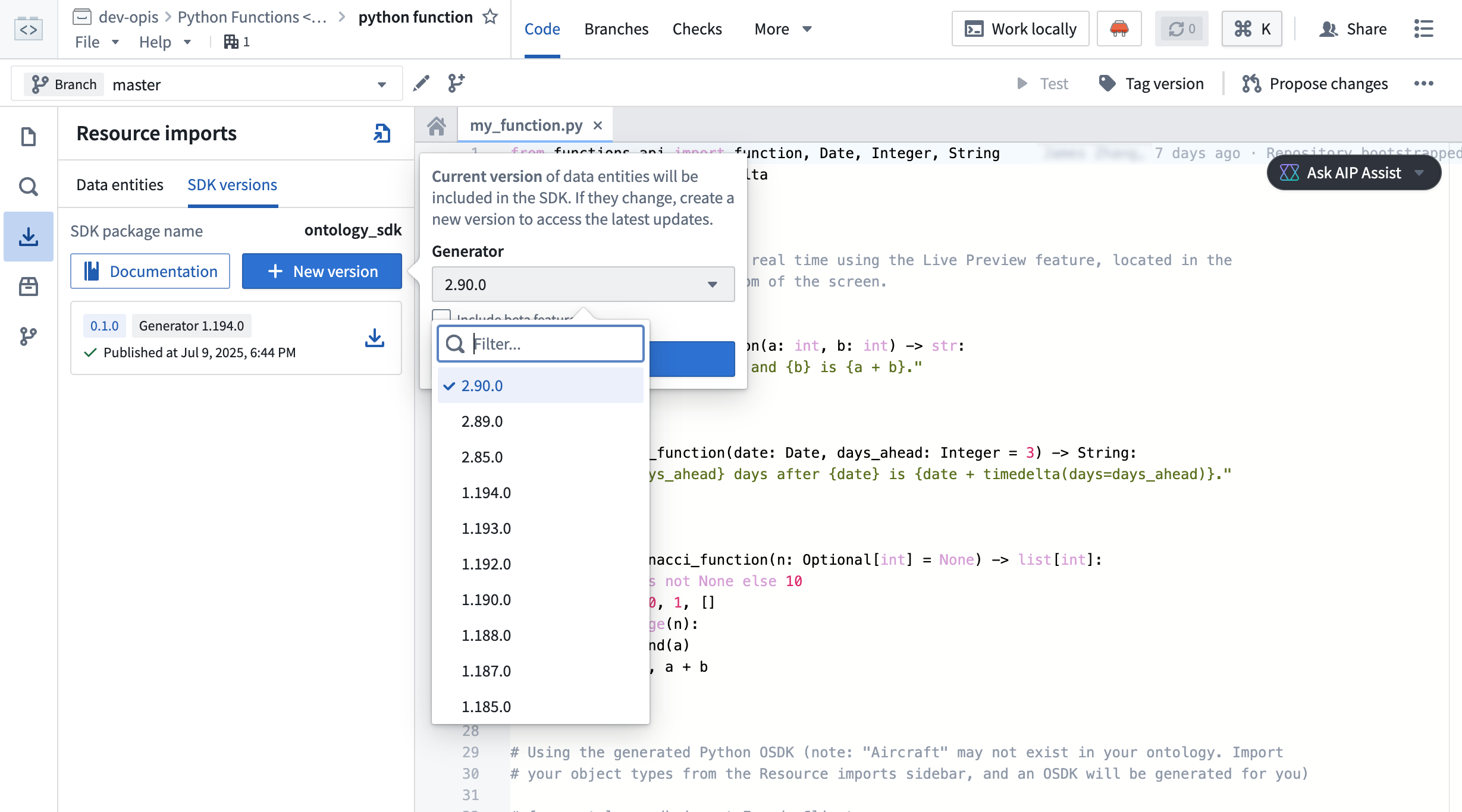

Python Ontology SDK 2.x now generally available

Date published: 2025-07-22

The Python Ontology SDK (OSDK) 2.x is now generally available as of the week of July 14. This update offers improved syntax, return types, and features to improve performance and enable more complex applications. By default, any new Python applications created in Developer Console or Python functions will now use the latest 2.x generator.

Migrate existing applications to Python OSDK 2.x

The Python OSDK 2.x migration guide explains version differences, highlights relevant syntax and structure changes, and provides code examples to help you update applications built using legacy versions.

Migrating legacy applications to use version 2.x syntax is not currently mandatory, but existing and upcoming beta features, such as derived properties and media sets, will only be available on Python OSDK 2.x releases. To generate Python OSDK 2.x for an existing application in Developer Console, select the SDK versions menu in the left side panel of your application.

Generate Python OSDK 2.x for an existing legacy version application in Developer Console.

To generate Python OSDK 2.x for an existing code repository in Python functions, select the SDK versions menu in the left side panel of your code repository.

Generate Python OSDK 2.x for an existing legacy version code repository in Python functions.

Palantir will maintain support for legacy Python applications for at least one year from the release of version 2.x.

Your feedback matters

As we continue to develop the OSDK, we want to hear about your experiences and welcome your feedback. Share your thoughts with Palantir Support channels, or our Developer Community ↗ using the ontology-sdk tag ↗.

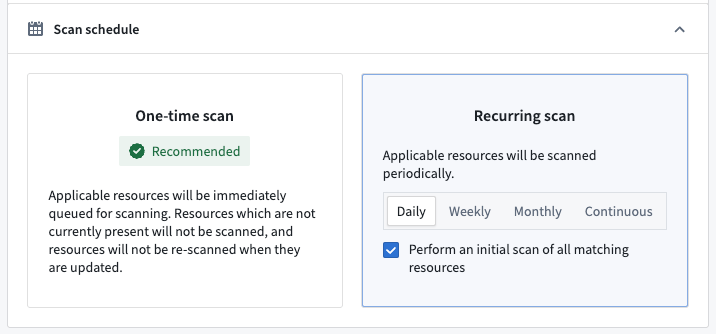

Configure sensitive data scans on a schedule

Date published: 2025-07-22

As of the week of July 21, Sensitive Data Scanner now allows you to schedule scans across all enrollments, giving you greater flexibility and control over your data governance workflows. Previously, scans could only be run as one-time scans or as recurring scans. Recurring scans would trigger each time an applicable resource was updated with new data, but this could be compute-intensive for teams working with high transaction volume resources or utilizing large and complex scans. Now, you can schedule scans to run daily, weekly, or monthly, helping reduce costs and better align your data governance workflows to your organization's needs.

The previously supported recurring scan type has been renamed to "continuous" recurring scans and remains available for use.

Scan schedule configuration panel, showing options for one-time and recurring scans, including new daily, weekly, and monthly scheduling choices.

What’s next

Scheduled scans are particularly helpful for running compute-intensive scans in an automated manner. In particular, this will offer additional flexibility for organizations configuring automated sensitive data scans on large document, image, and audio files through media set scanning [Beta]. We are looking forward to making media set scanning generally available in the coming weeks.

We want to hear from you!

We are continuing to improve Sensitive Data Scanner based on your feedback! Share your experiences via Palantir Support or our Developer Community and use the sensitive-data-scanner tag.

Palantir MCP enables AI IDEs and agents to design, build, edit, and review in the Palantir platform [Beta]

Date published: 2025-07-17

Palantir Model Context Protocol (MCP) is now available in beta across all enrollments as of the week of July 14. Palantir MCP enables AI IDEs and AI agents to autonomously design, build, edit, and review end-to-end applications within the Palantir platform. An implementation of Model Context Protocol ↗, Palantir MCP supports everything from data integration to ontology configuration and application development, all performed within the platform.

Key capabilities of Palantir MCP

- Vibe code production applications: Enables developers to use AI to produce production-grade applications on top of the ontology while following Palantir's security best practices.

- Data integration: Powers Python transforms generation by enabling AI IDEs to get context from Compass and dataset schemas, and to execute SQL commands entirely locally.

- Ontology configuration: Allows developers to configure their ontology locally without leaving the IDE.

- Application development: Integrates with your OSDK to enable the development of TypeScript applications on top of your ontology.

Start using Palantir MCP

To get started, follow the installation steps and read the user guide for examples and best practices. We strongly encourage all local developers to install and regularly update the Palantir MCP to take advantage of the latest changes and tool releases.

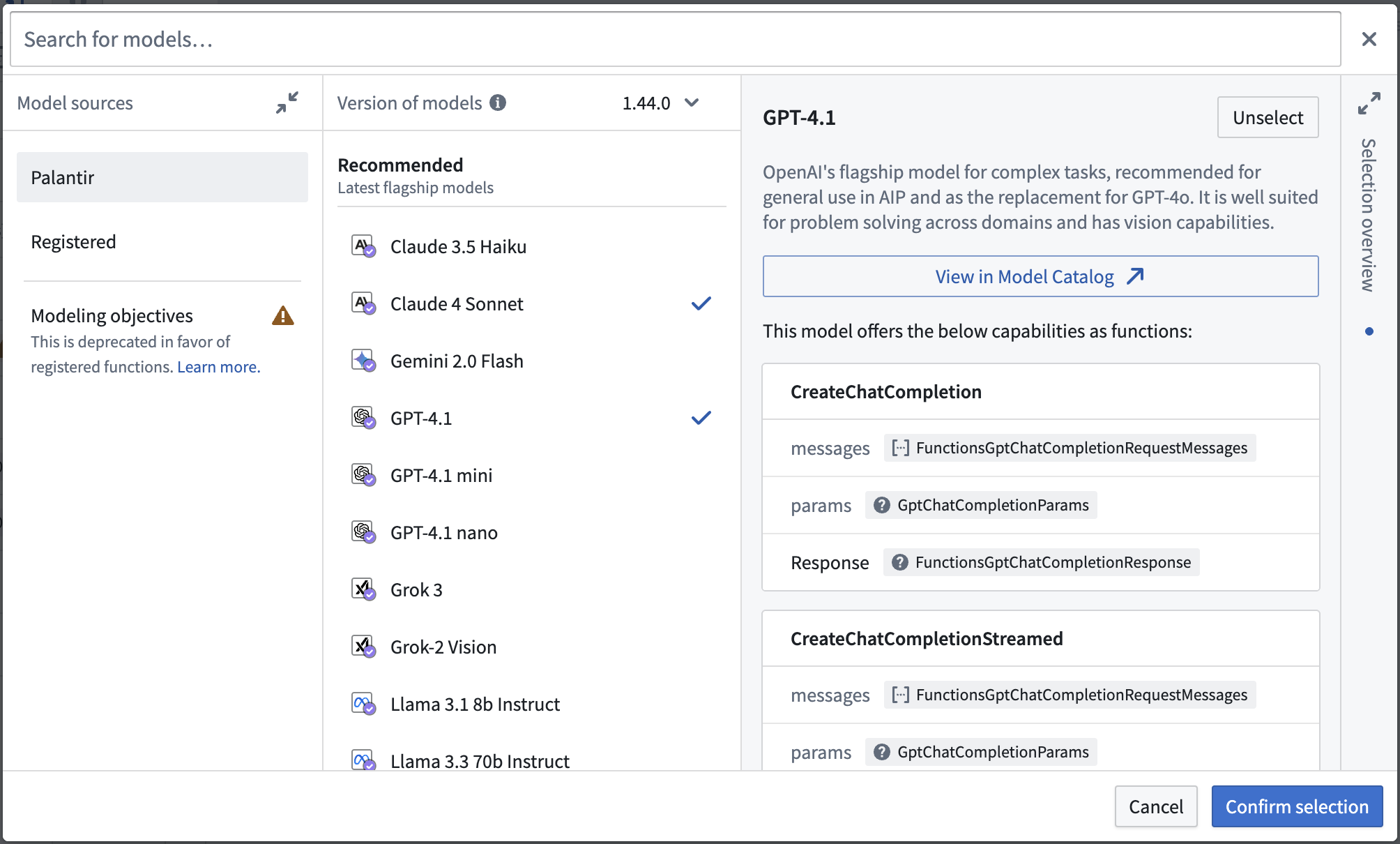

Updated language models now available in TypeScript functions repositories

Date published: 2025-07-17

Updated language models are now available in TypeScript functions repositories. These updates provide better consistency between model APIs, making it easier to interchange underlying models. Model capabilities have also been enhanced, with improved support for vision and streaming.

We highly recommend updating your functions repositories with the new models to ensure you stay up to date with the latest AIP features. Review the updated documentation for language models in functions to learn how to update your repository.

Viewing model capabilities when importing updated language models.

Share your feedback

Share your feedback about functions by contacting our Palantir Support teams, or let us know in our Developer Community ↗ using the functions tag ↗.

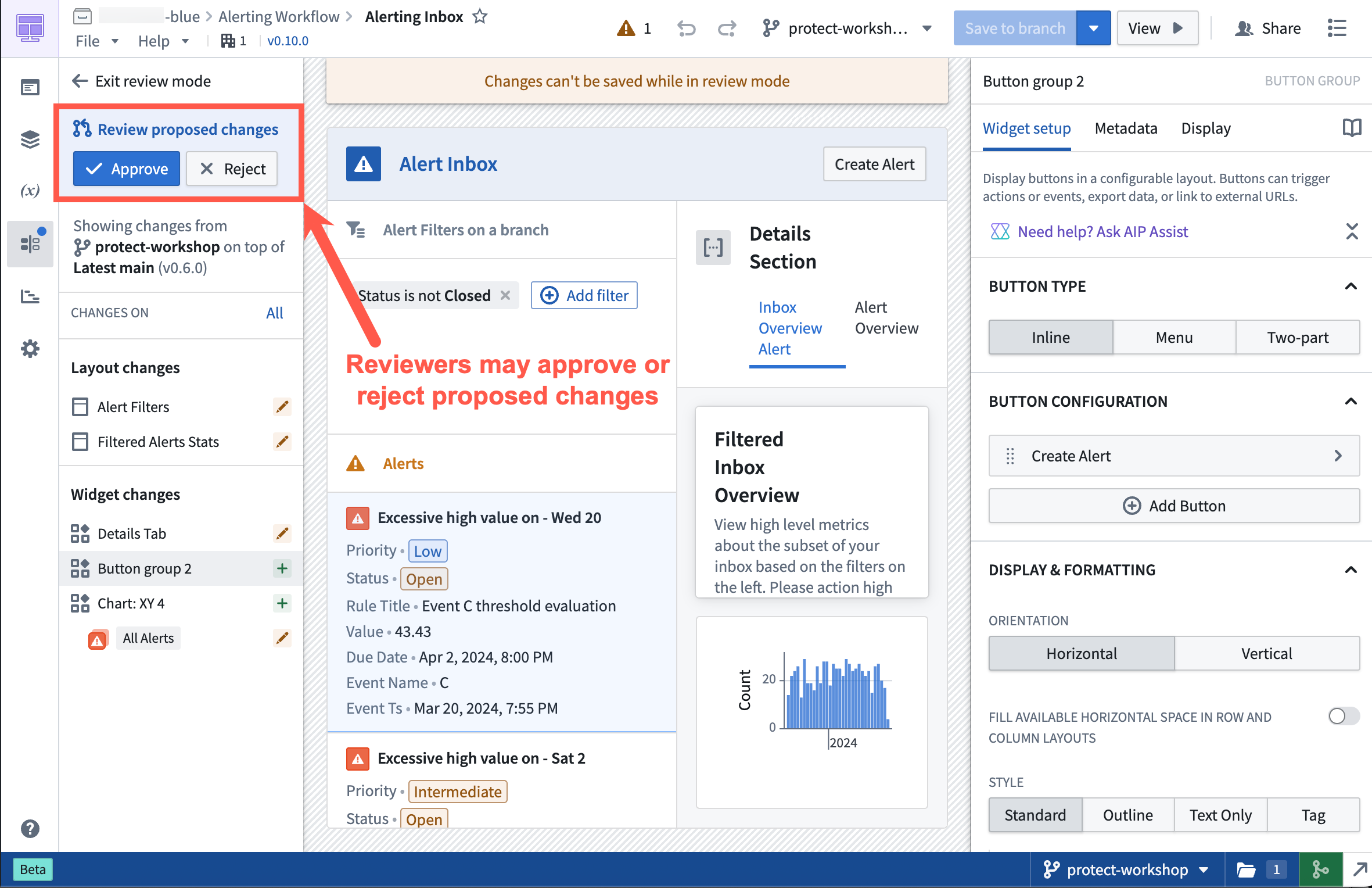

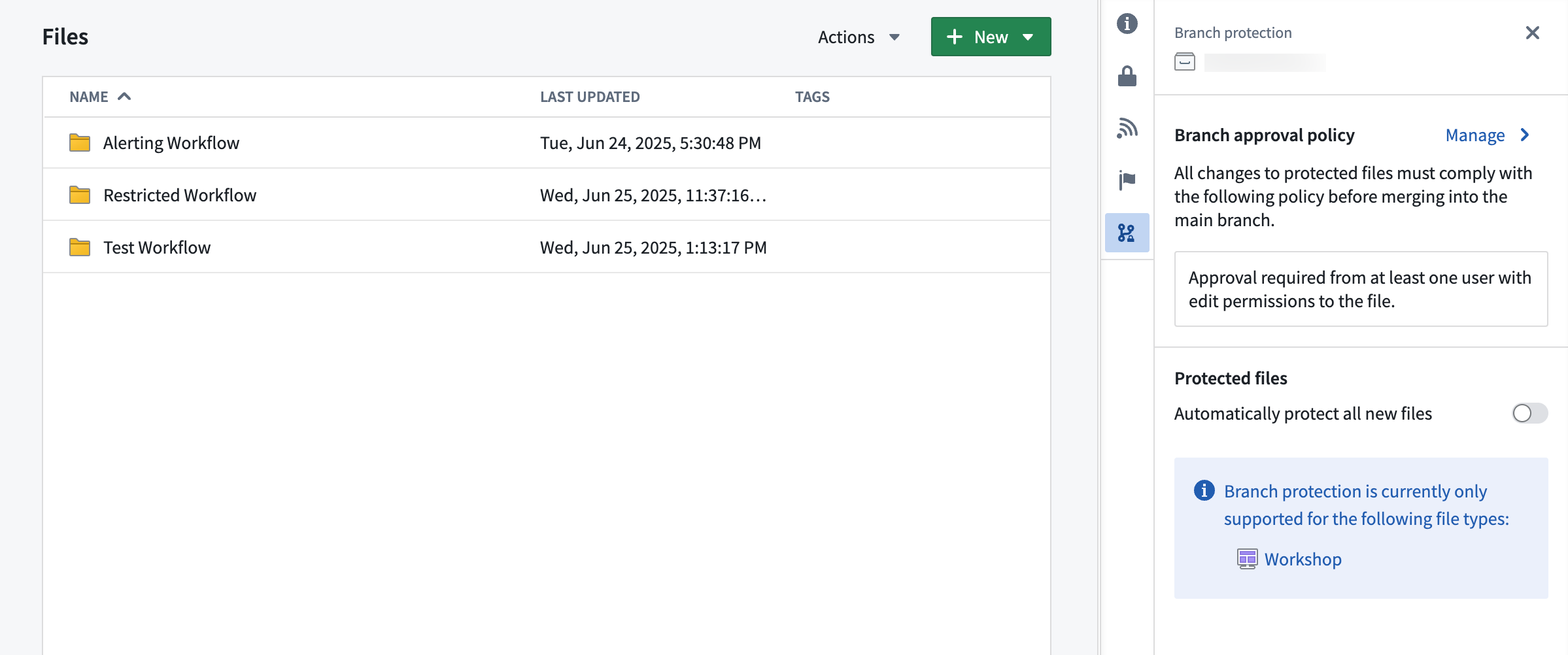

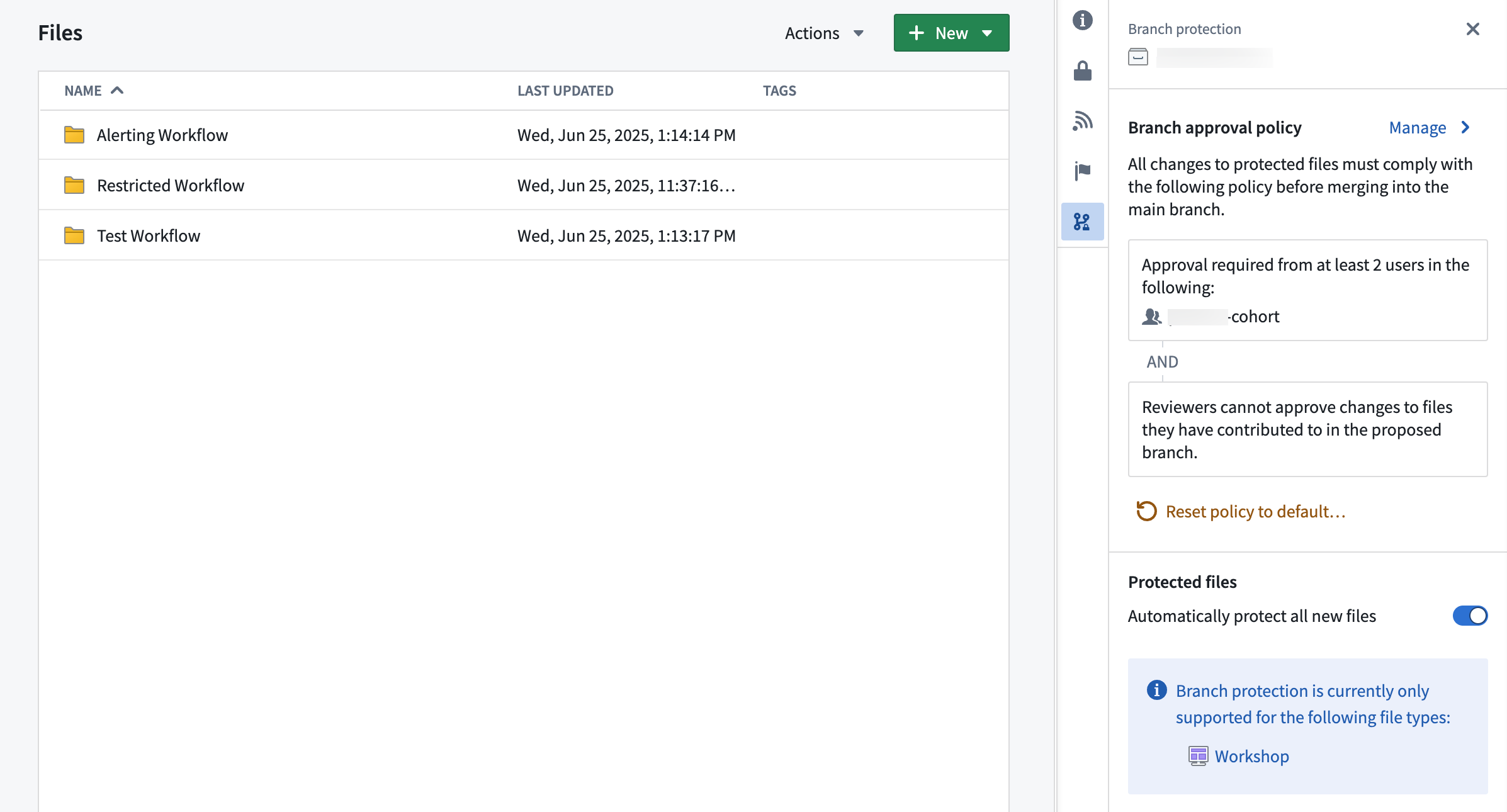

Protect the main branch of your resources and define approval policies for your projects

Date published: 2025-07-09

You can now protect the main branch of your Workshop modules and define custom approval policies. While this only applies to Workshop for now, all types of resources will eventually be supported, with support for ontology and Pipeline Builder resources coming next.

To safeguard critical workflows and maintain development best practices, you can protect the main branch of your resources. This means that any change to a protected resource must be made on a branch and will require approval to take effect.

Approval flow in a protected Workshop application.

Changes to a protected resource are merged according to an approval policy. The policy is set at the project level and defines whose approval is required to merge changes to protected resources.

Project with default approval policy.

Project with custom approval policy.

Approval policies have three customizable parameters:

- Eligible reviewers: Define the users or groups that are allowed to review and approve changes to the main branch of a protected resource.

- Number of approvals required: Define the minimum number of approvals needed to enable merging of a change. Options include any eligible reviewer, all eligible reviewers, or custom (specify the number of eligible reviewers).

- Additional requirements: Control whether reviewers can approve changes to files they have contributed to in the proposed branch. A contributor is defined as any user who has made a change to that resource on the branch.
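The three parameters above can be read as inputs to a single merge check. The following Python sketch is one possible interpretation, not the platform's implementation: `ApprovalPolicy`, `can_merge`, and the reviewer names are hypothetical, and the handling of contributor approvals (they are discarded when contributor approval is disallowed) is an assumption.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class ApprovalPolicy:
    # Hypothetical model of a project-level approval policy.
    eligible_reviewers: Set[str]
    # 1 models "any eligible reviewer"; None models "all eligible reviewers".
    required_approvals: Optional[int]
    allow_contributor_approval: bool = False


def can_merge(policy: ApprovalPolicy, approvals: Set[str], contributors: Set[str]) -> bool:
    """Return True when enough valid approvals exist to merge into main."""
    # Only approvals from eligible reviewers count.
    valid = approvals & policy.eligible_reviewers
    # Assumption: approvals from branch contributors are ignored unless allowed.
    if not policy.allow_contributor_approval:
        valid = valid - contributors
    if policy.required_approvals is None:
        needed = len(policy.eligible_reviewers)
    else:
        needed = policy.required_approvals
    return len(valid) >= needed


policy = ApprovalPolicy(eligible_reviewers={"ana", "ben", "cy"}, required_approvals=2)
blocked = can_merge(policy, approvals={"ana", "ben"}, contributors={"ben"})
allowed = can_merge(policy, approvals={"ana", "cy"}, contributors={"ben"})
```

In this sketch, `blocked` is false because one of the two approvals comes from a contributor, while `allowed` is true because both approvals come from eligible reviewers who did not contribute to the branch.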

Note that branch protection currently only applies to Workshop resources, but support for protecting ontology resources is coming soon.

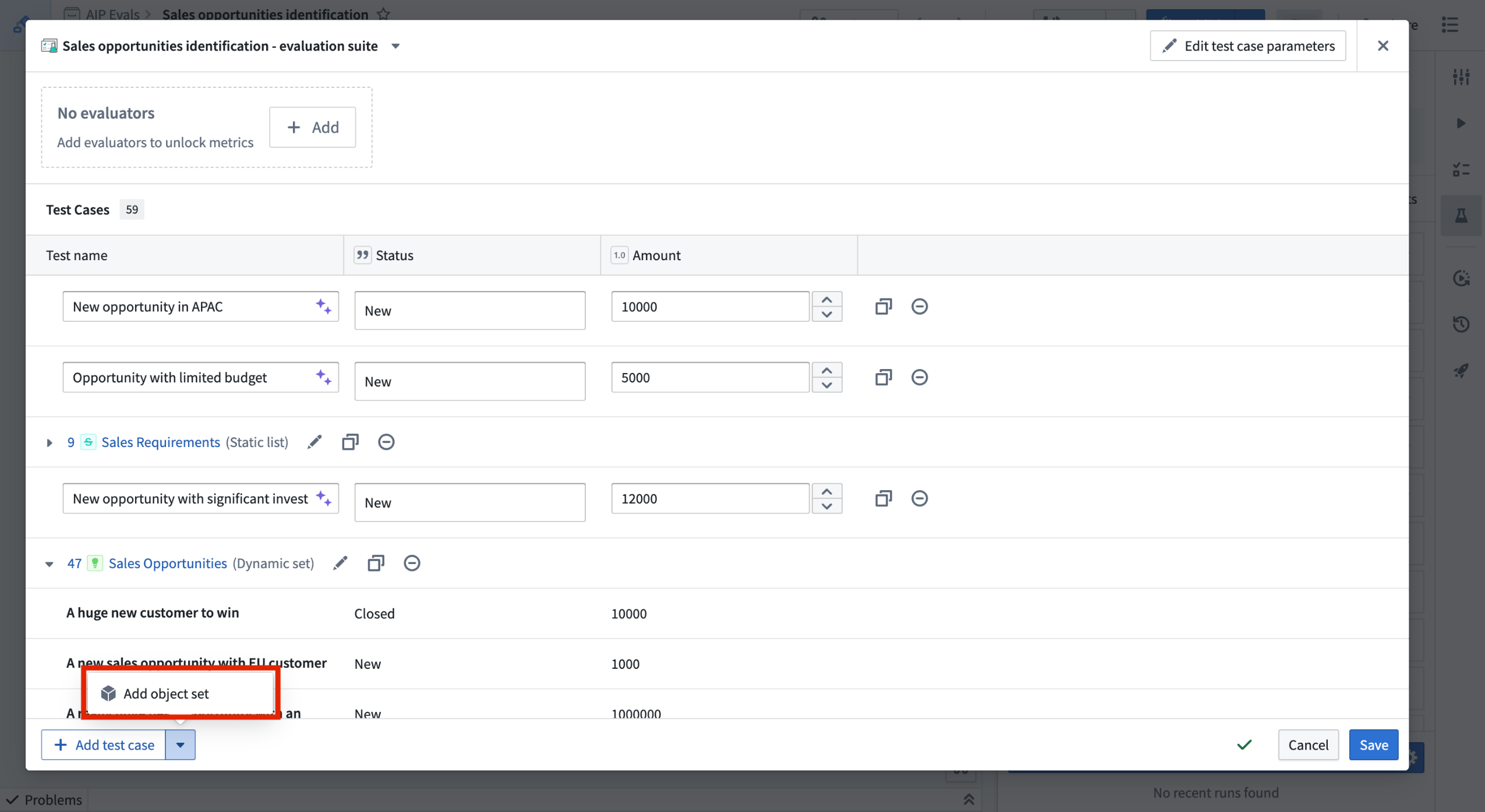

Combine multiple object sets and manual test cases in AIP evaluation suites

Date published: 2025-07-08

AIP Evals now supports combining multiple object sets and manual test cases within a single evaluation suite. The test case creation experience has been simplified, allowing you to add, delete, and duplicate object sets as needed. This flexibility enables you to leverage object sets while also adding specific manual test cases for comprehensive function testing.

You can now combine multiple object sets and manual test cases in a single evaluation suite.

Learn more about adding test cases in AIP Evals.

We want to hear from you

As we continue to build upon AIP Evals, we want to hear about your experiences and welcome your feedback. Share your thoughts with Palantir Support channels or our Developer Community ↗.

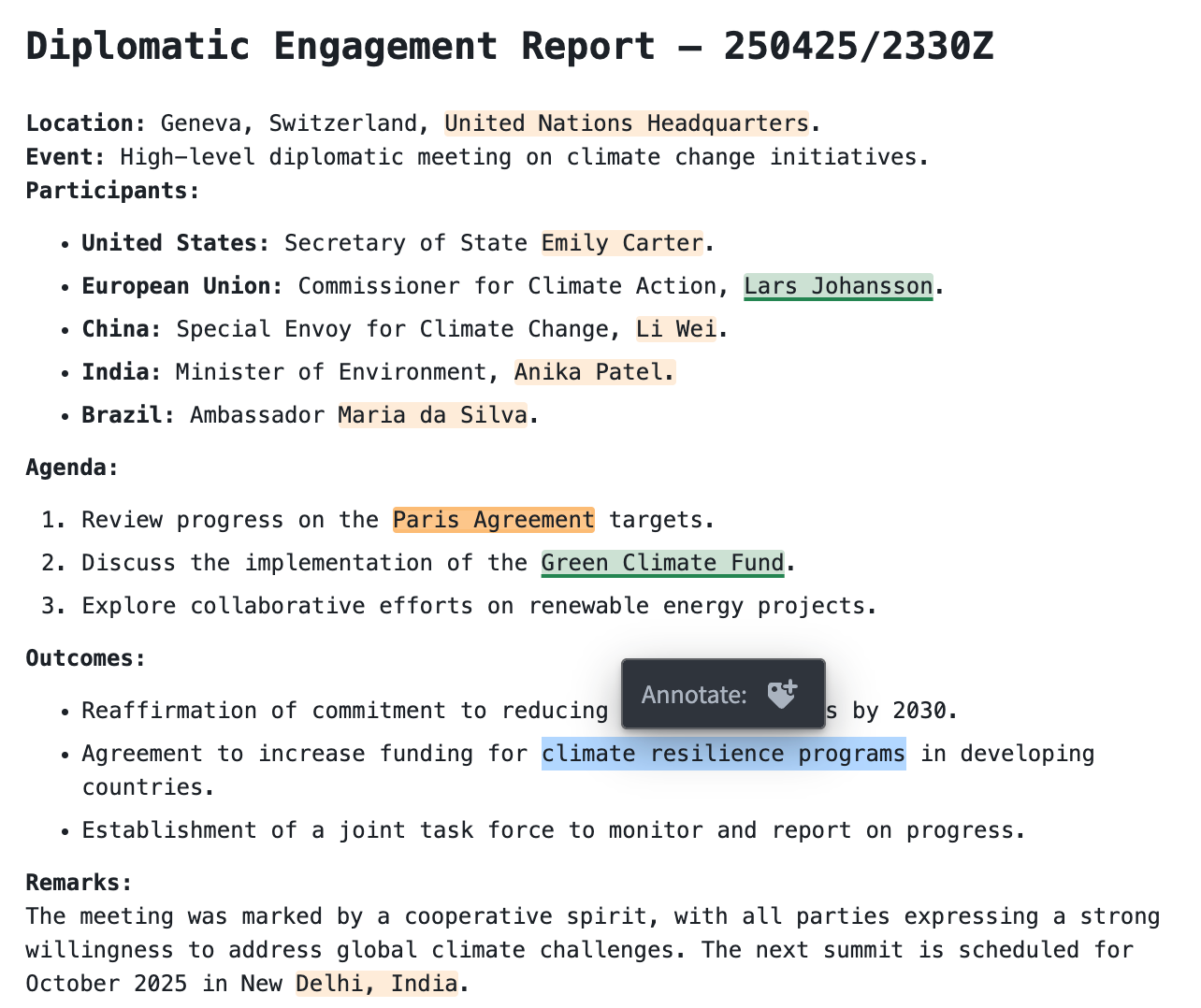

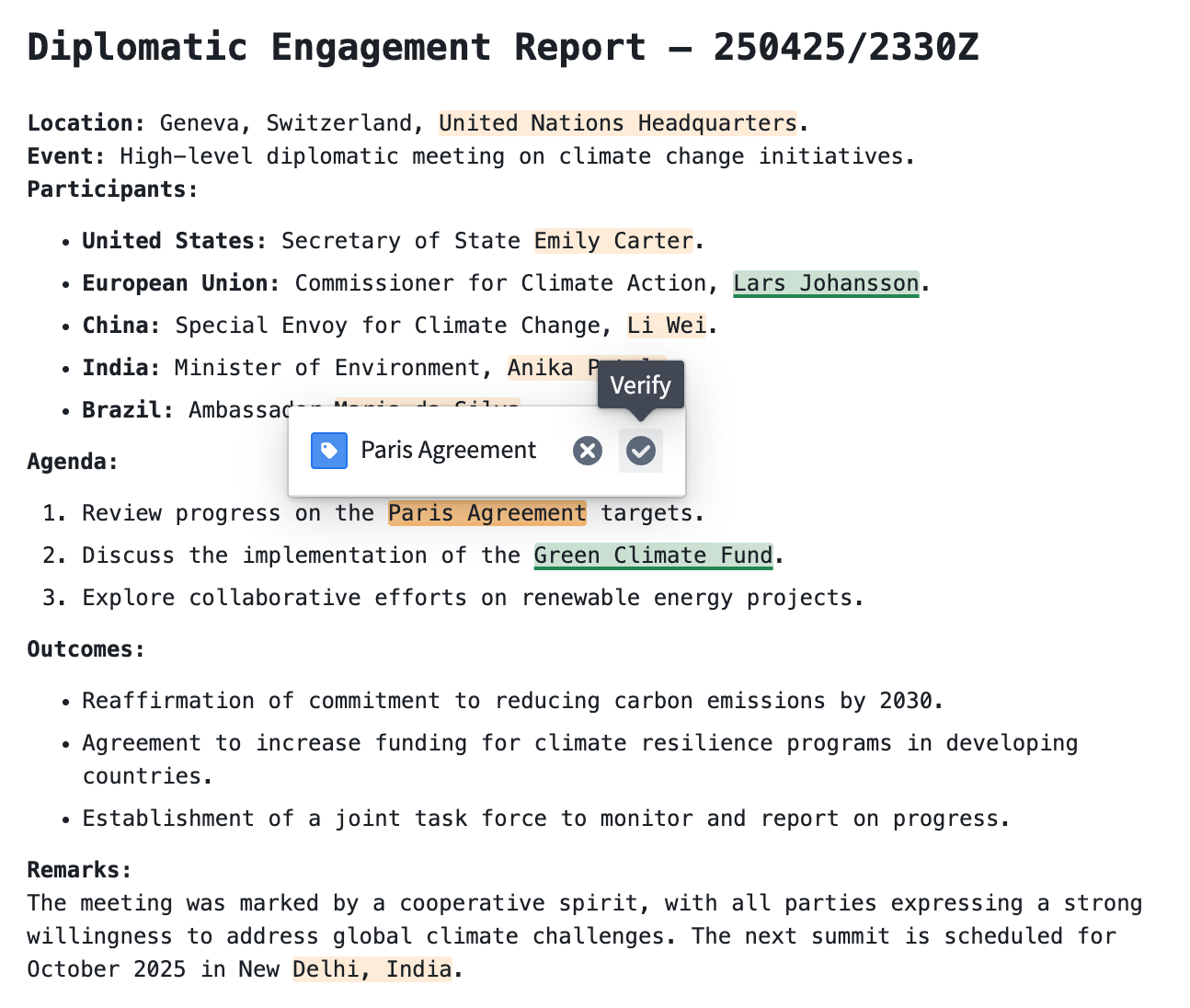

Annotate and tag text in Workshop with the Markdown widget

Date published: 2025-07-03

The Markdown widget in Workshop now supports text tagging with the new Annotation feature. With this feature, builders can seamlessly display, create, and interact with annotation objects on text directly in the Markdown widget.

An example of a Markdown widget with a configured "create annotation" action.

Key highlights of this feature include:

- Visual tags: Display annotation objects as highlighted or underlined text with configurable colors.

- On-click interactions: Users can interact with existing annotation objects in the widget by configuring actions and events.

- User tagging: Enable the creation of new annotation objects on specific portions of text.

An example of a Markdown widget with configured annotation interactions.

To learn more about configuring Annotations, refer to the Markdown widget documentation.

Your feedback matters

We want to hear about your experience with Workshop and welcome your feedback. Share your thoughts with Palantir Support channels or on our Developer Community ↗ using the workshop tag ↗.

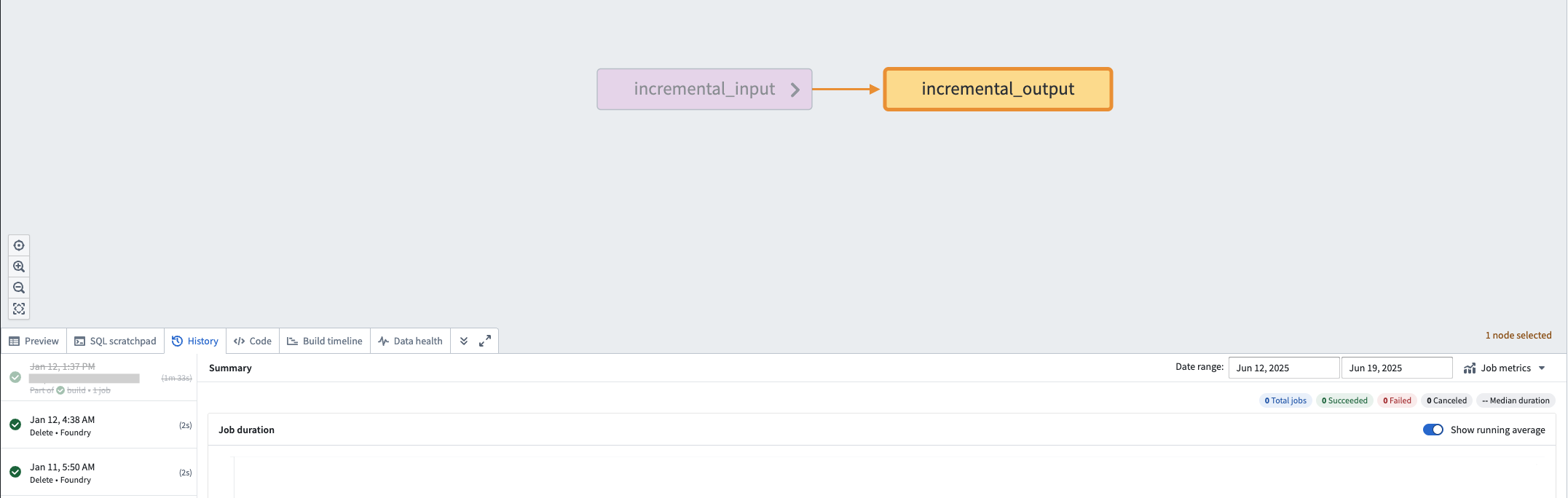

Limit batch size of incremental inputs to save time and compute costs

Date published: 2025-07-01

When running an incremental transform, you may encounter the following situations:

- An output is built as a SNAPSHOT because the entire input needs to be read from the beginning (for example, the semantic version of the incremental transform was increased).

- An output is built incrementally, but one or more inputs to the transform receive numerous transactions that collectively contain a lot of unprocessed data.

Typically, when an output dataset is built incrementally, all unprocessed transactions of each input dataset are processed in the same job. This job can take days to finish, often with no incremental progress. If the job fails halfway through, all progress is lost, and the output would need to be rebuilt. This process often results in undesirable costs and errors and does not address pipelines where large amounts of data need to be frequently processed.

Limiting the maximum number of transactions that should be processed per job offers a solution to this time-consuming problem.

An animation of incremental transform builds. On the left, the transform without transaction limits is constantly working on one job without noticeable progress. On the right, the transform has set a transaction limit of 3 for the input and is progressing through jobs consistently.
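To make the effect of a limit concrete, here is a minimal, purely illustrative sketch of how a backlog of unprocessed transactions splits into jobs. It does not use the transforms API or reflect how builds are actually scheduled; `plan_jobs` and the transaction names are hypothetical.

```python
def plan_jobs(unprocessed_transactions, transaction_limit=None):
    """Split a backlog of unprocessed transactions into per-job batches.

    Without a limit, one job reads the whole backlog. With a limit,
    each job reads at most `transaction_limit` transactions.
    """
    backlog = list(unprocessed_transactions)
    if not backlog:
        return []
    if transaction_limit is None:
        return [backlog]
    if transaction_limit < 1:
        raise ValueError("transaction_limit must be a positive integer")
    return [
        backlog[start:start + transaction_limit]
        for start in range(0, len(backlog), transaction_limit)
    ]


backlog = [f"txn_{i}" for i in range(1, 11)]  # 10 hypothetical transactions

print(len(plan_jobs(backlog)))                       # 1 job reads all 10
print(len(plan_jobs(backlog, transaction_limit=3)))  # 4 jobs: 3 + 3 + 3 + 1
```

With a limit of 3, a failure in one job would put at most three transactions' worth of work at risk rather than the whole backlog, which is the behavior the animation contrasts.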

Add transaction limits to inputs

If a transform and its inputs satisfy all requirements, you can configure each incremental input using the transaction_limit setting. Each input can be configured with a different limit. The example below configures an incremental transform to use the following:

- Two incremental inputs, each with a different transaction limit

- An incremental input that does not use a transaction limit

- A snapshot input

```python
from transforms.api import transform, Input, Output, incremental


@incremental(
    v2_semantics=True,
    strict_append=True,
    snapshot_inputs=["snapshot_input"]
)
@transform(
    # Incremental input configured to read a maximum of 3 transactions
    input_1=Input("/examples/input_1", transaction_limit=3),
    # Incremental input configured to read a maximum of 2 transactions
    input_2=Input("/examples/input_2", transaction_limit=2),
    # Incremental input without a transaction limit
    input_3=Input("/examples/input_3"),
    # Snapshot input whose entire view is read each time
    snapshot_input=Input("/examples/input_4"),
    output=Output("/examples/output")
)
def compute(input_1, input_2, input_3, snapshot_input, output):
    ...
```

Next steps and additional resources

After configuring your incremental transform with transaction limits, you can continue to configure and monitor your builds with the following features and tools:

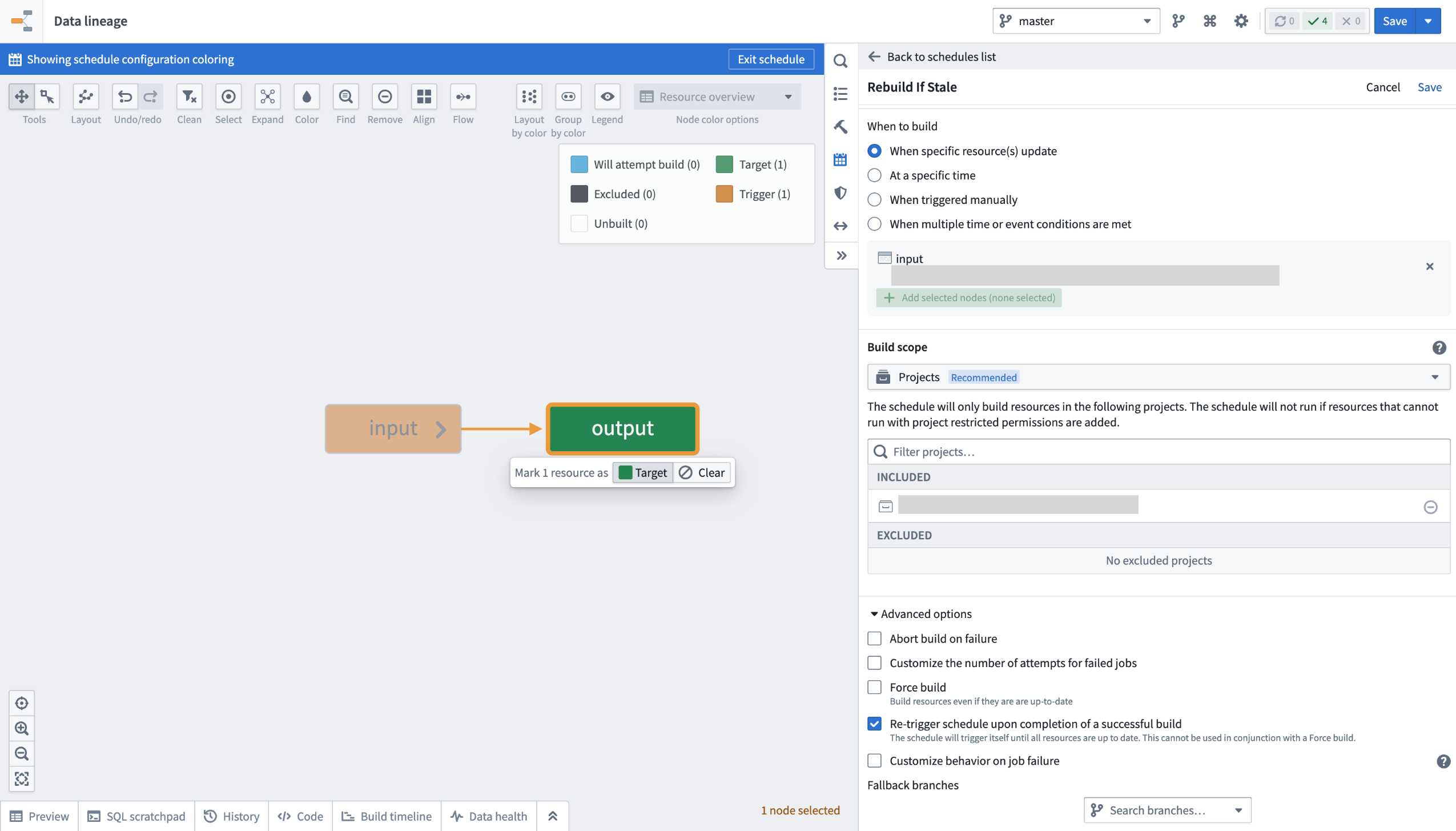

- Create a build schedule: Configure a schedule in Data Lineage to build at a regular interval, and enable an option that ensures your data is never stale.

Ensure your data is always up-to-date by configuring a build schedule.

- Verify job ranges: Review Spark details for your build jobs to verify the transaction limits read per input.

- Learn about read ranges when transaction limits are set: Review how the added, current, and previous read ranges are used differently when incremental transforms are configured with and without transaction limits.

Requirements and limitations

To use transaction limits in an incremental transform, ensure you have access to the necessary tools and services and that the transforms and datasets meet the requirements below.

The transform must meet the following conditions:

- The incremental decorator is used, and the v2_semantics argument is set to True.

- It is configured to use Python transforms version 3.25.0 or higher. Configure a job with module pinning to use a specific version of Python transforms.

- It cannot be a lightweight transform.

Each input dataset must meet the following conditions to be configured with a transaction limit:

- It must be a transactional dataset input.

- In the current view of the dataset, the input must have only APPEND transactions; however, the starting transaction can be a SNAPSHOT.

- It cannot be a snapshot input.
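The requirements above can be expressed as a small pre-flight checklist. The sketch below is only an illustration of those rules: the function names, arguments, and messages are hypothetical and are not part of the transforms API, and it assumes version strings are plain dotted numbers such as 3.25.0.

```python
def parse_version(version):
    """Turn '3.25.0' into (3, 25, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def transform_problems(v2_semantics, transforms_version, is_lightweight):
    """List which transform-level requirements are not met."""
    problems = []
    if not v2_semantics:
        problems.append("v2_semantics must be True")
    if parse_version(transforms_version) < (3, 25, 0):
        problems.append("Python transforms version must be 3.25.0 or higher")
    if is_lightweight:
        problems.append("lightweight transforms are not supported")
    return problems


def input_problems(transaction_types, is_transactional=True,
                   is_snapshot_input=False):
    """List which input-level requirements are not met.

    `transaction_types` is the current view of the input, oldest first,
    for example ["SNAPSHOT", "APPEND", "APPEND"].
    """
    problems = []
    if not is_transactional:
        problems.append("input must be a transactional dataset")
    if is_snapshot_input:
        problems.append("snapshot inputs cannot use a transaction limit")
    # The starting transaction may be a SNAPSHOT; all later ones must be APPEND.
    first, rest = transaction_types[:1], transaction_types[1:]
    starts_ok = all(t in ("APPEND", "SNAPSHOT") for t in first)
    rest_ok = all(t == "APPEND" for t in rest)
    if not (starts_ok and rest_ok):
        problems.append("current view must contain only APPEND transactions "
                        "after an optional starting SNAPSHOT")
    return problems


print(transform_problems(True, "3.25.0", False))         # []
print(input_problems(["SNAPSHOT", "APPEND", "APPEND"]))  # []
```

Comparing versions as tuples rather than strings matters here: as strings, "3.9.0" would sort after "3.25.0" even though it is the older release.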

Your feedback matters

We want to hear about your experiences when configuring incremental transforms with transaction limits, and we welcome your feedback. Share your thoughts with Palantir Support channels or our Developer Community ↗.

Roll back pipelines to a previous state in Data Lineage [Beta]

Date published: 2025-07-01

When building your pipeline, you may need to roll back a dataset and all of its downstream dependents to an earlier version. There can be many reasons for this, including the following:

- You identified a mistake in the logic required to build a dataset and need to revert it.

- Incorrect data was pushed into your pipeline from an upstream source.

- An outage occurred, and you want to quickly navigate back to an earlier state of your pipeline.

The pipeline rollback feature allows you to revert to an earlier transaction of an upstream dataset. When performing a rollback, the data provenance of the upstream dataset transaction is used to identify its downstream datasets and their corresponding transactions to create a final pipeline rollback state. Without this feature, properly rolling back each affected dataset would typically require several separate steps. Pipeline rollback reduces this to the few simple steps discussed below and lets you preview the final pipeline state before confirming and proceeding with the rollback. Pipeline rollback also ensures that the incrementality of your pipeline is preserved.

As you set up your rollback, you can choose to exclude any downstream datasets; these datasets will remain unchanged as the pipeline is rolled back to the selected transaction.
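Conceptually, the set of datasets affected by a rollback is the selected dataset plus everything downstream of it, minus any exclusions. The sketch below illustrates that idea with a plain graph traversal. It is a simplification under stated assumptions, not the feature's implementation: the real feature works from transaction-level data provenance, `datasets_to_roll_back` and the lineage dictionary are hypothetical, and the sketch assumes that excluding a dataset leaves only that dataset unchanged while datasets further downstream are still included.

```python
from collections import deque


def datasets_to_roll_back(downstream, root, excluded=()):
    """Return the root dataset and everything downstream of it,
    minus explicitly excluded datasets (which are left unchanged).

    `downstream` maps each dataset name to its direct dependents.
    """
    seen = {root}
    queue = deque([root])
    # Breadth-first walk over the dependency graph starting at the root.
    while queue:
        current = queue.popleft()
        for child in downstream.get(current, ()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return sorted(seen - set(excluded))


# Hypothetical lineage: raw -> clean -> {report, features -> model_input}
lineage = {
    "raw": ["clean"],
    "clean": ["report", "features"],
    "features": ["model_input"],
}

print(datasets_to_roll_back(lineage, "raw"))
print(datasets_to_roll_back(lineage, "raw", excluded=["report"]))
```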

This feature is currently in the beta stage of development, and functionality may change before it is generally available.

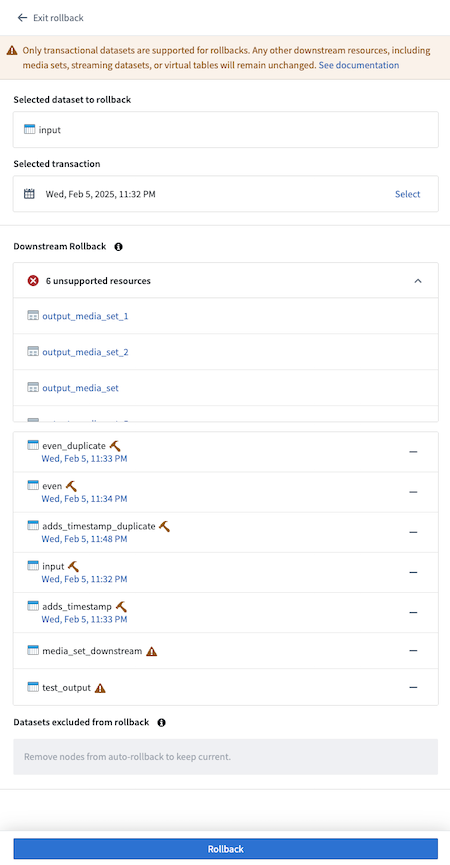

Execute a pipeline rollback

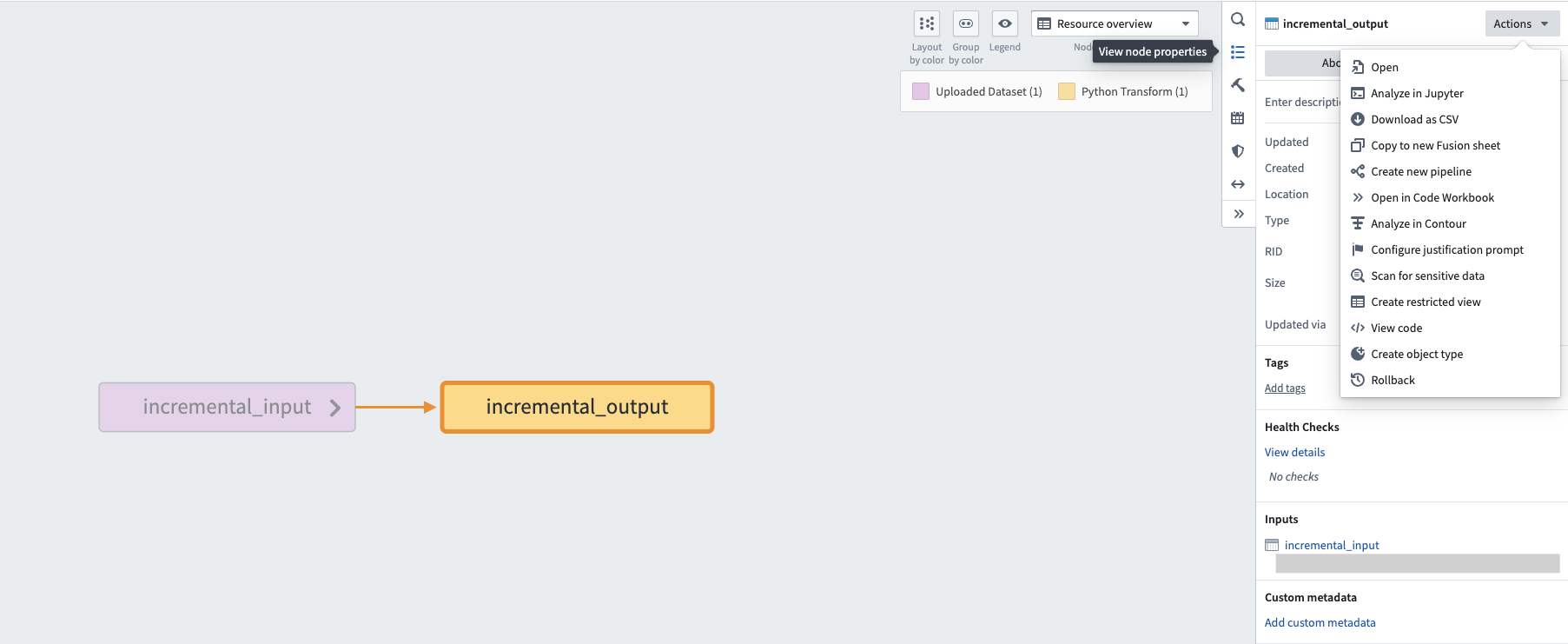

- Navigate to a Data Lineage graph containing the upstream dataset you would like to roll back.

- Select the dataset in the graph. Then, from the branch selector at the top of the graph, select the branch on which you would like to perform the rollback.

- Select View node properties in the panel on the right.

The right editor panel in Data Lineage, with the option to View node properties.

-

Select Actions, then Rollback.

-

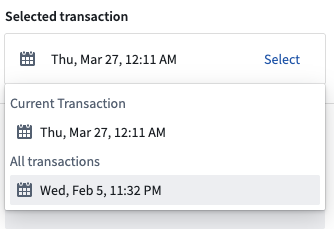

Under Selected transaction, choose the transaction to which you would like to roll back.

An example of a selected transaction.

After you choose the transaction, downstream datasets are automatically identified, and the state each one will revert to if the rollback proceeds is displayed.

Resource types that are unable to be rolled back, including streaming datasets, media sets, and restricted views, will be displayed under the unsupported resources section. Transactional datasets on which you do not have Edit access will also be included in this list.

- Select the timestamp under each dataset to navigate to the History page of the input, where the corresponding transaction will be highlighted.

A list of datasets with timestamps of the builds.

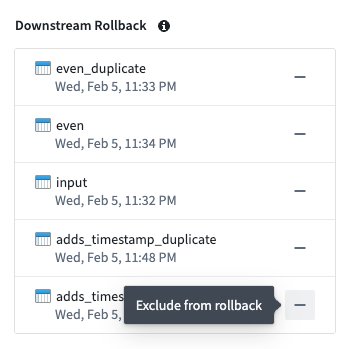

- Select any datasets to exclude from the rollback by selecting — to the right of the dataset name. Once excluded from rollback, the dataset will appear in the Datasets excluded from rollback section.

A list of datasets selected for rollback that you can exclude.

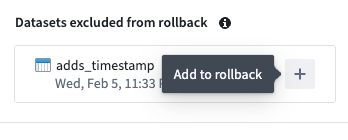

- To add an excluded dataset back to the rollback, select + to the right of the dataset name.

A dataset excluded from rollback that you can choose to add back.

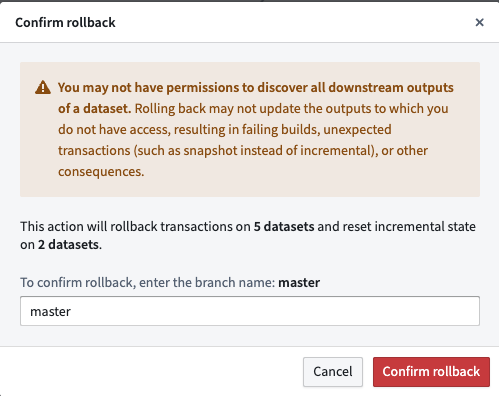

- After finalizing the state of your desired rollback, select Rollback. A confirmation dialog will appear.

A dialog confirming the rollback of five dataset transactions and the incremental state resets of two datasets.

- Enter the branch name as confirmation, then select Confirm rollback to proceed.

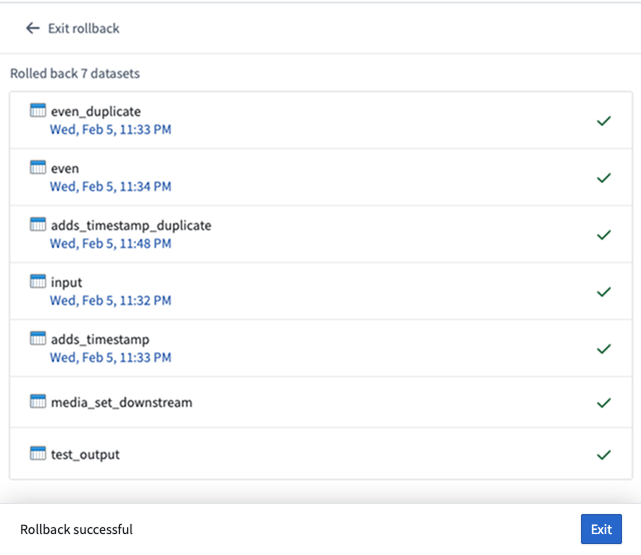

A confirmation of seven successful dataset rollbacks.

- Once the rollback is complete, navigate to the History tab of the datasets and notice that the rolled back transaction is now crossed out, as shown below:

An example of a dataset that was rolled back, with the rolled back transaction crossed out.

Additional resources and support

To learn more about pipeline rollbacks, review our public documentation. We also invite you to share your feedback and any questions you have with Palantir Support or our Developer Community ↗.