Analyze run results

Run results show how your functions performed against test cases and evaluation criteria. Result views are available in the AIP Evals application or the integrated AIP Evals sidebar in AIP Logic and AIP Agent Studio.

If you have configured pass criteria on your evaluators, AIP Evals will automatically determine a Passed or Failed status for each test case. The results page displays the overall pass percentage across all test cases.
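The pass percentage is the share of test cases marked Passed. The sketch below is an illustration only. It assumes each evaluator produces a numeric score, that a pass criterion is a minimum threshold, and that a test case passes only if every evaluator meets its threshold. `TestCaseResult`, `passes`, and `pass_percentage` are hypothetical names and are not part of any AIP Evals API.

```python
from dataclasses import dataclass


@dataclass
class TestCaseResult:
    # Hypothetical shape: evaluator name -> numeric score for one test case.
    scores: dict[str, float]


def passes(result: TestCaseResult, thresholds: dict[str, float]) -> bool:
    # Assumption: a test case passes only if every evaluator with a
    # configured threshold meets or exceeds that threshold.
    return all(result.scores[name] >= minimum for name, minimum in thresholds.items())


def pass_percentage(results: list[TestCaseResult], thresholds: dict[str, float]) -> float:
    # Share of test cases marked Passed, expressed as a percentage.
    if not results:
        return 0.0
    passed = sum(passes(r, thresholds) for r in results)
    return 100.0 * passed / len(results)


thresholds = {"rubric_grader": 9}
results = [
    TestCaseResult({"rubric_grader": 10}),
    TestCaseResult({"rubric_grader": 8}),  # below the minimum of 9, so Failed
    TestCaseResult({"rubric_grader": 9}),
    TestCaseResult({"rubric_grader": 9.5}),
]
print(pass_percentage(results, thresholds))  # 75.0
```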

Test case debug view

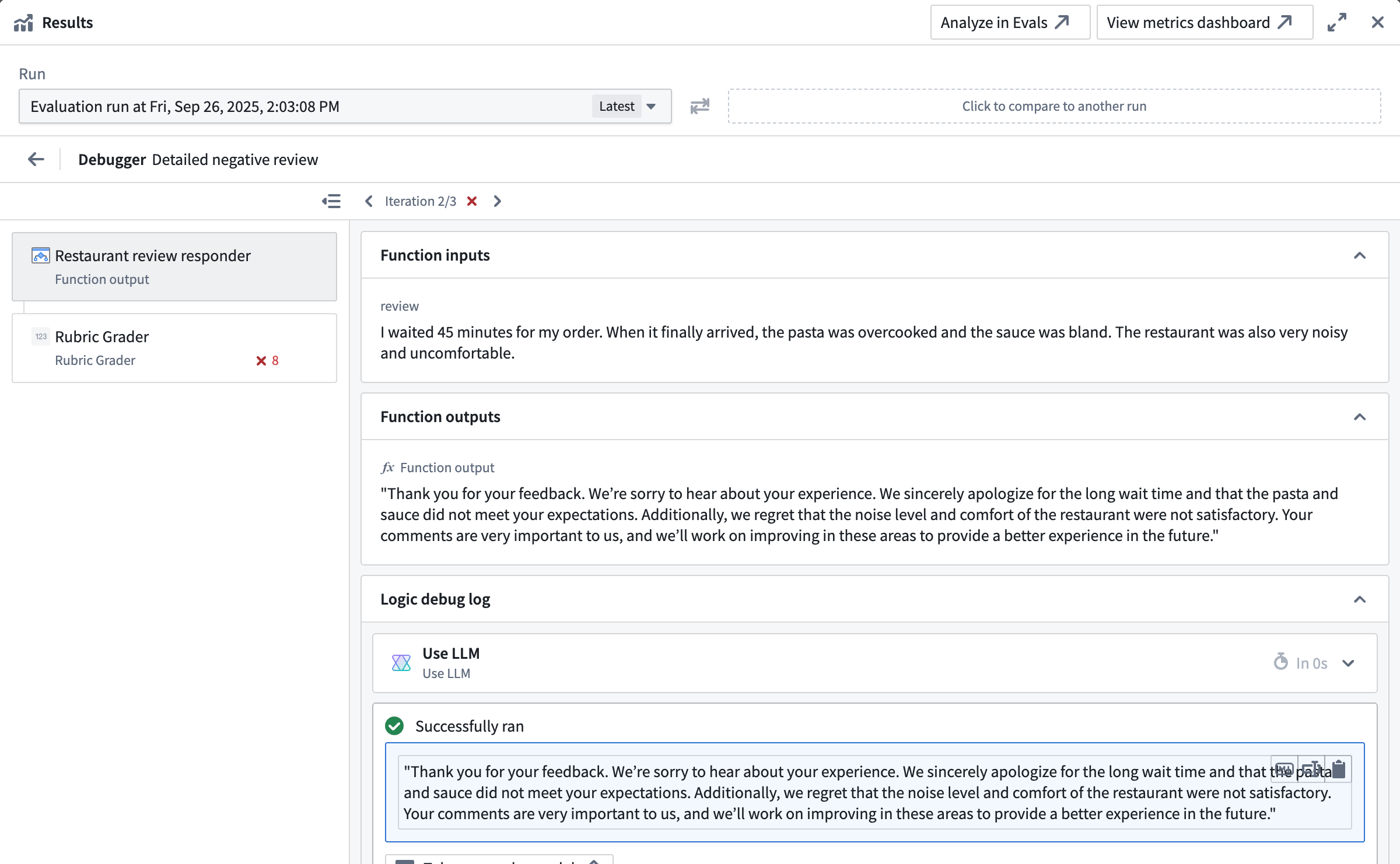

To investigate a specific test case result further, use the debug view. It provides execution traces, input and output data, and error messages for individual test cases so you can understand your function outputs and evaluator results.

Access the debug view

You can open the debug view for a test case from AIP Evals, AIP Logic, or AIP Agent Studio.

In AIP Evals

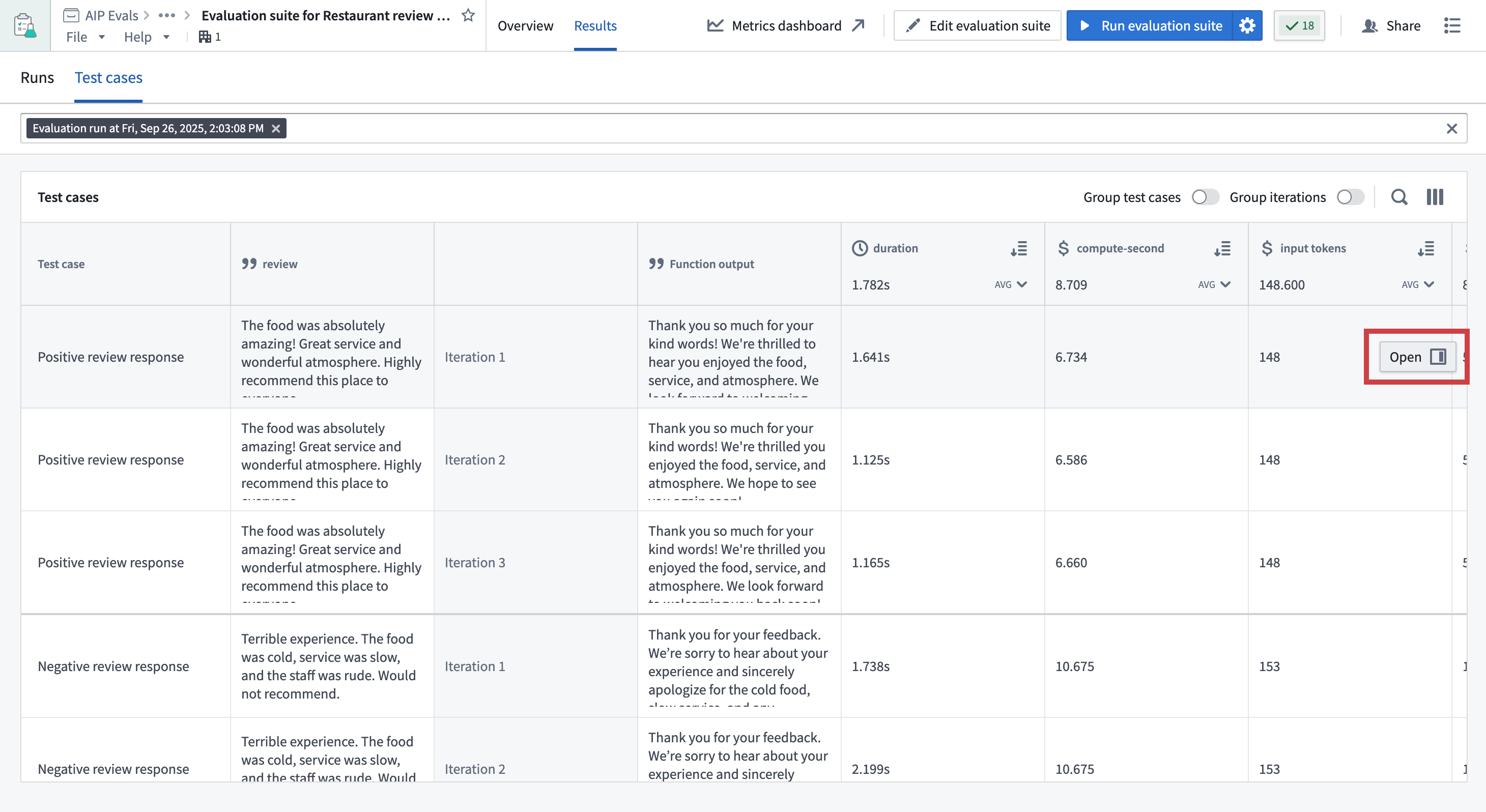

- Open the Results tab on your evaluation suite page.

- Select a run and switch to Test cases.

- Hover over a test case result.

- Select the Open option that appears on the right side of the test case row.

In AIP Logic or AIP Agent Studio

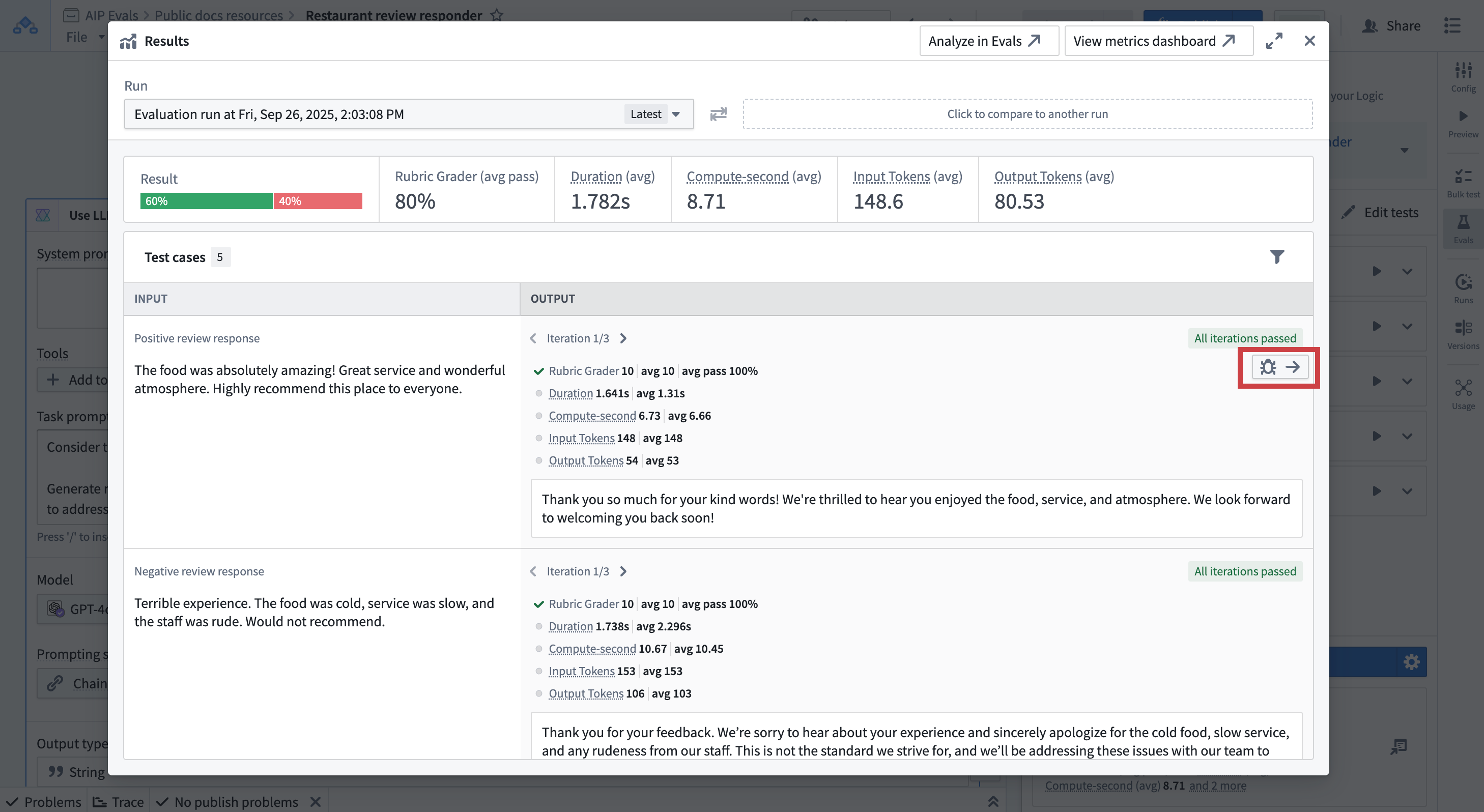

- In the run results dialog view, hover over a test case result.

- Select the Debugger option that appears in the top-right corner of the test case card.

- The debug view will open, showing detailed execution information.

Debug view capabilities

The debug view provides detailed information about test function execution and evaluator results. It allows you to:

- Inspect test function inputs and outputs for a test case.

- TypeScript/Python functions: View a syntax-highlighted preview of the executed code.

- AIP Logic functions: Trace the function execution step-by-step with the native Logic debugger.

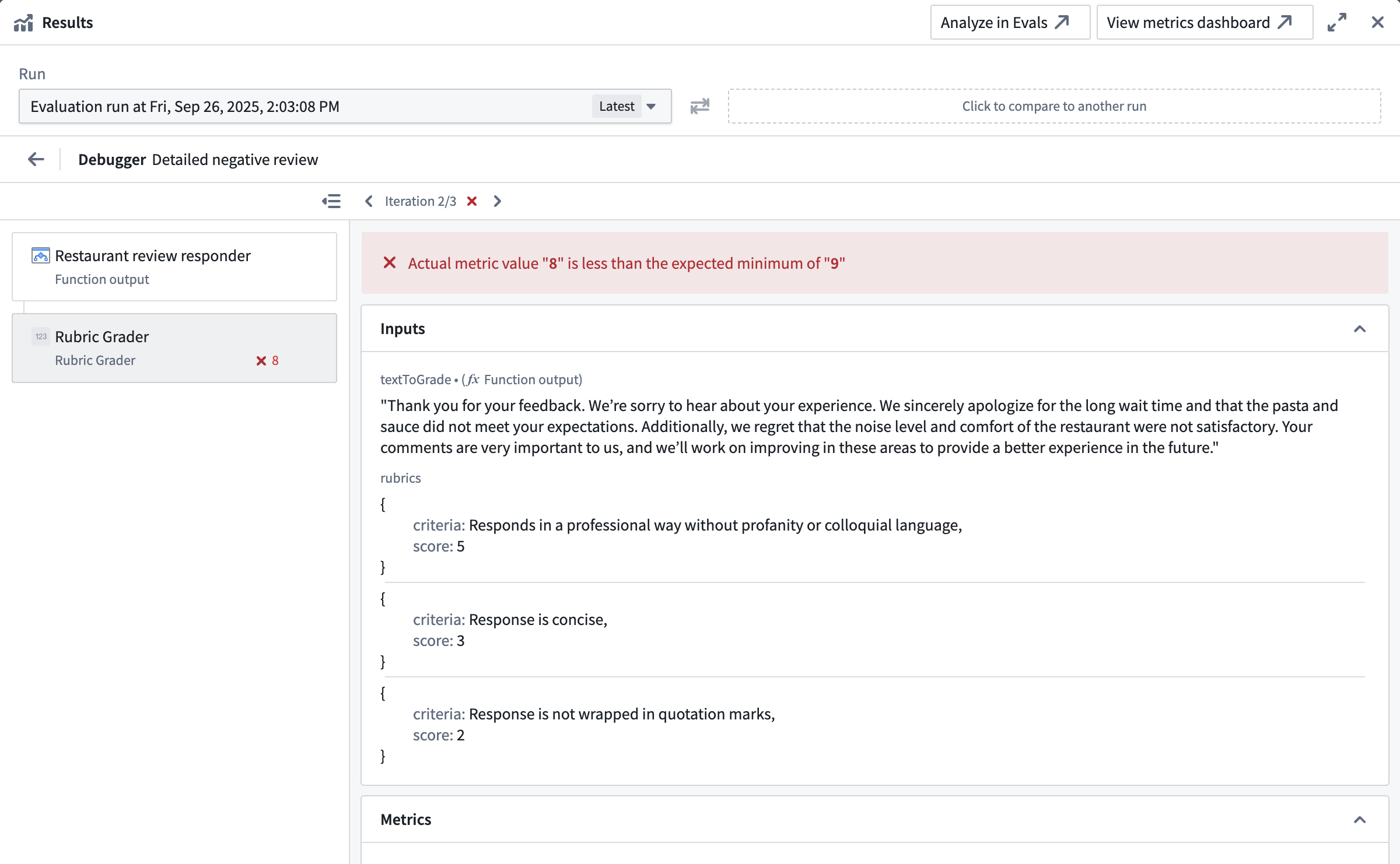

- Evaluators: Check input and output values, expected vs. actual evaluator results, and debug outputs from custom function evaluators.

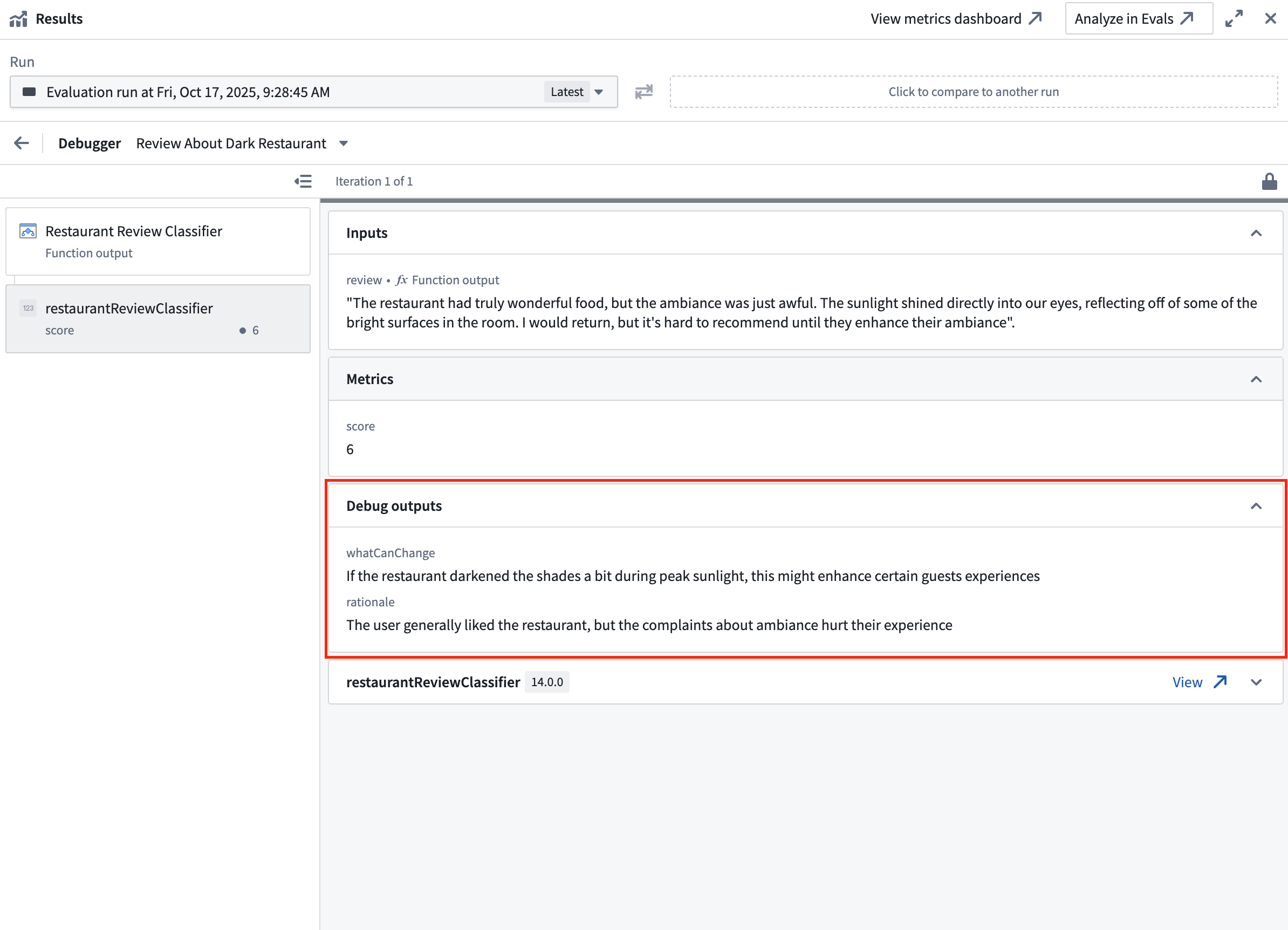

Custom function evaluators can return string values alongside their metric outputs. These strings appear as Debug outputs in the evaluator tab, providing additional context such as reasoning, intermediate values, or diagnostic information.
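To illustrate the idea, the sketch below shows an evaluator that returns a metric together with a debug string explaining how it reached that metric. It is plain Python, not the Foundry Functions API: the function `exact_match_evaluator` and the `EvaluatorOutput` return type are hypothetical, and the real signature for custom function evaluators may differ.

```python
from dataclasses import dataclass


@dataclass
class EvaluatorOutput:
    # Hypothetical return shape: a metric plus a free-text debug string.
    exact_match: bool
    debug: str


def exact_match_evaluator(actual: str, expected: str) -> EvaluatorOutput:
    # Normalize surrounding whitespace and quotation marks before comparing.
    normalized = actual.strip().strip('"')
    matched = normalized == expected.strip()
    # The debug string records the intermediate values behind the result.
    debug = f"normalized actual={normalized!r}, expected={expected!r}, matched={matched}"
    return EvaluatorOutput(exact_match=matched, debug=debug)


result = exact_match_evaluator('"Paris"', "Paris")
print(result.exact_match)  # True
print(result.debug)
```

A string like this is the kind of reasoning or intermediate value that would appear under Debug outputs.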

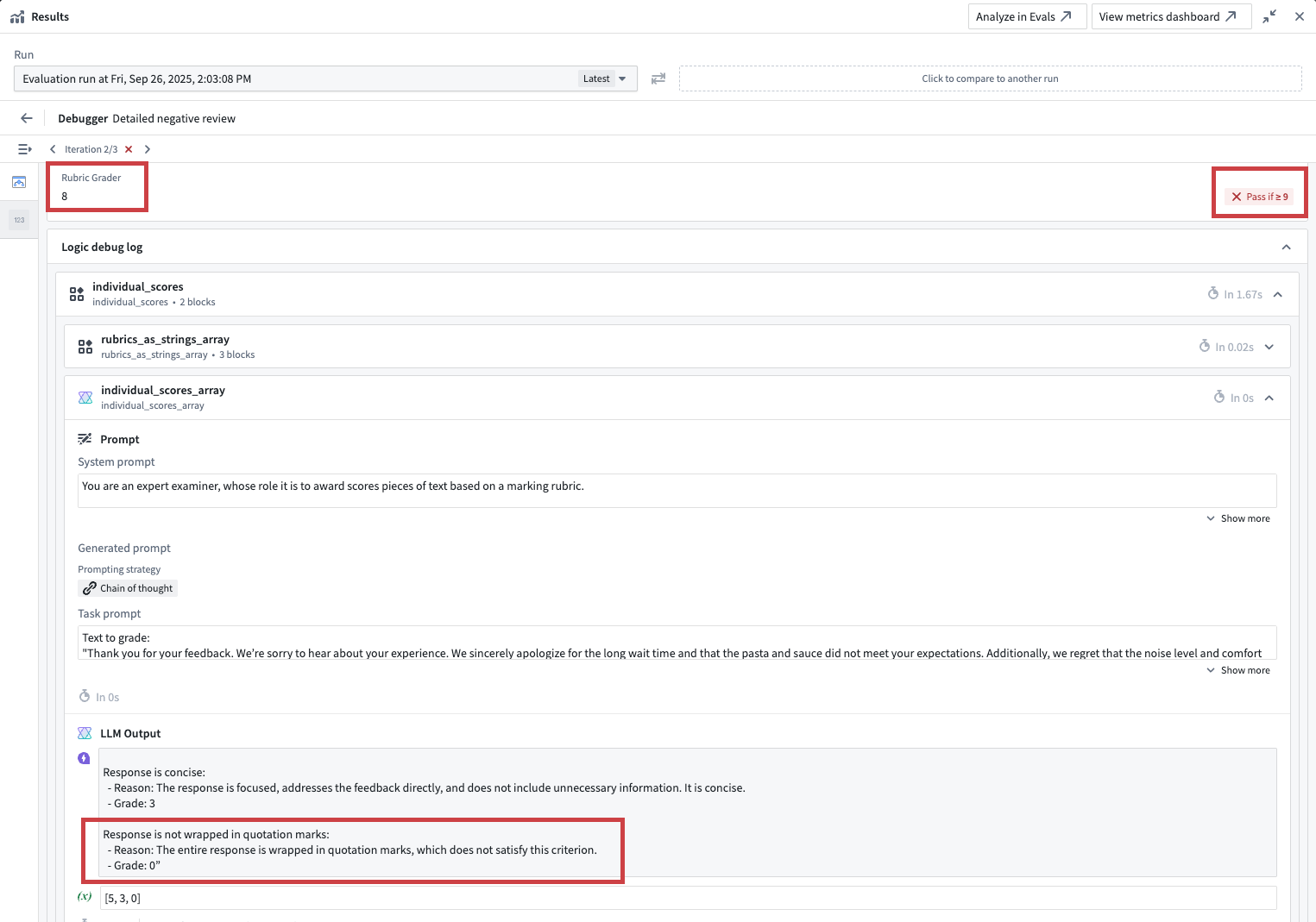

Evaluation functions backed by AIP Logic, such as the built-in Rubric grader and Contains key details evaluators, provide access to the native Logic debugger. This helps you understand why an evaluation produced a specific result, which is particularly helpful when using an LLM-as-a-judge evaluator.

In the example in the screenshot below, the Rubric grader evaluator failed because its score of 8 did not reach the defined minimum threshold of 9. The Logic debugger shows that the LLM judge awarded only 8 points because the response was wrapped in quotation marks. To earn a higher score, we need to improve our prompt.

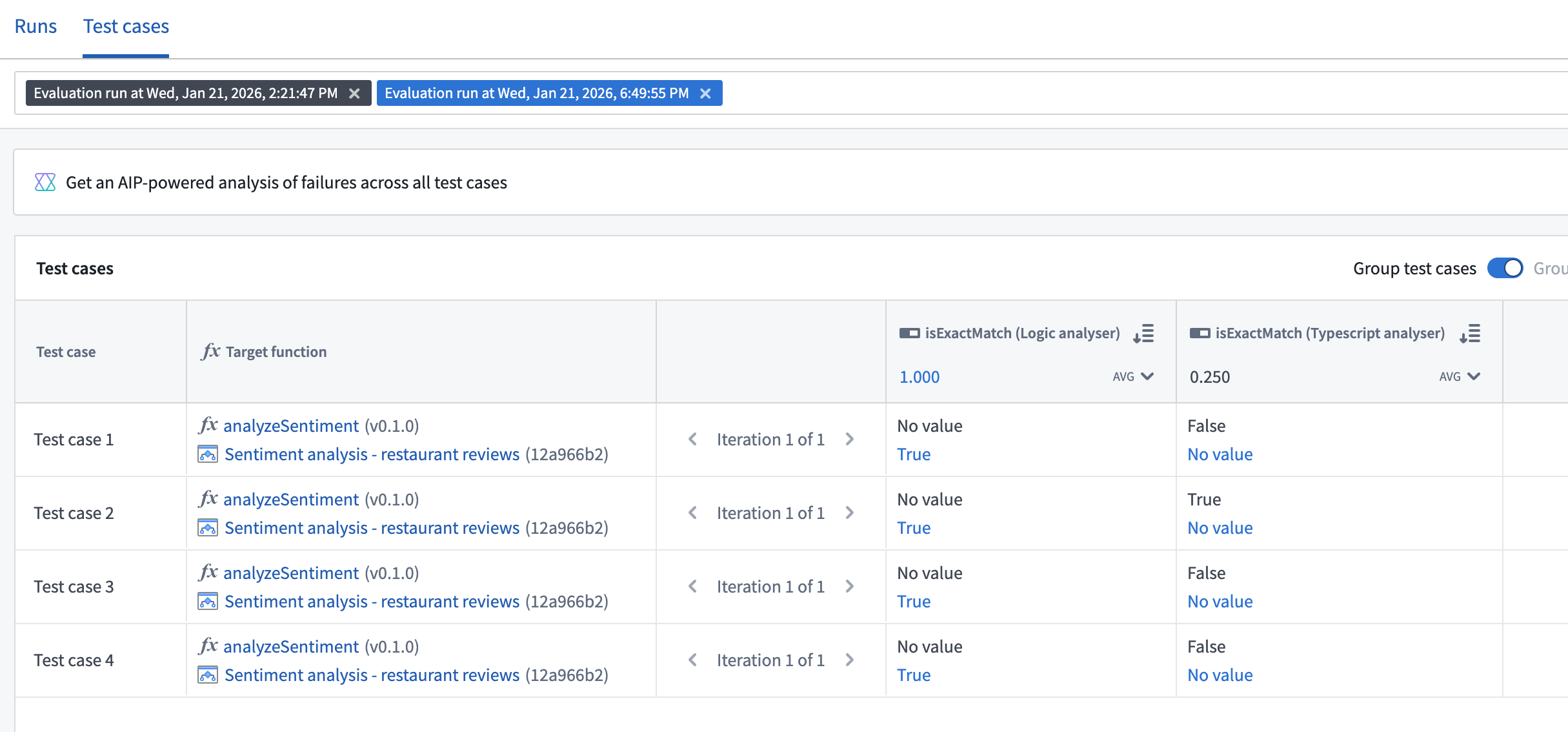

Compare results across target functions

When your evaluation suite has multiple target functions configured, you can select and compare results from runs across different targets in AIP Evals. This is useful for analyzing how different function implementations perform on the same test cases.

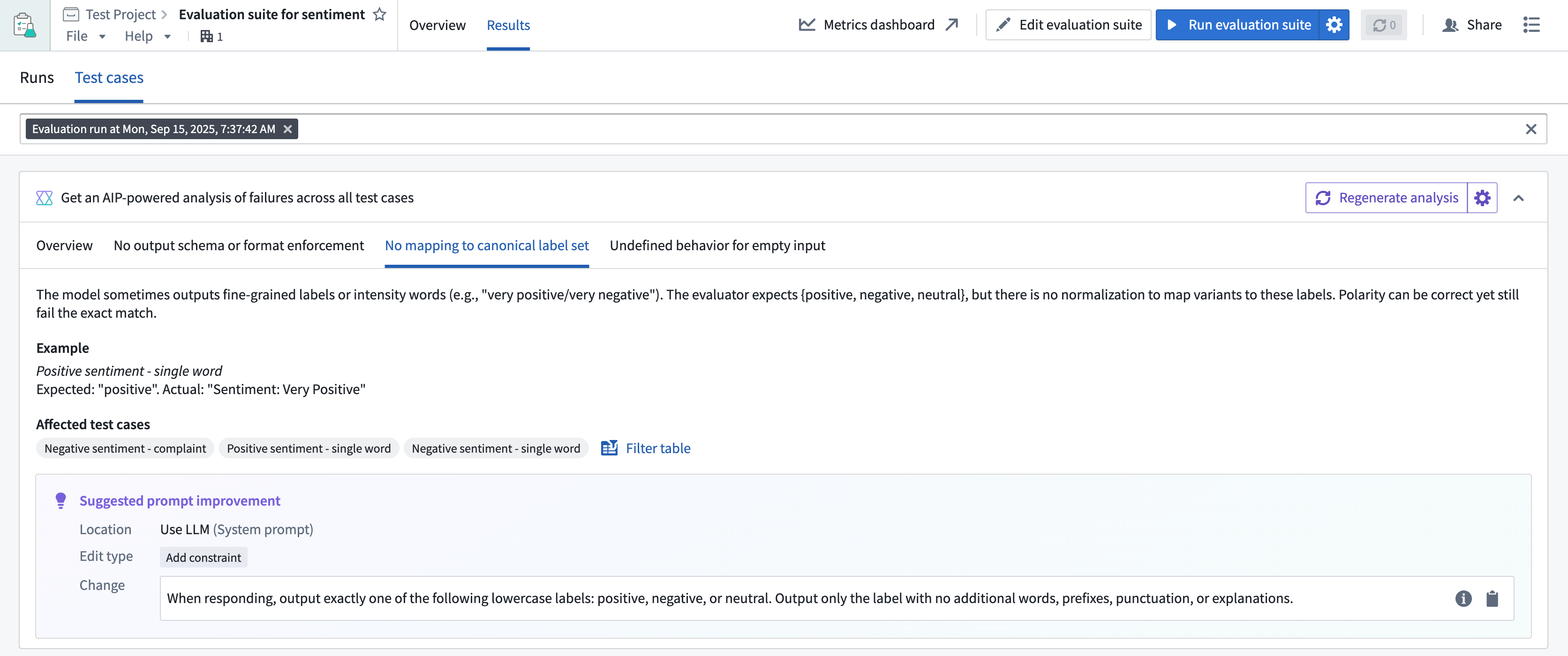

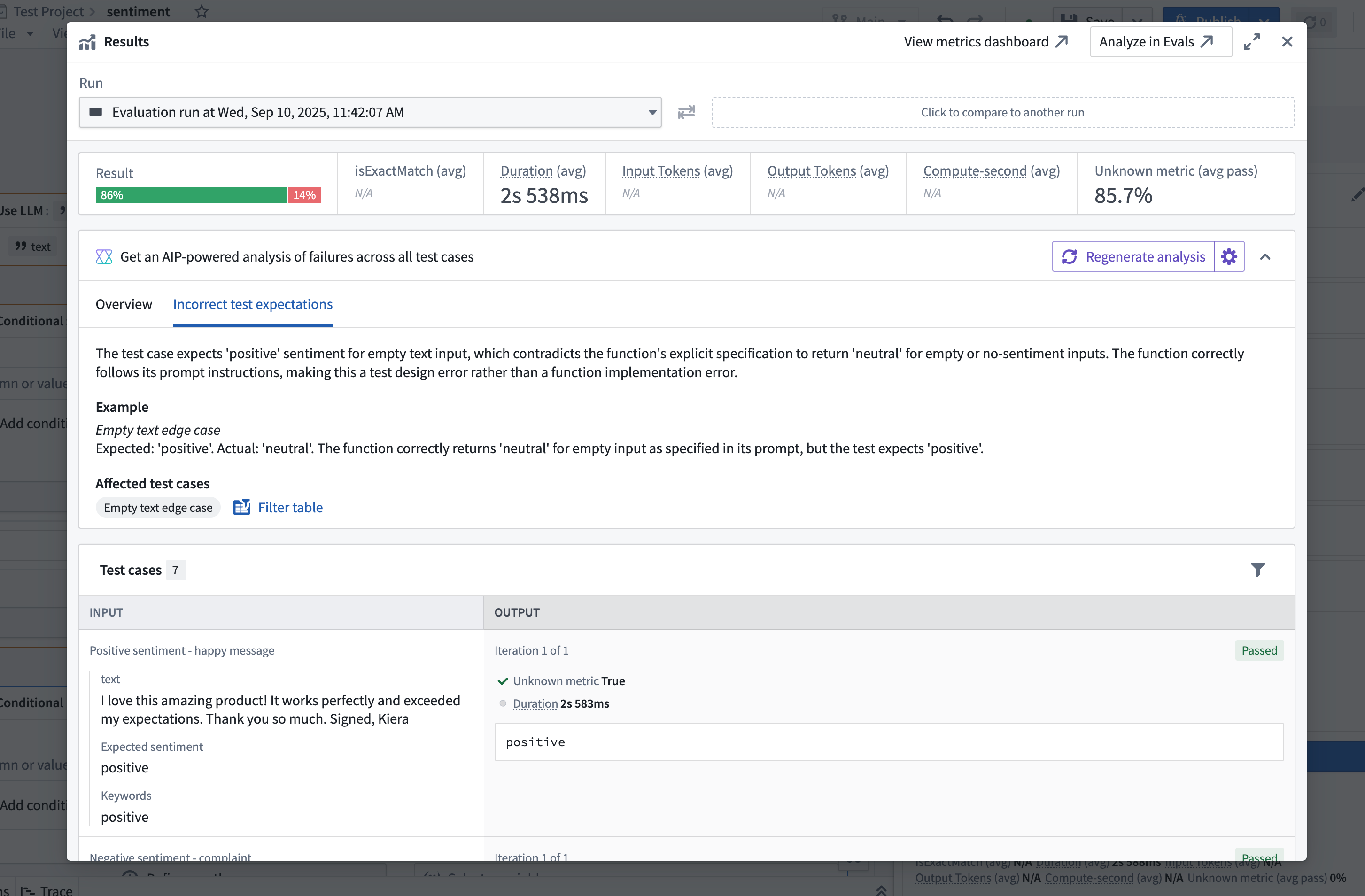
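Conceptually, comparing targets means lining up results for the same test case IDs. The sketch below assumes each run is available as a mapping from test case ID to a Passed (`True`) or Failed (`False`) status. The `compare_targets` helper, the target names, and the IDs are made up for illustration.

```python
def compare_targets(
    runs: dict[str, dict[str, bool]],
) -> tuple[dict[str, float], list[str]]:
    """Return each target's pass rate (percent) on the test cases all targets
    share, plus the IDs of shared test cases where the targets disagree."""
    if not runs:
        return {}, []
    # Only compare test cases that every target was run against.
    shared = set.intersection(*(set(results) for results in runs.values()))
    if not shared:
        return {target: 0.0 for target in runs}, []
    rates = {
        target: 100.0 * sum(results[case] for case in shared) / len(shared)
        for target, results in runs.items()
    }
    # A test case is a disagreement if the targets produced different statuses.
    disagreements = sorted(
        case for case in shared
        if len({results[case] for results in runs.values()}) > 1
    )
    return rates, disagreements


runs = {
    "logic_v1": {"tc1": True, "tc2": False, "tc3": True},
    "logic_v2": {"tc1": True, "tc2": True, "tc3": True, "tc4": True},
}
rates, disagreements = compare_targets(runs)
print({target: round(rate, 1) for target, rate in rates.items()})  # {'logic_v1': 66.7, 'logic_v2': 100.0}
print(disagreements)  # ['tc2']
```

Restricting the comparison to shared test cases keeps the pass rates comparable. Here `tc4` is ignored because only one target ran it.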

Automate debugging and prompt optimization with the results analyzer

The AIP Evals results analyzer uses large language models to help you quickly understand why test cases failed and how to fix them. It automatically clusters failures into root cause categories and, when appropriate, proposes targeted prompt changes.

Use the results analyzer to:

- Summarize failure patterns across a run.

- Group failures into clear, distinct categories with examples.

- Receive concrete suggestions to improve your prompts.

Prerequisites

- An evaluation suite with at least one run that has failing test cases.

- An AIP Logic target function. The results analyzer is only supported for AIP Logic functions.

Generate an analysis

You can use the results analyzer from either AIP Evals or AIP Logic.

From AIP Evals:

- Open the Results tab on your evaluation suite page.

- Select a single run that contains failing test cases and select the Test cases tab.

- Select Generate analysis.

From AIP Logic:

- Open the run results dialog view from the AIP Evals sidebar.

- Select Generate analysis.

Configuration

You can optionally configure:

- Model: Select the LLM that powers every step of the results analyzer (individual case reviews, the aggregate summary, and prompt suggestions). Higher-tier models can surface deeper insights but may take longer and consume more tokens. A recommended model is preselected.

- Max categories: Controls how many distinct failure patterns the model is prompted to return. The default is 3, and you can configure up to 6. Raising the maximum enables finer-grained buckets when failures stem from different causes, but setting it too high may create redundant categories if issues share the same root cause.

- Max test cases: Sets the upper bound on the number of failing test cases to analyze. The default is 100, and you can configure up to 500. Only failing cases are considered. When a run has more failing cases than this limit, the analyzer randomly samples that many failures, so repeated analyses may produce slightly different results.
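The effect of Max test cases can be sketched as follows. This is an approximation under stated assumptions. The helper `select_failures` is hypothetical, the lower bound of 1 is an assumption, and the analyzer's actual sampling method is not documented here. The sketch uses uniform sampling without replacement via Python's `random.sample`.

```python
import random
from typing import Optional

MAX_TEST_CASES_DEFAULT = 100
MAX_TEST_CASES_LIMIT = 500


def select_failures(
    results: dict[str, bool],
    max_test_cases: int = MAX_TEST_CASES_DEFAULT,
    rng: Optional[random.Random] = None,
) -> list[str]:
    """Return the IDs of the failing test cases to analyze.

    Passing cases are ignored. If there are more failures than
    max_test_cases, a uniform random sample of that size is returned,
    so repeated calls can pick different cases.
    """
    # Assumed valid range: 1 to the documented maximum of 500.
    if not 1 <= max_test_cases <= MAX_TEST_CASES_LIMIT:
        raise ValueError(f"max_test_cases must be between 1 and {MAX_TEST_CASES_LIMIT}")
    failing = sorted(case for case, passed in results.items() if not passed)
    if len(failing) <= max_test_cases:
        return failing
    rng = rng or random.Random()
    return rng.sample(failing, max_test_cases)


# 900 test cases, of which every third one (300 in total) failed.
results = {f"tc{i}": i % 3 != 0 for i in range(900)}
first = select_failures(results, rng=random.Random(1))
second = select_failures(results, rng=random.Random(2))
print(len(first), len(second))  # 100 100
print(len(select_failures(results, max_test_cases=500)))  # 300
```

The two default-limit calls each analyze 100 of the 300 failures but can pick different subsets. This is why analyses of the same run may differ slightly.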

Understand the results

The results analyzer displays a root cause analysis card with an overview and category tabs.

- Overview: A brief, top-line summary of the most important failure patterns.

- Categories: Each category includes:

  - Name and description of the underlying issue.

  - Example: A representative failing test case and a short explanation.

  - Affected test cases: Tags you can select to filter the table to exactly these cases.

Use the Filter table option to focus the results table on a category or a single example test case, allowing you to drill down further with the debug view or evaluator details.
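To make the card's structure concrete, the sketch below models an analysis as an overview plus categories. Each category holds its affected test case IDs, and the sketch shows what filtering the table to one category amounts to. The class names, field names, and the `filter_table` helper are hypothetical. They mirror the card's labels rather than any documented data format.

```python
from dataclasses import dataclass, field


@dataclass
class FailureCategory:
    name: str
    description: str
    example_case: str  # ID of a representative failing test case
    explanation: str
    affected_cases: list[str] = field(default_factory=list)


@dataclass
class Analysis:
    overview: str
    categories: list[FailureCategory]


def filter_table(rows: list[dict], category: FailureCategory) -> list[dict]:
    # Keep only the result rows whose test case ID is tagged in this category.
    wanted = set(category.affected_cases)
    return [row for row in rows if row["test_case_id"] in wanted]


rows = [
    {"test_case_id": "tc1", "status": "Failed"},
    {"test_case_id": "tc2", "status": "Passed"},
    {"test_case_id": "tc3", "status": "Failed"},
]
quoting = FailureCategory(
    name="Quoted responses",
    description="The response is wrapped in quotation marks.",
    example_case="tc1",
    explanation="The judge deducted points for the quotation marks.",
    affected_cases=["tc1", "tc3"],
)
analysis = Analysis(overview="Most failures are formatting issues.", categories=[quoting])
print([row["test_case_id"] for row in filter_table(rows, analysis.categories[0])])  # ['tc1', 'tc3']
```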

Prompt suggestions

For AIP Logic functions, each category can include a Suggested prompt improvement that you can copy with one click. Each suggestion specifies:

- Location: System prompt or Task prompt.

- Edit type: One of:

  - Append instruction

  - Prepend context

  - Replace text

  - Add example

  - Add constraint

  - Clarify format

- Change: The exact text to add or replace, along with a brief reason why the suggestion will improve your prompt.

Review the suggestions, apply the appropriate changes to your Logic function's prompts, and then re-run your evaluation suites to validate the improvements.
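A suggestion can be thought of as a small record with a location, an edit type, the change text, and a reason. The sketch below models that record. It applies only the three edit types whose effect on a prompt string is mechanical: append, prepend, and replace. The other edit types require a judgment about where the text belongs, so the sketch leaves them to you. The `EditType` enum, the `old_text` field, and the `apply_suggestion` helper are hypothetical. In the product, you copy the suggested text and edit the prompt yourself.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class EditType(Enum):
    APPEND_INSTRUCTION = "Append instruction"
    PREPEND_CONTEXT = "Prepend context"
    REPLACE_TEXT = "Replace text"
    ADD_EXAMPLE = "Add example"
    ADD_CONSTRAINT = "Add constraint"
    CLARIFY_FORMAT = "Clarify format"


@dataclass
class PromptSuggestion:
    location: str  # "System prompt" or "Task prompt"
    edit_type: EditType
    change: str
    reason: str
    old_text: Optional[str] = None  # hypothetical: the text a REPLACE_TEXT edit targets


def apply_suggestion(prompt: str, s: PromptSuggestion) -> str:
    if s.edit_type is EditType.APPEND_INSTRUCTION:
        return f"{prompt}\n{s.change}"
    if s.edit_type is EditType.PREPEND_CONTEXT:
        return f"{s.change}\n{prompt}"
    if s.edit_type is EditType.REPLACE_TEXT:
        if not s.old_text or s.old_text not in prompt:
            raise ValueError("text to replace was not found in the prompt")
        # Replace only the first occurrence of the targeted text.
        return prompt.replace(s.old_text, s.change, 1)
    # The remaining edit types need a human to decide where the text belongs.
    raise NotImplementedError(f"apply {s.edit_type.value!r} manually")


task_prompt = "Answer the user's question."
suggestion = PromptSuggestion(
    location="Task prompt",
    edit_type=EditType.APPEND_INSTRUCTION,
    change="Return the answer without surrounding quotation marks.",
    reason="The judge deducted points for quoted responses.",
)
print(apply_suggestion(task_prompt, suggestion))
```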